Shades of Synchresis

A Proposed Framework for the Classification of Audiovisual Relations in Sound-and-Light Media Installations

While existing analytical frameworks (Chion 1994, Coulter 2010) provide basic classification tools for experimental audiovisual works, they fall short in elaborating the variety of compositional possibilities afforded by Michel Chion’s notion of “synchresis”. I propose to delineate the various shades of synchresis through a case study analysis of the somewhat simpler relations between light and sound in media installation works using luminosonic objects: objects that appear to emit both sound and light in an integrated manner. A graphical framework for the analysis and composition of audiovisual relations between sound media and self-illuminating light will detail various forms of integrated audiovisual dynamics. The framework will relate the compositional concepts of vertical harmonicity and horizontal counterpoint (Chion) to the perceptual notion of “cross-modal binding” (Whitelaw 2008), thus linking compositional dynamics to perceptual effects and affects.

Objectives

Despite a wealth of publications regarding the history and contemporary praxis of audiovisual production, the current literature does not offer a cohesive, detailed, in-depth framework that may account for the compositional dynamics of audiovisual relations. Furthermore, the few existing analytical frameworks addressing the constitution of audiovisual material centre exclusively on screen-based practices, and thus ignore the possibilities afforded by emergent forms of non-screen-based audiovisual production.

In order to address these shortcomings, I would like to delineate various possibilities of audiovisual compositional dynamics in media installations through a case study analysis of works using self-illuminating light objects and spatial sound. Following this examination, I will place audiovisual typologies within a cohesive framework in a manner that highlights relations between audiovisual compositional dynamics and perceptual meaning. 1[1. It is the perceptual meaning of a light- and sound-emitting object — our understanding of the manner by which light and sound objects operate — that grounds subsequent processes of semantic and associational meaning creation.]

I will limit my analytical enquiry to light and sound media installation works utilizing physical objects that appear to emit both light and sound. A luminosonic object 2[2. “Luminosonic” is an original term coined by the author to refer to light- and sound-emitting objects, constructed through the synthesis of the words “luminescence” and “sonic”.] is in this regard an audiovisual object in which — by virtue of the localized production of sound and light — the two media are perceived as integrated, composite material. That is, sound and light are always in direct relation to one another, mutually constituting our perceptual understanding of the luminosonic object. In this sense, my object of analysis is neither light nor sound as separate media, but rather the compositional dynamics between the two, as well as the manner by which this dynamic affects our understanding of the luminosonic object.

I envision the analysis of light and sound behaviour of a luminosonic object through a metaphorical extension of musical orchestration to the notion of audiovisual orchestration. The importance of deepening and extending the possibilities of audiovisual orchestration is highlighted by recent studies on cross-modal perception, which suggest that sensory modalities do not operate in isolation (Varela 1999; Shimojo and Shams 2001; van Wassenhove et al. 2008). Rather, cross-modal integration underlies most of our perceptual activities, even in supposedly unimodal tasks such as listening to music (see Schutz and Lipscomb 2007; Vines et al. 2006; Godøy and Leman 2010). This suggests that greater range and subtlety in the orchestration of audiovisual works, whether using luminosonic objects or traditional screen-based media, may lead to a greater range and strength of the perceptual and affective states experienced by the spectator. A delineation of the various “shades” of synchresis (Chion 1994, 63) thus serves to deepen both analytic and creative aspects of audiovisual production.

Towards an Analysis Framework for Composite Sound-and-Light Materials

In order to explore possibilities of audiovisual dynamics using luminosonic objects, I will propose an original analysis framework that can: 1) account for the compositional dynamics of sound-and-light as composite material; and 2) account for dynamic changes of audiovisual behaviour. Further, while this framework is conceived as an analytical tool, it may also be used as a guide for creative practice and, as such, will: 3) seek to relate compositional strategies with perceptual results.

Precedents for Analytical Framework

While numerous publications provide historical accounts of audiovisual works, few writers work towards an analytical framework of audiovisual relations. In developing my proposed framework, I have expanded on two existing analytical frameworks concerning audiovisual relations provided by Michel Chion and John Coulter. It is important to note, however, that both Chion’s and Coulter’s research focuses on image-sound relations in the context of screen-based media. I have therefore adapted their frameworks to fit my focus on the compositional dynamics between sound and light in media installation work.

Chion’s Audio-Vision remains, nearly two decades after its initial publication, the most in-depth investigation of compositional dynamics in audiovisual media. Chion’s enquiry focuses on the phenomenon of “added value” (Chion 1994, 5) within the cinematic arena, understood as the reciprocal “expressive and informative” (Ibid.) enrichment of sound by image and of image by sound:

Sound shows us the image differently than what the image shows alone, and the image likewise makes us hear sound differently than if the sound were ringing out in the dark. (Chion 1994, 21)

Chion details numerous cinematic examples in which “added value” occurs on a semantic level as sound enhances, complements or opposes the semantic or expressive content of an image, leading to a “product of… mutual influences” (Chion 1994, 22). Furthermore, “added value” may manifest through temporal animation of image by sound or vice versa (Ibid., 13). Sound may temporally animate an image by re-rendering the overall qualitative temporal experience (Ibid.). In addition, sound, by virtue of its internal teleology, may vectorize an image, “orienting [it] toward a future, a goal” or “creating a feeling of imminence and expectation” (Ibid., 13–14).

Chion identifies a special subcategory of “added value,” which he terms “synchresis” (a combination of synchrony and synthesis), to describe “the spontaneous and irresistible weld produced between auditory… and visual phenomenon” (Ibid., 63). Synchresis may occur “independently of any rational logic,” leading to perceptual agglomeration of disparate image-sound combinations. However, the strength of synchretic experience may depend on “[functions] of meaning… contextual determinations… gestaltist laws… [and] rhythm” (Ibid., 63–64). Chion illustrates the phenomenon of synchresis through the example of cinematic fight scenes: synchronous, hyper-real, overdubbed sound welds irresistibly with the visual image of punching. Notably, the synchretic effect remains strong even when the viewer is aware of both the meticulous construction of the audiovisual scene in post-production and the inescapable chasm between the hyper-real “punch” sound and its relatively unimpressive real-world counterpart.

Chion lays the groundwork for an analytical framework through the articulation of vertical and horizontal dimensions of audiovisual relations. Perhaps reflecting his training as a composer, Chion frames these dimensions musically: the vertical dimension delineates audiovisual harmonic relations, while the horizontal dimension delineates audiovisual temporal relations (Ibid., 35–36). The vertical dimension is conceived as a comparison of content appropriateness between auditory and visual media. Audiovisual consonance involves the conventional reinforcement of an image with an appropriate sound, while audiovisual dissonance involves “a discord between [the] figural natures” of image and sound components (Ibid., 37–38). Of course, consonant or dissonant relations between sound and image are tied not to realism but to the conventions of the medium. For instance, the hyper-real “punching” sounds accompanying a fight scene would appear consonant in the context of the cinematic medium despite their lack of realism; however, replacing a “punch” sound with a gong hit results in dissonant audiovisual relations, as the gong hit is an unconventional sonic reinforcement in the cinematic fight scene.

In contrast to the vertical dimension, the horizontal dimension allows comparison of temporal relations between image and sound. Chion’s horizontal dimension extends from audiovisual unison (sound and image operating in a synchronous, unison relation) to audiovisual counterpoint, in which, despite the simultaneity of image and sound tracks, each medium “possesses its own formal individuality” (Ibid., 36). By referring to the musical term counterpoint (literally, “point against point”), simultaneous sounds and images lacking strong temporal correlation can be conceived as two individual “voices”: one auditory and the other visual. 3[3. It is important to note that Chion points to several problems in the use of musical conceptions of harmony and counterpoint in audiovisual studies, particularly in relation to film criticism. First, Chion presents the potential problem of using harmony and counterpoint (devices used to relate “notes… the same raw material”) to describe audiovisual phenomena, which combine two different sensory materials (Chion 1994, 35–36). Furthermore, Chion argues for the dominance of vertical audiovisual relations in the cinematic medium, detailing examples of misdiagnosed and imperceptible audiovisual counterpoint (Ibid., 38–39). However, the latter problem seems specific to the cinematic medium, especially due to the double function of both film sound and image as sensory experiences and semantic signifiers (Ibid., 38). Thus, the problem of misdiagnosed audiovisual counterpoint may be avoided in the extension of musical concepts to audiovisual analysis of non-image-based media.]

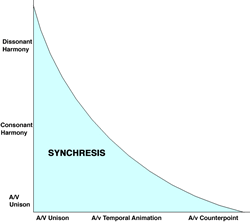

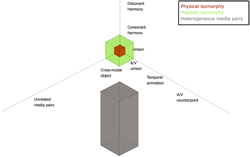

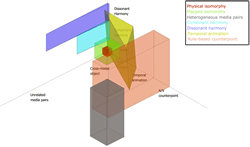

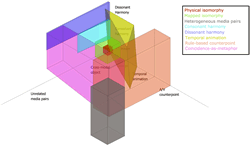

While Chion does not represent his ideas in a graphic form, Figure 1 provides an original illustrated adaptation of his two-dimensional framework. The vertical, harmonic dimension ranges from audiovisual unison (image-sound correspondence perceived as a “realistic,” multimodal manifestation of an object or event, rather than as two separate media), through consonant harmony (image-sound correspondence that reinforces conventional medium expectations and is thus perceived as strongly correlated) and on to dissonant harmony (image-sound relations that are perceived as weakly correlated because they oppose convention). The horizontal dimension ranges from audiovisual unison (image-sound relations that are in synchronous unison, thus drawing attention away from the use of two separate media), through temporal animation (non-unison audiovisual correspondences that are nonetheless related or complementary, with one medium temporally animating the other) and on to audiovisual counterpoint (image-sound relations that are perceived as two independent voices).

The phenomenon of synchresis is shaded in light blue. Note that maintaining a synchretic effect depends on the interplay between harmonic and temporal relations. As audiovisual harmonic dissonance is introduced, audiovisual unison relations must be enacted on the horizontal dimension if the synchretic effect is to be maintained: that is, the morphological congruency of the image-sound combination (for instance, a punch image with the sound accompaniment of a gong hit) supersedes the discord in content appropriateness between image and corresponding sound. In the horizontal dimension, maintaining synchresis under audiovisual counterpoint requires a unison harmonic relation: if the image and sound represent the same “content,” disparity in their temporal correspondence may be overlooked, and the synchretic experience is maintained (Ibid., 38–39).

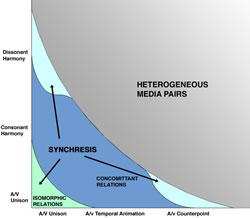

Using Chion’s writing as a starting point, Coulter offers a fully developed framework for the analysis of media pairs in “electroacoustic music with moving images” (Coulter 2010, 26). Coulter describes media pairs in terms of the content of both audio and video media (on a continuum ranging from referential to abstract) and the structural relations between the two media (on a continuum between homogenous and heterogeneous relations). Commenting on the structural relations between image and sound, Coulter suggests that relations between media pairs may be formalized as isomorphic, concomitant or heterogeneous.

In Coulter’s system, isomorphic relationships contain shared, formal “features that act as catalysts in the process of integration” (Ibid., 27–28) and that — despite the use of two distinct perceptual media — “rely on the activation of solitary [mental] schema” (Ibid., 28). For instance, the cinematic image of “punching” in a fight scene coupled with conventional sound design activates a single mental schema (the schema for “punch” or “impact”); isomorphic relations are maintained regardless of the multi-modality of the sensory input. Conversely, concomitant relationships occur “when two (or more) schemas are simultaneously activated [or overlaid]” (Ibid.), leading to audiovisual integration through a “process of highlighting and masking” of features based on their support of homogeneity (Ibid., 27). For instance, the cinematic image of a “punch” coupled with a synchronous gong sound triggers two schemas in terms of its referential content: one schema for “punch” and another for “gong”. However, a synchretic effect is maintained in this particular context due to the morphological similarities and synchronous attacks.

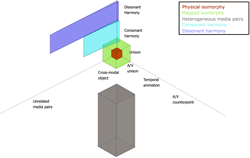

In contrast to isomorphic and concomitant relationships, heterogeneous media pairs are perceived as two different media that just happen to be experienced simultaneously. Each medium triggers its own schema, and highlighting based on similarity is avoided. While synchresis is not experienced (there is no “irresistible weld”), heterogeneous media pairs may still exhibit added value, as sound and image are constantly reflecting new meanings on one another. Coulter’s concepts of isomorphic, concomitant and heterogeneous media pairs can be overlaid on the earlier representation of Chion’s framework (Fig. 2).
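Coulter’s three structural relations can be read as a simple decision rule over two judgements an analyst makes about a media pair: how many mental schemas it activates, and whether shared features are highlighted so that the two media integrate. The Python sketch below is a hypothetical encoding of the description above, not a procedure Coulter proposes; the names `MediaPair`, `classify_media_pair` and its parameters are illustrative assumptions.

```python
from enum import Enum


class MediaPair(Enum):
    """Coulter's (2010) three structural relations between media pairs."""
    ISOMORPHIC = "isomorphic"
    CONCOMITANT = "concomitant"
    HETEROGENEOUS = "heterogeneous"


def classify_media_pair(schemas_activated: int, features_highlighted: bool) -> MediaPair:
    """Hypothetical decision rule reading Coulter's categories as described.

    schemas_activated: how many mental schemas the image-sound pair triggers.
    features_highlighted: whether shared features (synchronous attacks,
        similar morphology) are highlighted so that the two media integrate.
    Both inputs are analyst judgements; the function only records how
    those judgements combine.
    """
    if schemas_activated < 1:
        raise ValueError("a media pair must activate at least one schema")
    if schemas_activated == 1:
        # A single schema ("punch") handles both sensory inputs.
        return MediaPair.ISOMORPHIC
    if features_highlighted:
        # Two schemas ("punch" and "gong"), but shared features weld them.
        return MediaPair.CONCOMITANT
    # Each medium keeps its own schema and no highlighting occurs.
    return MediaPair.HETEROGENEOUS


# The fight-scene examples from the text.
print(classify_media_pair(1, True).value)  # conventional punch sound
print(classify_media_pair(2, True).value)  # synchronous gong hit
```

The rule deliberately leaves the hard part, deciding how many schemas are active, to the perceiver; it only makes explicit that concomitance sits between the other two categories.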

While Chion’s and Coulter’s frameworks for classification of audiovisual relations provide an important precedent, both share several shortcomings. Most notably, they provide several key categories of audiovisual relationships but do not subdivide them further. While this shortcoming is understandable given the potentially infinite number of sound-image combinations, an enquiry into the relatively limited range of sound and light combinations should aim for greater detail. Furthermore, neither existing framework accounts for or even addresses the “strength” of synchretic experience: compositional relationships between media pairs (harmony/dissonance, unison/counterpoint, isomorphy/concomitance/heterogeneity) are not correlated to perceptual experience and its accompanying affect. Without this correlation, it is impossible to provide a notion of hierarchy or hierarchical motion 4[4. Akin to the musical hierarchical motion between a dominant chord and the tonic.] between different types of audiovisual relations. Due to this shortcoming, Chion’s and Coulter’s analysis frameworks remain limited as tools for compositional design of audiovisual works.

Towards an Original Analysis Framework

I propose a framework that delineates in detail the compositional dynamics of sound-and-light (as composite, integrated material) in a manner that: 1) accommodates dynamic changes of audiovisual relations; and 2) correlates the compositional dynamics of audiovisual materials to perceptual results. This framework is envisioned as both analytical tool and compositional aid for the creation of new works using luminosonic objects.

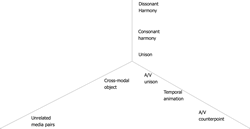

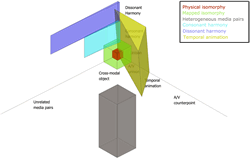

My framework is developed through both analysis of existing audiovisual installations and creative experimentation. Building on Chion’s work in this area, I will maintain the vertical and horizontal axes of audiovisual harmonicity and audiovisual temporal relations that describe essential compositional devices. In order to correlate these compositional devices with perceptual experience, I will add a third axis measuring perceptual bond, representing the strength of the synchretic experience. The axis of perceptual bond will range between the percept of a “cross-modal object… [in which] stimuli in different modalities are ‘bound’ into correlated wholes” (Whitelaw 2008, 259) and the percept of unrelated (heterogeneous) media pairs, in which the two media are independent and distinct save for their simultaneous manifestation.

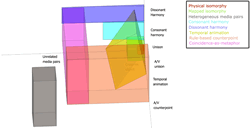

Through this addition, we arrive at a three-dimensional spatial model of audiovisual relations, which may be populated by a number of typologies. Each typology occupies its own “space”, or range of possible variations in location (i.e. variations in harmonic, temporal and perceptual relations). While each typology possesses a distinct location, overlaps between neighbouring typologies may exist. 5[5. In subsequent graphic representations, lines delineating typological boundaries are portrayed as absolute in order to enhance intelligibility. In reality, the boundary lines of any given typology are blurry, modulated by the individual perceiver and the preceding compositional context.]

The three-dimensional framework allows similarity and difference between audiovisual typologies to be discerned through spatial proximity or distance along the three axes. Furthermore, the three-dimensional audiovisual relation-space is envisioned as a virtual “volume” through which a path of successive audiovisual typologies (embodied in a single luminosonic object) may be traced. This approach allows changes between subsequent audiovisual typologies to be used as motivic devices that may be repeated, varied or opposed.
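To make the spatial model concrete, the Python sketch below places typologies as points in a unit cube and measures similarity as Euclidean distance, so that a sequence of typologies becomes a path whose step sizes can be compared, repeated or varied. The [0, 1] normalisation of each axis, the Euclidean metric and the coordinates given to each typology are all assumptions made for illustration; the framework itself treats typologies as regions with blurry boundaries rather than fixed points.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Relation:
    """A point in the three-dimensional audiovisual relation-space.

    Each axis is assumed to be normalised to [0, 1], with 0 at the
    meeting point of the axes (unison, strongest perceptual bond).
    """
    harmonic: float  # 0 = harmonic unison, 1 = dissonant harmony
    temporal: float  # 0 = temporal unison, 1 = audiovisual counterpoint
    bond: float      # 0 = cross-modal object, 1 = heterogeneous media pair

    def distance(self, other: Relation) -> float:
        """Euclidean distance; closer points stand for more similar typologies."""
        return math.dist(
            (self.harmonic, self.temporal, self.bond),
            (other.harmonic, other.temporal, other.bond),
        )


# Hypothetical coordinates chosen for illustration only.
ISOMORPHIC = Relation(0.0, 0.0, 0.0)
CONSONANT = Relation(0.4, 0.0, 0.2)
DISSONANT = Relation(0.9, 0.0, 0.6)
HETEROGENEOUS = Relation(1.0, 1.0, 1.0)


def path_steps(path: list[Relation]) -> list[float]:
    """Size of each move along a path traced by one luminosonic object."""
    return [a.distance(b) for a, b in zip(path, path[1:])]


# A motif that departs from isomorphy along the harmonic axis and returns.
motif = [ISOMORPHIC, CONSONANT, DISSONANT, ISOMORPHIC]
print([round(step, 2) for step in path_steps(motif)])
```

Treating a path as a list of step sizes is one way to express the idea that changes between typologies, rather than the typologies themselves, can serve as motivic material: two paths with similar step profiles could be heard and seen as variations of one another.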

Audiovisual Typologies: Populating the Analysis Framework

I will populate my proposed framework one typology at a time 6[6. Each typology discussed in this section refers to video support examples, which may be viewed in the online web resource http://www.adambasanta.com/thesis/typologies.html], beginning with the two extremes of the spectrum: isomorphic audiovisual relations, which appear as homogenous “cross-modal objects” (Whitelaw 2008, 259), and their diametric opposite, heterogeneous media pairs. Following isomorphic and heterogeneous typologies, I will detail a spectrum of audiovisual relations ranging between the two extremes, tracing a path away from unison relations along the harmonic axis, followed by a corresponding path along the temporal axis.

Homogenous Media Pairs

Isomorphic sound-and-light relations may be placed at the meeting point of the harmonic, temporal and perceptual bond axes. This typology is defined by the formal features that sound and light share: their behaviours are deemed “realistic” in terms of content appropriateness, triggering a single mental schema to process both stimuli, while exhibiting synchronous (unison) morphological features in the temporal realm. The combination of unison relations in both harmonic and temporal axes results in the substantiation of a “cross-modal object” (Whitelaw 2008, 259), the point at which the synchretic effect is strongest and most irresistible. The perceptual and mental effects of isomorphic audiovisual relations are “sticky”: the percept of a cross-modal object is both strong and rewarding, structuring subsequent audiovisual events (Ibid., 268). As a result, subsequent audiovisual relations deviating from isomorphism (for instance, a short time delay between light and sound stimulus, or signal processing of the sound component) may still be perceived as cross-modal synchretic objects.

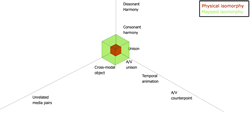

Homogenous media pairs can be divided into two subcategories of audiovisual isomorphism: physical isomorphy and mapped isomorphy.

Physical Isomorphy

In physical isomorphy, shared formal features result from the physical coupling of media. In other words, the symmetry in audiovisual relations is a product of the underlying physical system that simultaneously produces both media. For example, in artificiel’s condemned_bulbes, an array of 64 1000-watt incandescent bulbs is activated through a modulation of the voltage sent to each bulb (Video 1). Due to the size of the bulbs, the application of electrical voltage results in simultaneous light and sound, as the excitation of the carbon filament is amplified by the natural resonance of its glass casing. 7[7. See more about condemned_bulbes (2002) in this issue of eContact!] The combination of bulb illumination with the resonant buzzing of the filament is appropriate in terms of content (sound and light behaviour are consistent with our expectation of an audiovisual manifestation of electrical energy) and corresponds to temporal unison relations (there is no sound without light, no light without sound). Other examples of physical isomorphy can be found in Nam June Paik’s Exposition of Music-Electronic Television (in which sound and light are a product of electromagnetic manipulation of television signals) and Robin Fox’s Laser Show (here the sonic waveform undergoes analogue conversion 8[8. Although this example of physical isomorphy can be framed as a mapping process, it is defined by its physical and static nature. In contrast to the software mapping described below, the “mapping” in physical isomorphic typologies cannot be changed or modified, as it is a product of the physical system producing it.] to a laser-based oscilloscope).

Mapped Isomorphy

In contrast to the physical coupling described above, mapped isomorphy operates through cross-media parametric mapping. For instance, an excerpt from my own work Room Dynamics (2012) highlights a mapped isomorphic relation that takes place through amplitude following of sound level and its mapping onto light intensity (Video 2). Note that in this example, the ca. two-metre distance between the light bulb and the speaker hanging above it does not diminish the cross-modal bond between light object and the accompanying sound: spectators have frequently reported that they perceived the sound as coming from the light bulb, illustrating Chion’s observations regarding spatial magnetization of sound by vision (Chion 1994, 44 and 69–70). Other examples of mapped isomorphy can be found in Chris Ziegler’s Forest II (Video 6) and Ziegler and Paul Modler’s Neoson.
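The amplitude following described for Room Dynamics can be sketched as an envelope follower whose output drives light intensity. The Python below is a generic illustration, not the patch used in the work, and rests on several assumptions: audio arrives as floats in [-1, 1], the light accepts an 8-bit level (as a DMX-style dimmer channel would), and the block size and smoothing coefficients are arbitrary choices. The names `envelope_follower` and `to_light_level` are hypothetical.

```python
import math


def envelope_follower(samples, block_size=512, attack=0.5, release=0.05):
    """Block-wise RMS level smoothed by a one-pole attack/release filter.

    attack and release are smoothing coefficients in (0, 1]: larger values
    track the signal faster. Returns one envelope value per block.
    """
    env = 0.0
    envelope = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        rms = math.sqrt(sum(s * s for s in block) / len(block))
        # Rise quickly on attacks, fall slowly on decays.
        coeff = attack if rms > env else release
        env += coeff * (rms - env)
        envelope.append(env)
    return envelope


def to_light_level(env_value, max_level=255):
    """Map an envelope value (0..1 full scale) to an 8-bit light intensity."""
    clamped = min(max(env_value, 0.0), 1.0)
    return round(clamped * max_level)


# Half a second of a 440 Hz tone followed by half a second of silence.
sample_rate = 44100
tone = [0.8 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate // 2)]
signal = tone + [0.0] * (sample_rate // 2)
levels = [to_light_level(e) for e in envelope_follower(signal)]
print(max(levels), levels[-1])
```

The fast attack keeps light onsets close to sound onsets, which is one way to preserve the temporal unison that isomorphy depends on, while the slower release lets the light fade with the sound rather than flicker.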

Physical and mapped isomorphy may be distinguished along technical lines, with the fixed physical nature of the former contrasting with the inherent flexibility of software mapping. This technical difference also manifests perceptually, as the ability to modify parametric relations between light and sound results in cross-modal bonds of varying strength. For instance, a mapped isomorphic relation between the amplitude of a light “tremolo” 9[9. A rhythmic undulation of light intensity.] and the spectral brightness of a simultaneous audio signal will manifest an isomorphy that may not be strongly perceived as a cross-modal object. 10[10. This is due to the less salient nature of the mapping process, as well as the potential departure from rhythmic unison on the temporal axis.]
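The tremolo example can be sketched as follows, taking the spectral centroid as a stand-in for “spectral brightness” (a common choice, but an assumption here) and letting it set the depth of a sinusoidal light tremolo. A naive DFT keeps the example dependency-free; a working installation would use an FFT library. The function names, the normalisation by the Nyquist frequency and the sinusoidal tremolo shape are illustrative assumptions.

```python
import math


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency of one frame, via a naive DFT."""
    n = len(frame)
    weighted = 0.0
    total = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        magnitude = math.hypot(re, im)
        weighted += (k * sample_rate / n) * magnitude
        total += magnitude
    return weighted / total if total > 0 else 0.0


def depth_from_brightness(centroid_hz, sample_rate):
    """Normalise the centroid by the Nyquist frequency to get a depth in [0, 1]."""
    return min(max(centroid_hz / (sample_rate / 2), 0.0), 1.0)


def tremolo_intensity(t, rate_hz, depth):
    """Light intensity in [0, 1] at time t under a sinusoidal tremolo.

    depth 0 gives a steady light; depth 1 swings fully between on and off.
    """
    return 1.0 - depth * 0.5 * (1.0 + math.sin(2 * math.pi * rate_hz * t))


# A dull (500 Hz) and a bright (2 kHz) tone, both aligned to DFT bins.
sr, n = 8000, 256
dull = [math.sin(2 * math.pi * 500 * i / sr) for i in range(n)]
bright = [math.sin(2 * math.pi * 2000 * i / sr) for i in range(n)]
for frame in (dull, bright):
    depth = depth_from_brightness(spectral_centroid(frame, sr), sr)
    print(round(depth, 3))
```

Because the centroid tracks timbre rather than onsets, the light’s undulation need not line up with sound attacks; this offers one way to see why such a mapping may yield a weaker cross-modal bond than direct amplitude following.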

Discussion of Isomorphic Relations in Popular and Experimental Contexts

Both physical and mapped isomorphic relations are common typologies in experimental and popular audiovisual contexts. They are especially prevalent in works that attempt to investigate the cross-modal phenomenon of synæsthesia. The popularity of isomorphic typologies is at least in part due to the evolutionary reward function that follows the percept of a strongly bonded cross-modal object (Ramachandran and Hirstein 1999, 21–23). In an evolutionary sense, the process of audiovisual binding, beginning with “discovering correlation and of ‘binding’ correlated features to create unitary objects or events must be reinforcing for the organism — in order to provide incentive for discovering such correlations” (Ibid.). This evolutionary reward function extends into discussions of æsthetic pleasure resulting from the perception of cross-modal objects in artistic contexts (Whitelaw 2008, 271). The mental reward offered by isomorphic typologies may elevate their hierarchical status in a manner similar to the tonic chord of a musical work: the return to an isomorphic typology can provide a sense of arrival, conclusion and æsthetic pleasure.

Furthermore, isomorphic audiovisual relations and the percept of cross-modal objects (especially in the case of luminosonic objects identified as cross-modal objects, as seen in condemned_bulbes and Room Dynamics) cast new light on the notion of the sound object in electroacoustic music. 11[11. The connection to electroacoustic music goes beyond my own background as a composer and the discussion of “objects” found in both Schaeffer’s and Whitelaw’s writings, as several of the works analyzed in this text have been created by artists with a background in electroacoustic composition and sound art. Notably, artificiel, Hans Peter Kuhn, Iannis Xenakis and Bernhard Gál began their careers or pursued parallel paths in electroacoustic and/or sound art contexts before embarking on the creation of audiovisual works.] With regard to the aforementioned works by artificiel and Basanta, we can imagine a scenario in which a blindfolded spectator would strip each work of its visual content, leaving only the sounds to occupy the installation space. In such a presentation, sounds would be perceived as disembodied from their unseen source, a basic theoretical concept of electroacoustic music scholarship (Schaeffer 1966; Smalley 1996; Truax 2001; to name a few). However, by removing the blindfold in the installation space and rebinding sound and light via their isomorphic relations (that is, recasting them as cross-modal luminosonic objects), we have re-embodied the formerly disembodied sound within a physical object. This re-embodiment is not without tension, as the perceptual certainty of cross-modality (the sound is definitely coming from the light object) conflicts with observational knowledge of everyday life (light objects do not usually make this sound).
Although far from removing source ambiguity, the notion of re-embodied sound may be significant in terms of accessibility of sound and audiovisual work, as it avoids the disembodiment of sound and the potential barriers to accessibility associated with such æsthetic practice (McCartney 1999, 252).

Heterogeneous Media Pairs

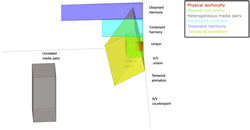

The polar opposite of the homogenous, isomorphic typologies can be defined as heterogeneous media pairs. In this typology, audiovisual relations are perceived to be different in both “harmonic” content and temporal development, resulting in a lack of cross-modal binding. This is not to say that heterogeneous media pairs do not share mutual influence, but rather, that this mutual influence is a type of “added value” rather than a synchretic experience (Chion 1994, 5). Note the positioning of the heterogeneous typology in the polar opposite corner from isomorphic typologies in the audiovisual relation-space (Fig. 5).

In the context of works using luminosonic objects, the audiovisual œuvres of German sound artist Hans Peter Kuhn and architect-mathematician-composer Iannis Xenakis showcase two approaches to heterogeneous media pairings. Kuhn’s audiovisual work continually revisits the “[motif] of architectural stillness alongside musical movements” (Kuhn 2000, 27), as can be seen in Vertical Lightfield (2009), a light and sound installation consisting of 26 fluorescent tubes, loudspeaker, servo drives, computer and aluminum panels (Video 3). In this work, the slow undulation of undimmed neon bulbs is coupled with brief auditory disruptions, conceptualized as a multi-tempo composition: the slow tempo of the neon bulb undulations is contrasted with the fast tempo of individual sounds and the medium tempo of the sound occurrence rate (Kuhn 2012). By adding to these the metaphorical tempo of the perceiver (their mood, purpose, daily occurrences prior to the experience of the work), Kuhn aims to create a multi-modal “meta rhythm” (Ibid.): an adaptation of Chion’s concept of added value. Kuhn rejects the immediate reward of isomorphic audiovisual relations (the notion of “telling the same story twice” in two simultaneous media) and instead aims to construct an additive meaning by “telling two separate stories… [each on a] different plane… in parallel…” (Ibid.).

An interesting correlate to Kuhn’s compositional dynamics and his conceptual justification can be found in the audiovisual works of Iannis Xenakis, most notably in Polytope Cluny. The surviving partial documentation of this work showcases the heterogeneous nature of media relations described in Xenakis’ original dramaturgical sketches, as we perceive a strong contrast between “the musical aspect… continuous and acoustic in origin… and the light show… discontinuous and electronic, with light flashes in movement” (Xenakis 2008, 205). Much like Kuhn, Xenakis aims to create light and sound behaviours that bear no strict relation to one another, but rather, showcase “an encounter between two different musics, one to be seen and the other to be heard” (Ibid., 206). The conception of two “different musics” is extended to the realms of perceptual and compositional functions: “light occupies time, for its effect depends on rhythm and duration while music shapes space” (Ibid., 231).

It is worth noting that both homogenous and heterogeneous typologies are conceptually charged, in that their use is often justified on conceptual grounds. 12[12. In the case of isomorphic relations, as an attempt to approach synæsthesia (Whitelaw 2008, 259) or enhance accessibility; in the case of heterogeneous relations, as a means to combine two media in order to create a novel composite percept.] As a result of these conceptual justifications, works utilizing either typology tend to use it exclusively, forgoing the possibility of modulating between the two. Since one of my stated goals in the creation of a spatial framework for audiovisual relations is to create a navigable space through which a developing sequence of relations can be articulated, I will now populate the middle grounds between isomorphic and heterogeneous relations, beginning with an exploration of the harmonic axis.

The Harmonic Axis

The harmonic dimension of audiovisual relations, following Chion’s definition, may be considered a measure of content appropriateness between sound and visual (or in our case, light) media. Along this axis, sound and light events remain in temporal unison, while the “appropriateness” of light to sound relations may vary. However, the notion of content appropriateness is, as mentioned earlier, entirely contextual: it concerns the appropriateness of sound and light events within a given context. As a result, identical audiovisual materials may yield two different percepts of audiovisual harmonicity in two different contexts.

Consonant Harmony

As we move away from audiovisual harmonic unison (the percept that “this is the actual sound made by the light object,” as in artificiel’s condemned_bulbes or Basanta’s Room Dynamics 13[13. Note that the statement is factually true only in the case of artificiel’s condemned_bulbes. However, the technical reality is secondary to the perception of “unison” experienced by spectators in Room Dynamics.]), we reach audiovisual consonant harmony: an appropriate or conventional reinforcement of light events using sound. In contrast to audiovisual harmonic unison, we are aware that the combination of light and sound is contrived; however, we allow ourselves to indulge in the illusion of unity, as the temporal unison of light and sound makes the audiovisual events ecologically plausible.

United Visual Artists’ Array (Video 4) provides an example of audiovisual consonant harmony. In the video excerpt, columns outfitted with LED strips appear to produce various audiovisual behaviours. The red light column, conceptualized by the artists as an “agency” moving through the column space (UVA n.d.), manifests light activity alongside a distinct sounding accompaniment in perfect temporal unison. While there is no particular reason for this specific sound to be coupled with the red light column, the combination of light and sound events seems appropriate given the overall digital æsthetic vocabulary. In other words, the relationship between light and sound is plausible given the ecology of the work: it is the sound the light column would make if it did make sound. Indeed, through a combination of content appropriateness (consonant harmony) and temporal unison, we perceive a strong bond between sound and light, resulting in the percept of a cross-modal object.

Dissonant Harmony

Moving further away from audiovisual unison along the harmonic axis, we may arrive at dissonant audiovisual harmonic relations. Following Chion, I define audiovisual dissonance in luminosonic objects as manifesting “a discord between [the] figural natures” of light and sound components (Chion 1994, 37–38). Consider an excerpt from Room Dynamics (Video 5) in which mapped isomorphy gives way to consonant harmony (via the increased application of resonators) and finally to audiovisual dissonant harmony (a flashing light simultaneous with the sound of a piano note). The dissonant audiovisual event lacks the ecological plausibility found in UVA’s Array, despite onset synchrony and morphological symmetry between light and sound intensity. The lack of appropriateness between sound and light events thus challenges the perception of a bonded cross-modal object, even though the concomitance of the two media would otherwise encourage it. Using Coulter’s terminology, the two schemas triggered by this event (a light bulb and a piano) are so different in content that highlighting may only occur on the perceptual level, not on the conceptual level.

An increase in audiovisual dissonance may challenge the percept of a cross-modal object despite morphological and temporal isomorphisms, and may eventually give way to the perception of unrelated media pairs (Fig. 6). However, when the percept of a cross-modal object is maintained, audiovisual dissonance presents an interesting tension between the re-embodied nature of the sound and the ecological dissonance stemming from the lack of content appropriateness between sound and light manifestations. Spectators may have a strong sense of the sound’s re-embodiment within a physical object (as discussed in terms of isomorphic audiovisual relations), and are thus certain about the sound’s physical source, while simultaneously remaining perplexed by the unexpected nature of the sound produced by the luminosonic object. This combination of certainty about the source and uncertainty about the means of production may be considered an audiovisual variation of Smalley’s second-order surrogacy, in which “vestiges of… gestural activity” can be deduced despite the absence of “a realistic explanation” (Smalley 1996, 85).

The Temporal Axis

While the vertical axis provides a measure of content appropriateness between simultaneous audiovisual media, the horizontal axis allows a comparison of temporal relationships between light and sound behaviours. Movement away from the synchrony of audiovisual unison may entail variation in the onset of either medium, or a degree of independence in the morphological development of light and sound. At times, the behaviour of two separate media voices may mimic the musical device of heterophony (two voices performing complementary variations on a similar idea, occasionally coalescing), rule-based polyphony (for instance, the rhythmic alternation found in the “hocket”), or counterpoint (literally, “point against point”). Movement away from audiovisual unison along the temporal axis may, of course, be combined with various forms of audiovisual harmony. Two main categories of temporal variation, temporal animation and rule-based counterpoint, are offered below.

Temporal Animation

Following Chion’s concept, sound or light can temporally animate its counterpart medium, texture the overall qualitative temporal experience, or vectorize its counterpart “towards a future, a goal… expectation” (Chion 1994, 13–14). Temporal animation usually involves a simultaneous onset of light and sound behaviours, followed by a morphological departure of either medium. This departure is subtle enough to avoid the percept of heterogeneous media pairs.

An example of temporal animation can be found in Chris Ziegler’s Forest II (Video 6). In this short excerpt, the visual light texture moving through the neon bulb assemblage temporally animates an ambient, low-frequency sound. Although there is an obvious morphological discrepancy between the relatively continuous sound and the spatial texture of the light-emitting objects, their parallel morphological development is complementary, as it provides “added value”: we re-evaluate the sound based on the lights, and vice versa. While falling short of the percept of a cross-modal object, light and sound seem to share a quasi-causal relationship. This causality may be explained through the metaphor of “energy exchange”: the light texture may be perceived as a disruption caused by a dominant sonic element, or alternatively, the light texture may be perceived as causing amplitude disruptions in the sonic element. In either case, the two media manifest a complementary relation and are perceived as composite (though perhaps unstable) materials. Therefore, they cannot be classified as heterogeneous media pairs.

A pedagogical excerpt designed by the author illustrates how temporal animation may be used to coax the perception of synchresis and intersensory bias (Video 7). In this example, a sine tone (emerging from a speaker placed above the visible light bulb) fades in, remains static and fades out, while the intensity of the light bulb undulates at various speeds. When one listens to this example with eyes closed, the sine tone is clearly perceived as a static element. However, when I concentrate on the visual image of the light bulb, my perception of the sine tone changes 14[14. This experience has been reported by several colleagues who have been exposed to this example, although this exposure was informal and far from laboratory conditions.]: with each undulation of light intensity, I hear a slight amplitude modulation of the sine wave, or the addition of upper partials to the signal. That is, despite the morphological divergence of sound and light behaviours, the luminosonic object is perceived as a cross-modal object. In fact, the synchretic effect described above goes beyond the mere bonding of two separate sensory stimuli into a single correlated whole: rather, one signal changes the perception of its counterpart in a manner similar to the McGurk effect. 15[15. The perception of a third speech sound when exposed to conflicting auditory and visual speech stimuli (for example, hearing /da/ when an auditory /ba/ is paired with a visual /ga/).]
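The control envelopes behind an excerpt like this can be sketched as follows. This is a minimal sketch under stated assumptions, not the patch used for Video 7: the linear fades, the raised-cosine undulation, the 100 Hz control rate and every parameter value are hypothetical. What it makes explicit is that the sound envelope contains no modulation at all during its hold segment; any modulation heard there is contributed by the undulating light.

```python
import math

CONTROL_RATE = 100  # hypothetical control rate: envelope values per second


def sine_tone_envelope(duration_s=10.0, fade_s=1.0, rate=CONTROL_RATE):
    """Amplitude (0..1) of the sine tone: linear fade in, static hold, linear fade out."""
    n = int(duration_s * rate)
    n_fade = int(fade_s * rate)  # assumes 0 < n_fade <= n / 2
    env = []
    for i in range(n):
        if i < n_fade:
            env.append(i / n_fade)  # fade in
        elif i >= n - n_fade:
            env.append((n - 1 - i) / n_fade)  # fade out
        else:
            env.append(1.0)  # static hold: no amplitude modulation in the signal
    return env


def undulating_light(duration_s=10.0, rates_hz=(0.5, 1.0, 2.0), depth=0.6, rate=CONTROL_RATE):
    """Bulb intensity (0..1) undulating at a speed that steps through rates_hz in equal segments."""
    n = int(duration_s * rate)
    phase = 0.0
    out = []
    for i in range(n):
        segment = min(i * len(rates_hz) // n, len(rates_hz) - 1)
        # accumulate phase so the curve stays continuous when the speed changes
        phase += 2 * math.pi * rates_hz[segment] / rate
        # raised cosine between (1 - depth) and 1
        out.append(1.0 - depth * 0.5 * (1.0 - math.cos(phase)))
    return out


tone = sine_tone_envelope()
light = undulating_light()
hold = tone[100:-100]  # the static segment between the 1 s fades
print(min(hold), max(hold))  # 1.0 1.0
```

Accumulating phase, rather than multiplying a changing rate by elapsed time, avoids jumps in light intensity at the moments the undulation speed changes.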

The influence exerted by visual stimulation on the perception of the static sound wave may be explained through the concept of cross-modal integration, whereby one sensory modality exerts bias on another. The current theory explaining intersensory bias postulates that cross-modal interactions depend “on the structure of the stimuli”: a modality carrying a “more discontinuous (and hence more salient)” signal will present dominant bias (Shimojo and Shams 2001, 508). The pedagogical example is consistent with this theory, as the more discontinuous signal (light intensity) exerts bias over the less discontinuous signal (sound). Although simply reversing the compositional roles of light and sound in this example does not result in strong intersensory bias, the general theory provided by Shimojo and Shams may be useful for further artistic investigation of the temporal animation of luminosonic objects.
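As a rough illustration of how this structural claim might be operationalized when sketching a piece, the code below compares two control envelopes using total variation (the summed size of sample-to-sample changes) as a crude stand-in for “discontinuity”. This proxy is an assumption introduced here, not a measure proposed by Shimojo and Shams; and, as the reversal reported above shows, its prediction should be treated as a compositional hint rather than a guaranteed perceptual outcome.

```python
import math


def total_variation(env):
    """Sum of absolute sample-to-sample changes: a crude, assumed proxy for 'discontinuity'."""
    return sum(abs(b - a) for a, b in zip(env, env[1:]))


def predicted_dominant(sound_env, light_env):
    """Name the modality with the more changeable envelope under this proxy, or None on a tie."""
    tv_sound = total_variation(sound_env)
    tv_light = total_variation(light_env)
    if tv_light > tv_sound:
        return "light"
    if tv_sound > tv_light:
        return "sound"
    return None


RATE = 100  # hypothetical control rate: values per second
held_tone = [1.0] * (5 * RATE)  # static sine-tone amplitude
pulsing_bulb = [0.7 + 0.3 * math.cos(2 * math.pi * 1.0 * i / RATE) for i in range(5 * RATE)]

print(predicted_dominant(held_tone, pulsing_bulb))  # light
```

Swapping the two envelopes makes the proxy predict sound dominance, which is exactly the case the author reports as not producing strong bias; the heuristic captures only the structural asymmetry, not its perceptual strength.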

Temporal animation is placed along the temporal axis of the three-dimensional analysis framework. Depending on the harmonic relation between light and sound, as well as the proximity to audiovisual counterpoint, various degrees of cross-modal bond are possible. This has been portrayed visually through angling of the temporal animation “state-space” away from the percept of a cross-modal object along the perceptual bond axis. The temporal animation typology can be viewed either along the temporal axis (Fig. 7) or in relation to the harmonic and perceptual bond axes (Fig. 8).

Rule-Based Counterpoint

Moving further away from temporal unison along the horizontal axis, we arrive at a point at which sound and light operate as two independent voices: that is, audiovisual counterpoint. However, audiovisual counterpoint need not result in the percept of heterogeneous media pairs (as discussed in the works of Kuhn and Xenakis). Rather, some forms of simple rule-based counterpoint (Fig. 9) may retain a strong cross-modal bond despite the independence of the two media.

The hocket is a simple counterpoint technique dating back to medieval music, “produced by dividing a melody between two parts, notes in one part coinciding with rests in the other” (OUP n.d.). Translated into sound and light behaviour, a mapped isomorphic relation between sound and light intensity gives way to an audiovisual hocket in which light intensity alternates with sound intensity (Video 8). 16[16. It is noteworthy that as the speed of the hocket increases past ca. 3 Hz, it becomes difficult to distinguish isomorphic mapping from an audiovisual hocket, despite 3 Hz being well below both visual and auditory fusion rates. This phenomenon is evident in Videos 9 and 10.] Despite the independence of each voice, the relation between them seems to bind the divergent morphologies into a single cross-modal luminosonic object. Again, this bond may be explained using the ecological metaphor of “energy transfer,” whereby electrical energy is transferred between light and sound. Using this metaphor, audiovisual behaviour can be rationalized through the logic of finite electrical energy being routed to two competing circuits: as electrical energy is routed to create sound, it saps energy from the light intensity circuit, and vice versa. The “energy transfer” metaphor is likewise at play when using smooth sound and light intensity curves (Video 9). The concept of an audiovisual hocket can be further illustrated in an artistic context through an excerpt from Room Dynamics (Video 10), in which an audiovisual hocket alternates with isomorphic mapping throughout a spatially dispersed ensemble of luminosonic objects.
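The three behaviours discussed here (mapped isomorphy, a stepped audiovisual hocket, and the smooth “energy transfer” variant) can be sketched as intensity envelopes. This is a hedged illustration assuming normalized intensities between 0 and 1 and a hypothetical 100 Hz control rate; it is not the control logic of Room Dynamics. In the energy-transfer function, light is simply whatever remains of a fixed budget once sound has taken its share, so the two intensities always sum to that budget.

```python
import math

CONTROL_RATE = 100  # hypothetical control rate: envelope values per second


def isomorphic(sound_env):
    """Mapped isomorphy: light intensity follows sound intensity value for value."""
    return list(sound_env)


def stepped_hocket(alternation_hz, duration_s, rate=CONTROL_RATE):
    """Audiovisual hocket: (sound, light) pairs in which one voice rests while the other sounds.

    alternation_hz counts full cycles per second; sound takes the first half of each cycle.
    """
    pairs = []
    for i in range(int(duration_s * rate)):
        sound_on = (i * alternation_hz / rate) % 1.0 < 0.5
        pairs.append((1.0, 0.0) if sound_on else (0.0, 1.0))
    return pairs


def energy_transfer(alternation_hz, duration_s, budget=1.0, rate=CONTROL_RATE):
    """Smooth variant: a finite 'energy' budget is routed between the sound and light circuits."""
    pairs = []
    for i in range(int(duration_s * rate)):
        t = i / rate
        sound = budget * 0.5 * (1.0 - math.cos(2 * math.pi * alternation_hz * t))
        pairs.append((sound, budget - sound))  # whatever sound takes, light loses
    return pairs


steps = stepped_hocket(alternation_hz=2.0, duration_s=1.0)
print(steps[0], steps[25])  # (1.0, 0.0) (0.0, 1.0)
```

Raising `alternation_hz` (counted here, by assumption, in full sound-and-light cycles per second) is one way to explore the ca. 3 Hz threshold described in note 16, at which the hocket becomes hard to tell apart from isomorphic mapping.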

It follows that the placement of the rule-based counterpoint typology in the spatial framework of audiovisual relations would fall in a fairly limited range along the temporal axis, as it must always correspond to audiovisual counterpoint. However, depending on the complexity of the rule, as well as the harmonic relation between light and sound, various degrees of cross-modal bond are possible.

Coincidence-as-Metaphor

The final typology discussed in this text is termed “coincidence-as-metaphor”, examples of which may be found in Bernhard Gál’s site-specific intermedia installations Klangbojen and RGB, Ziegler’s Forest II (Video 6), and Ziegler and Modler’s Neoson. In this typology, light and sound behaviour coincide in time but do not exhibit the morphological congruency found in physical and mapped isomorphism or in the various forms of audiovisual harmony. Rather, this typology often manifests through a combination of dynamic sound and static light, such that the light element operates as an indication of presence (Fig. 10).

For instance, in Gál’s Klangbojen (2003/07), eight “sound buoys” (a literal translation of the title) are positioned along a river (Video 11). Each buoy contains an LED and a loudspeaker. As the multi-channel sound composition plays throughout the buoys, the respective lights do not follow the morphological variations found in the sounds; instead, light indicates the presence of sound in both the metaphorical and spatial senses of the word. A similar technique is utilized in RGB (2001), an intermedia installation for coloured light, sound and three quotidian objects: a toaster, an aquarium and a ventilator. Each object is lit with a particular colour and accompanied by a sound composition. In this work, the coincidence of sound and coloured light goes beyond an indication of presence, as couplings of objects and saturated audiovisual ambiences are negotiated in the realm of metaphorical meaning.
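The difference between a presence-indicating light and mapped isomorphy can be sketched in a few lines. Assuming normalized amplitude envelopes and a hypothetical presence threshold (neither taken from Gál’s works), the light simply switches on whenever its buoy’s sound is present, so two sounds with very different morphologies yield the same light behaviour.

```python
def presence_light(sound_env, threshold=0.01, on_level=1.0):
    """Coincidence-as-metaphor: a static light that marks where sound is present.

    The light ignores the sound's morphology; threshold and on_level are hypothetical values.
    """
    return [on_level if a > threshold else 0.0 for a in sound_env]


def mapped_light(sound_env):
    """Mapped isomorphy, for contrast: light intensity follows sound intensity."""
    return list(sound_env)


swell = [0.0, 0.2, 0.6, 0.9, 0.4, 0.0]
pulse = [0.0, 0.9, 0.1, 0.9, 0.1, 0.0]

print(presence_light(swell) == presence_light(pulse))  # True
print(mapped_light(swell) == mapped_light(pulse))  # False
```

Only the onsets and offsets of the sound reach the light, which is why the coupling reads as simultaneity of manifestation rather than as a single cross-modal object.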

I have positioned this final typology in the spatial framework of audiovisual relations in a manner allowing a range of harmonic and temporal variations between sound and light media. Note, however, that full temporal unison and morphological congruency are excluded, as the coincidence-as-metaphor typology shares only simultaneity of onset. Furthermore, note that the positioning of the coincidence-as-metaphor typology along the perceptual bond axis does not reach the percept of a cross-modal object: again, this percept would require stronger morphological congruencies than simultaneity of onset. While the coincidence-as-metaphor typology exhibits added value (the reframing of one medium by the presence of another), this reframing occurs with the spectator’s explicit understanding of the two media as separate in both form and function, drawn together solely through simultaneity of manifestation and the metaphorical suggestion it entails.

General Comments and Conclusion

Having populated a three-dimensional analysis framework with eight audiovisual typologies, we arrive at a functional state-space of audiovisual relations (Fig. 10). While additional typologies may be added through analysis and creative practice, the current framework offers significantly more detail than Chion’s and Coulter’s frameworks. Each identified typology offers various potential manifestations, while the typologies remain related to one another in terms of compositional dynamics (harmonic and temporal axes) and perceptual meaning (axis of perceptual bond).

Throughout the identification and placement of typologies within the three-dimensional framework, I have maintained a focus on the relation between compositional parameters and perceptual attributes. This focus allows an examination of the interaction between the two, especially in terms of the various strengths and “shades” of synchretic experience.

The framework proposed here may be used for the analysis of audiovisual relations in works using luminosonic objects. Furthermore, it may serve as a basis for the analysis of audiovisual relations in other forms of audiovisual production. While analysis of screen-based media using the proposed framework presents various complications 17[17. For instance, see Chion’s reservations regarding the use of the term “counterpoint” in audiovisual screen-based media (Chion 1994, 35–40). Furthermore, the notion of audiovisual harmony increases in complexity when analyzing screen-based works that depart from abstract imagery.], it may be useful in the analysis of various object-based audiovisual practices in media arts. 18[18. In particular, audiovisual practices related to robotics or the activation of mechanical objects.]

More importantly, I feel that my proposed framework may be useful in the development of new compositional practices in audiovisual media, luminosonic or otherwise. Consistent with Bhagwati’s AGNI methodology 19[19. A proposed research-creation methodology, AGNI is an acronym for the stages of Analysis, Grammar, Notation and Implementation.], the analysis undertaken in the development of the framework and the identification of various audiovisual typologies may be utilized as a “grammar” or vocabulary for the development of new works (Bhagwati, forthcoming): that is, the very act of analysis provides awareness of possibilities for compositional practice. Through the correlation of compositional parameters (harmonic and temporal relations) with perceptual results, these typologies can be utilized with an awareness of the perceptual meanings the spectator may enact, that is, the ways in which luminosonic objects substantiate themselves as audiovisual objects.

Finally, my proposed framework provides additional tools for creative practice using luminosonic objects or other audiovisual media. By virtue of the spatial approach undertaken, hierarchical relations between audiovisual typologies may be addressed: for instance, a movement from unrelated media pairs, via audiovisual counterpoint and temporal animation, to mapped isomorphy may be likened to a musical modulation towards the tonic (a return to the rewarding percept of a cross-modal object). The spatial framework may also allow the tracing of a “path” or succession of audiovisual typologies: for instance, from mapped isomorphy through consonant harmony, finally arriving at dissonant harmony. This path, envisioned as a “phrase” of successive perceptual audiovisual relations, may be used as a motivic unit: repeated, varied and opposed. That is, we arrive at a notion of audiovisual composition in which the succession of various modes of audiovisual substantiation can be conceived of as a formal, motivic and developmental device.

Bibliography

Bhagwati, Sandeep. Analysis, Grammar, Notation, Implementation: The AGNI methodology. [Forthcoming].

Chion, Michel. Audio-Vision: Sound on Screen. Trans. Claudia Gorbman. New York NY: Columbia University Press, 1994.

Coulter, John. “Electroacoustic Music with Moving Images: the art of media pairing.” Organised Sound 15/1 (April 2010) “Sonic Imagery,” pp. 26–34.

Gál, Bernhard. “RGB — An Intermedia Installation by Bernhard Gal.” Bernhard Gál’s website. http://www.bernhardgal.com/rgb.html [Accessed 1 August 2011]

Godøy, Rolf Inge and Marc Leman (Eds.). Musical Gestures: Sound, movement and meaning. New York: Routledge, 2010.

Kuhn, Hans Peter. Light and Sound. Heidelberg: Kehrer Verlag, 2000.

Kuhn, Hans Peter. Email correspondence. 16 May 2012.

McCartney, Andra. “Sounding Places: Situated conversations through the soundscape compositions of Hildegard Westerkamp.” Unpublished PhD Thesis. Toronto: York University, 1999.

Oxford University Press (OUP). “Hocket.” Oxford English Dictionary Online. [Accessed 1 August 2011]

Ramachandran, Vilayanur Subramanian and William Hirstein. “The Science of Art: A Neurological theory of æsthetic experience.” Journal of Consciousness Studies 6/6–7 (1999).

Ramachandran, Vilayanur Subramanian and Ed M. Hubbard. “Synesthesia: A Window into perception, thought and language.” Journal of Consciousness Studies 8/12 (December 2001), pp. 3–34.

Schaeffer, Pierre. Traité des objets musicaux. Paris: Éditions du Seuil, 1966.

Schutz, Michael and Scott Lipscomb. “Hearing Gestures, Seeing Music: Vision influences perceived tone duration.” Perception 36/6 (June 2007), pp. 888–897.

Shimojo, Shinsuke and Ladan Shams. “Sensory Modalities Are Not Separate Modalities: Plasticity and interactions.” Current Opinion in Neurobiology 11/4 (August 2001), pp. 505–509.

Smalley, Dennis. “The Listening Imagination: Listening in the electroacoustic era.” Contemporary Music Review 13/2 (1996) “Computer Music in Context,” pp. 77–107.

Truax, Barry D. Acoustic Communication. 2nd Edition. Westport CT: Ablex Publishing, 2001.

UVA. “Array | United Visual Artists.” United Visual Artists. http://uva.co.uk/works/array [Accessed 5 January 2012]

van Wassenhove, Virginie, Dean V. Buonomano, Shinsuke Shimojo and Ladan Shams. “Distortions of Subjective Time Perception Within and Across Senses.” PLoS ONE 3/1 (2008), e1437. https://doi.org/10.1371/journal.pone.0001437

Varela, Francisco J. Ethical Know-How: Action, wisdom and cognition. Stanford CA: Stanford University Press, 1999.

Vines, Bradley W., Carol L. Krumhansl, Marcelo M. Wanderley and Daniel J. Levitin. “Cross-Modal Interactions in the Perception of Musical Performance.” Cognition 101/1 (August 2006), pp. 80–113.

Whitelaw, Mitchell. “Synesthesia and Cross-Modality in Contemporary Audiovisuals.” The Senses and Society 3/3 (April 2008), pp. 259–276.

Xenakis, Iannis. Music and Architecture: Architectural projects, texts and realizations. Iannis Xenakis Series, no. 1. Edited by Sharon Kanach. Hillsdale NY: Pendragon Press, 2008.

Art Works Cited

Artificiel. 6281 (2004).

_____. condemned_bulbes (2002).

Basanta, Adam. Room Dynamics (2012).

Fox, Robin. Laser Show (2005–present).

Gál, Bernhard. Klangbojen (2003/2007).

_____. RGB (2001).

Gál, Bernhard and Yumi Kori. Defragmentation/Blue (1999).

_____. Defragmentation/Red (2000).

Kuhn, Hans Peter. A Vertical Lightfield (2009).

Paik, Nam June. Exposition of Music-Electronic Television (1963).

UVA (United Visual Artists). Array (2008).

Xenakis, Iannis. Polytope Cluny (1972).

Ziegler, Chris. Forest II (2007–09).

Ziegler, Chris and Paul Modler. Neoson (2006).
