Hadronized Spectra (The LHC Sonifications)

Sonification of proton collisions

We as human beings are on the verge of a major paradigm shift in the way we perceive our environment and our lives. Science, in the form of astrophysics as well as quantum physics, is beginning to show us that the world we experience in a linear manner is much broader than we think. From such notions as quantum entanglement, parallel universes and photons that are everywhere at the same time until they are observed (at which point they appear static), we are catching our first glimpses of a world that is non-linear. To be clear, this means we are talking about a multiverse, a multi-dimensional universe in which time is not sequential and space is not contiguous. From this perspective, the earth is not flat… nor is it an orb, but rather a multi-dimensional, constantly changing and undulating object… perhaps not unlike the Calabi-Yau space (Fig. 1).

These discoveries, which are obviously not yet fully comprehended, are being researched in many settings worldwide, one of the most notable being the Large Hadron Collider (LHC) at CERN. Considering the life-altering implications of this work, it is not surprising that it is extremely inspiring to our creative minds.

This paper briefly describes the processes involved in my recently completed project, Hadronized Spectra (The LHC Sonifications), which is based on data derived from proton collisions at the LHC. The project consists of five compositions and is about an hour in length. The score for each composition was synthesized in part from data generated by the ATLAS detector at the LHC. Specifically, the hadronization process, as set into motion by the physicists at the LHC, served as an “out of time sub-score” that generated the data from which the “in-time” scores for this project were derived.

However, to quote Frank Zappa, “Talking about music is like dancing about architecture.” It is very difficult to communicate the creative processes involved in composing. We make thousands of split-second decisions all along the path that eventually culminates in a finished composition. Further, the basis for these decisions is often primarily intuitive, making them even less tangible. Thus, I will delineate the procedural aspects of putting this project together in hopes of providing some insight, but be aware that in doing so we are only scratching the surface of what actually took place. Listening examples from parts of the project will be provided to further illuminate the subject. Listening tells the whole story, and to that end, perhaps it would be best to begin by listening to the first movement of the first piece, titled Emergence (Audio 1).

Immersion and Emergence

When one becomes deeply immersed in the creative process, aspects of the activity often begin to manifest in quite an unexpected manner. These manifestations are the result of a phenomenon we commonly term “emergence”. In general, emergence might be described as the effect of the outputs being greater than the sum of the inputs. Something happens — something beyond what one would expect the combination of algorithms we set in motion should be able to produce. The ghost in the machine, as it were, emerges. Similar to the way that diamond mineworkers are looking for that faint glimmer in the dark fissures between the rocks, I am a miner for these emergent properties. For me, these are the basis of every creative endeavour. I am constantly looking for that speck of light that will ultimately unfold into a creative expression, and I am constantly creating conditions conducive to their occurrence.

Sonification

In his book Poetics of Music, Igor Stravinsky states:

As for myself, I experience a sort of terror when, at the moment of setting to work and finding myself before the infinitude of possibilities that present themselves, I have the feeling that everything is permissible to me. If everything is permissible to me, the best and the worst; if nothing offers me any resistance, then any effort is inconceivable, and I cannot use anything as a basis, and consequently every undertaking becomes futile. … [M]y freedom will be so much the greater and more meaningful the more narrowly I limit my field of action and the more I surround myself with obstacles. Whatever diminishes constraint diminishes strength. The more constraints one imposes, the more one frees one’s self of the chains that shackle the spirit. (Stravinsky 1947, 66/68)

This sounds a bit like something Houdini might have said.

As a composer, never have I found myself more constrained than when working on sonification projects. The rules I set forth are simple and yet stringent. First, I must remain true to the data that I am attempting to sonify, or what is the point? Second, I must create a musically satisfying result from the sonification. These two simple objectives are inevitably at odds with each other from the outset, and yet they offer the most seductive creative enticement, precisely because the endeavour is so constraining and therefore so freeing. This typifies the powerful and paradoxical phenomenon to which Stravinsky so eloquently referred.

There are several types of sonification, each of which is an aspect of auditory display. For the purposes of this paper I will use the term “sonification” as being synonymous with “parameter mapping sonification”, which consists of obtaining acoustic attributes of events by a mapping from data attribute values (Hermann, Hunt and Neuhoff 2011). The rendering and playback of the data items yields the sonification.
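To make the definition concrete, here is a minimal Python sketch of parameter mapping sonification: each data item becomes one event, and its attribute values are mapped linearly onto acoustic attributes. The attribute names (`energy`, `spread`), the output ranges and the fixed time step are hypothetical, chosen only to show the structure; they are not the mappings used in this project.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Event:
    """One sound event described by acoustic attributes."""
    start: float      # onset time in seconds
    pitch_hz: float   # frequency in Hz
    amplitude: float  # linear gain, 0..1


def linear_map(value: float, lo: float, hi: float,
               out_lo: float, out_hi: float) -> float:
    """Map value from [lo, hi] onto [out_lo, out_hi], preserving proportions."""
    if hi == lo:
        return out_lo
    return out_lo + (value - lo) * (out_hi - out_lo) / (hi - lo)


def sonify(records: List[Dict[str, float]], step: float = 0.25) -> List[Event]:
    """Parameter mapping sonification: each data item becomes one event, and
    its attribute values determine the event's acoustic attributes.
    'energy' and 'spread' are hypothetical attribute names."""
    energies = [r["energy"] for r in records]
    spreads = [r["spread"] for r in records]
    e_lo, e_hi = min(energies), max(energies)
    s_lo, s_hi = min(spreads), max(spreads)
    return [
        Event(
            start=i * step,  # data order -> time
            pitch_hz=linear_map(r["spread"], s_lo, s_hi, 110.0, 880.0),
            amplitude=linear_map(r["energy"], e_lo, e_hi, 0.1, 1.0),
        )
        for i, r in enumerate(records)
    ]


events = sonify([{"energy": 10.0, "spread": 0.2},
                 {"energy": 30.0, "spread": 0.8}])
```

Rendering these events (for example, as score lines for a synthesis program) and playing them back would yield the sonification.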

Sonification is often thought of as a scientific approach that implies accurately representing data in sound in a way that portrays its source. For me, however, it means that the data is intended to be the basis for a musical composition. As such, sonification is one of several approaches to score synthesis that I have implemented over the years. The primary goal is to create a very “musical” piece through an interpretation of the data. The premise is that the organization and form engendered by the stream of data will be innately interesting to the listener, if only subconsciously, because its uniquely organized structure provides a formal framework. Representing the data as a reflection of its source is a secondary goal.

The General Process

Let us further establish a basis for communication by stipulating some terminology. I have long since abandoned contemporary classical views on musical composition. For example, I no longer think in terms of notes, but in terms of events, algorithmic events and their output. Likewise, I think in terms of frequency relationships rather than parameters such as melody, harmony, key signatures and scales. Beats, time signatures and other rhythmic structures also fall under the umbrella of frequency relationships (thank you to Stockhausen for pointing that out over half a century ago). Finally, for my own needs, counterpoint refers to the wide scope of parametric counterpoint: the interaction of the parameters used to create and manipulate events.
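The claim that rhythm falls under frequency relationships can be checked with simple arithmetic: a tempo is a pulse rate, so it can be expressed in hertz, and repeatedly doubling a pulse eventually carries it into the pitch range. The Python sketch below illustrates that continuum; it is not part of the author's toolchain, and the 20 Hz threshold is only the commonly cited approximate lower limit of pitch perception.

```python
def bpm_to_hz(bpm: float) -> float:
    """A tempo in beats per minute is simply a pulse frequency in hertz."""
    return bpm / 60.0


def doublings_to_pitch(pulse_hz: float, threshold_hz: float = 20.0) -> int:
    """Count the octave doublings a pulse needs before its repetition rate
    reaches an approximate lower limit of pitch perception."""
    if pulse_hz <= 0:
        raise ValueError("pulse frequency must be positive")
    doublings = 0
    while pulse_hz < threshold_hz:
        pulse_hz *= 2.0
        doublings += 1
    return doublings


pulse = bpm_to_hz(120)              # 2.0 Hz: two beats per second
n = doublings_to_pitch(pulse)       # 4 doublings: 2 -> 4 -> 8 -> 16 -> 32 Hz
seven_octaves_up = pulse * 2 ** 7   # 256.0 Hz, a pitch just below middle C
```

In this view a 120 BPM beat and a tone near middle C differ only in rate, which is the sense in which beats and time signatures become frequency relationships.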

I compose using Csound and find it perfectly suited to my compositional sensibilities. For this project, I used only a single instrument, one that I have been expanding and refining for nearly 15 years (described in more detail below). It is a sample playback instrument that implements 19 pfields (parameter fields, the variables that make up each event statement in a Csound score) and allows a very wide range of variation relative to the original base sample. The second movement of Emergence uses exactly the same four base samples as the first movement. In the beginning, one can hear the similarities, but as the piece progresses it becomes difficult to discern that the sounds share the same source. The premise is that the sounds in the second movement are related closely enough to those in the first to provide continuity, yet differ enough to sustain interest.
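For readers unfamiliar with Csound scores, each event is an `i` statement whose space-separated values are the pfields: p1 is the instrument number, p2 the start time and p3 the duration, while the meaning of p4 onward is defined by the instrument. The Python sketch below formats one such line. It assumes the 19 pfields are counted from p1, and the values from p4 onward are placeholders, since the instrument's own pfield assignments are not listed here.

```python
from typing import Sequence

NUM_PFIELDS = 19  # assumption: the instrument's 19 pfields are p1 through p19


def score_line(pfields: Sequence[float]) -> str:
    """Format one Csound score 'i' statement from its pfield values.

    p1 = instrument number, p2 = start time, p3 = duration (both in beats,
    which equal seconds at Csound's default tempo of 60); p4 onward are
    defined by the instrument itself.
    """
    if len(pfields) != NUM_PFIELDS:
        raise ValueError(f"expected {NUM_PFIELDS} pfields, got {len(pfields)}")
    return "i " + " ".join(f"{p:g}" for p in pfields)


# Hypothetical event: instrument 1, starting at 0.5, lasting 2 beats;
# p4..p19 are placeholder zeros.
line = score_line([1, 0.5, 2.0] + [0.0] * 16)
```

A score synthesized this way is simply thousands of such lines, one per event.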

The Large Hadron Collider

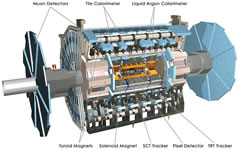

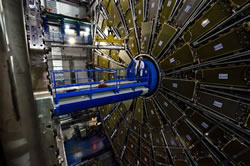

As mentioned earlier, aspects of the scores for each piece of music on this project were directly synthesized from data generated by the Large Hadron Collider (Fig. 2). The LHC is the largest particle accelerator in existence, and is arguably the most complex machine ever built. It is an astonishing testament to what we as human beings can do if we work together in a positive manner. Located on the French-Swiss border, the LHC has required the cooperation, both financial and technological, of over one hundred countries for its development, construction and subsequent use. As I understand it, any physicist in the world may access the data it produces as well as conduct experiments using it either on site or remotely. The super-computing grid that was created in order to accommodate the more than six million pieces of data that are collected in any single event is second to none and is a technological marvel.

The process involved in producing Hadronized Spectra, which was completed in January of 2013, was an interesting and lengthy one requiring almost two years to accomplish. It began in the spring of 2010 when a friend mentioned that Dr. Lily Asquith, an English physicist working at the LHC, had been interviewed on NPR about a project she was spearheading called LHCSound. Though I am not a physicist, I am very interested in quantum physics, and so I was quite intrigued by the idea of using the LHC data as a basis for a composition. After listening to the interview online, I immediately contacted her. A few informative emails later, I was given access to several sets of data from proton collision events collected by the ATLAS detector (Figs. 3, 4).

It was clear from the beginning that using the data provided would present an intense challenge. It was very difficult to work with the numerical representations of the proton collision events while still adhering to my rules for sonification, and at times the task was overwhelming. Allow me to explain why by first providing a layman’s description of the process of hadronization.

Protons are obtained by stripping the electrons from hydrogen atoms; they are then accelerated to 99.9997% of the speed of light and guided by extremely powerful electromagnets. Two streams of protons, travelling in opposite directions, circulate within a circular underground tunnel that is 27 km in circumference. At top speed they make the circuit 11,000 times per second. The streams are then crossed within a detector such as ATLAS (one of the four main detectors at the LHC), and as the protons collide, measurements are taken. The collisions produce temperatures many times hotter than the core of the sun. In a collision, the quarks and gluons that make up the protons are knocked loose and almost immediately bind into new composite particles called “hadrons”; this is the process known as hadronization. As the protons come apart in a collision, the angle at which each particle emerges, the energy it carries and many other aspects are detected and measured. In 2011, Dr. Asquith provided me with a list of five of the data parameters, described here in a physicist’s terminology (Asquith 2011):

- Transverse mass — The transverse mass of an event can be thought of in very simple terms as how heavy an event is. Transverse mass is calculated by adding up the mass and transverse momenta of all particles produced in a collision.

- Transverse sphericity — The sphericity of an event is a measure of how close the spread of energy in the event is to a sphere in shape. An event that appears to be completely smoothly distributed in three dimensions is spherical and has a sphericity of 1. An event that is clumpy and irregular is closer to having sphericity 0.

- Centrality — This is similar to sphericity, but is more a measure of how much of the “event” is contained within the central part of the detector. By the “central” part of the detector we mean the disk (ATLAS is cylindrical) that passes through the collision point.

- Electromagnetic fraction — The ATLAS energy detectors are called calorimeters, and there are two types of calorimeter. One of them is designed to measure the energy of particles that interact via the electromagnetic force, and the other is designed to measure the energy of particles that interact “hadronically” via the strong nuclear force. The electromagnetic fraction is calculated by dividing the energy detected in the electromagnetic calorimeter by the total energy detected.

- Missing energy fraction — ATLAS is designed to be able to detect pretty much any kind of particle. A neutrino, however, is not directly detectable by any part of ATLAS. This funny little particle refuses to interact with anything. Billions of them are passing through your body as you read this, mostly coming from the sun. They will fly right through planet earth without even noticing it is there. The only way we can detect neutrinos with ATLAS is to look at the spread of detectable energy in the detector and try to balance it according to the laws of conservation of energy and momentum. We calculate the amount of energy carried by the neutrino(s) produced in a collision and subsequent particle decays and divide this by the total detectable energy in an event.
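The ratios in the list above are simple to compute once the detector quantities are known. The following Python sketch assumes per-event inputs (calorimeter energies, an estimate of the missing energy, and the x and y momentum components of the detected particles). For sphericity it uses one common collider-physics definition of transverse sphericity, built from the eigenvalues of a 2×2 momentum tensor; this is an illustration only, and the exact definitions behind the LHCsound data may differ. Transverse mass and centrality are left out because the descriptions above are not precise enough to pin down a formula.

```python
import math
from typing import Iterable, Tuple


def transverse_sphericity(momenta: Iterable[Tuple[float, float]]) -> float:
    """Transverse sphericity S_T = 2*l2 / (l1 + l2), where l1 >= l2 are the
    eigenvalues of the normalized 2x2 transverse momentum tensor
    S_ab = sum(p_a * p_b) / sum(pT^2), with a, b in {x, y}.
    S_T is 1 for an event isotropic in the transverse plane and 0 for a
    back-to-back (pencil-like) event."""
    sxx = syy = sxy = 0.0
    for px, py in momenta:
        sxx += px * px
        syy += py * py
        sxy += px * py
    norm = sxx + syy  # sum of pT^2 over all particles
    if norm == 0.0:
        raise ValueError("event has no transverse momentum")
    sxx, syy, sxy = sxx / norm, syy / norm, sxy / norm
    # Eigenvalues of a symmetric 2x2 matrix: mean +/- radius.
    mean = (sxx + syy) / 2.0
    radius = math.hypot((sxx - syy) / 2.0, sxy)
    l1, l2 = mean + radius, max(0.0, mean - radius)
    return 2.0 * l2 / (l1 + l2)


def electromagnetic_fraction(e_em: float, e_had: float) -> float:
    """Energy in the electromagnetic calorimeter divided by the total
    calorimeter energy (assumed here to be EM plus hadronic)."""
    return e_em / (e_em + e_had)


def missing_energy_fraction(e_missing: float, e_visible: float) -> float:
    """Estimated energy carried off by undetected neutrinos divided by the
    total detectable energy in the event."""
    return e_missing / e_visible
```

Four particles spread evenly around the beam axis give a transverse sphericity of 1, while two particles flying back to back give 0, matching the “spherical” and “clumpy” extremes described above.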

One challenge I faced in mapping the data to reasonable correlates among my instrument parameters was that the hadronization process occurs so rapidly that the detectors cannot capture its timing. Upon discovering this, I considered abandoning the project, because it seemed there was no reasonable way to represent the events without such fundamental information. However, after further consideration, it was decided that some of the data provided could be mapped to the timing pfields of the score, even though it did not represent actual timing relationships. Though this constraint was unexpected, it actually worked out quite nicely.
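One way to derive timing from values that are not themselves timings is sketched below in Python: one data column is scaled into a range of inter-onset intervals and accumulated into start times (p2), and another is scaled into durations (p3). Which columns and ranges were actually used is not stated in the text, so the column arguments and the default ranges here are hypothetical.

```python
from typing import List, Sequence, Tuple


def rescale(values: Sequence[float], out_lo: float, out_hi: float) -> List[float]:
    """Min-max scale values onto [out_lo, out_hi], keeping their proportions."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [out_lo] * len(values)
    return [out_lo + (v - lo) * (out_hi - out_lo) / (hi - lo) for v in values]


def timing_from_data(ioi_column: Sequence[float],
                     dur_column: Sequence[float],
                     ioi_range: Tuple[float, float] = (0.05, 2.0),
                     dur_range: Tuple[float, float] = (0.5, 8.0),
                     ) -> List[Tuple[float, float]]:
    """Derive (p2 start, p3 duration) pairs from data columns that carry no
    timing information of their own. One column is scaled to inter-onset
    intervals and accumulated into start times; another becomes durations."""
    if len(ioi_column) != len(dur_column):
        raise ValueError("columns must have the same length")
    intervals = rescale(ioi_column, *ioi_range)
    durations = rescale(dur_column, *dur_range)
    starts, t = [], 0.0
    for gap in intervals:
        starts.append(t)  # the first event starts at 0
        t += gap          # each interval pushes the next onset later
    return list(zip(starts, durations))


timing = timing_from_data([0.0, 1.0, 0.5], [1.0, 3.0, 2.0])
```

Because each start time is the sum of the preceding intervals, the data values shape the rhythm cumulatively; in this sketch the last interval is never used, since no event follows it.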

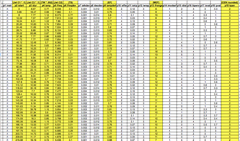

Using a spreadsheet, I proceeded to organize eight columns and almost three thousand rows of data into the beginnings of a score. When mapping the data to the score, I chose, where possible, LHC parameters that seemed to resemble parameters of the Csound instrument. For instance, the transverse mass seemed to me to correlate most directly with amplitude. Sphericity seemed to be a spatial parameter and so was used in determining aspects of the panning.
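The two correspondences named above might be written as follows. This Python sketch is an assumption about the form of the mapping, not a record of the spreadsheet formulas: it maps transverse mass linearly onto a decibel range (so that heavier events are louder) and passes sphericity, which already lies between 0 and 1, through as a pan coordinate. The decibel range and the choice of the x axis are hypothetical.

```python
def mass_to_amplitude(mass: float, mass_lo: float, mass_hi: float,
                      db_lo: float = -40.0, db_hi: float = 0.0) -> float:
    """Map transverse mass onto a decibel range, then convert to linear
    amplitude, so heavier events are louder. The dB range is hypothetical."""
    if mass_hi == mass_lo:
        return 10.0 ** (db_hi / 20.0)
    frac = (mass - mass_lo) / (mass_hi - mass_lo)
    frac = min(1.0, max(0.0, frac))  # guard against out-of-range values
    db = db_lo + frac * (db_hi - db_lo)
    return 10.0 ** (db / 20.0)


def sphericity_to_pan_x(sphericity: float) -> float:
    """Sphericity already lies in [0, 1], so it can serve directly as a
    pan coordinate (here, hypothetically, the x axis)."""
    return min(1.0, max(0.0, sphericity))
```

Mapping in decibels rather than in linear amplitude spreads the data over more perceptually even loudness steps, since perceived loudness follows amplitude roughly logarithmically.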

The Instrument

One way I expanded my Csound instrument for this project was by developing a system of Cartesian coordinates, with both x- and y-axis values in the range 0 to 1, to determine the two-dimensional placement of an event within the four-channel panorama. When an event was dynamic (that is, moving), this position served as the starting point from which an envelope determined its panning path through the space (Fig. 5).
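A point in the unit square can be turned into four speaker gains with bilinear weights. The Python sketch below assumes a layout in which x runs from left (0) to right (1) and y from rear (0) to front (1), and applies an equal-power law; both the layout and the panning law are assumptions, as the text does not specify them.

```python
import math
from typing import Dict


def quad_gains(x: float, y: float) -> Dict[str, float]:
    """Equal-power bilinear panning of a point (x, y) in the unit square
    onto four speakers (assumed layout: x left->right, y rear->front)."""
    x = min(1.0, max(0.0, x))
    y = min(1.0, max(0.0, y))
    weights = {
        "front_left": (1.0 - x) * y,
        "front_right": x * y,
        "rear_left": (1.0 - x) * (1.0 - y),
        "rear_right": x * (1.0 - y),
    }
    # The bilinear weights sum to 1; taking square roots makes the squared
    # gains sum to 1, so total power stays constant wherever the sound sits.
    return {name: math.sqrt(w) for name, w in weights.items()}
```

At the centre (0.5, 0.5) each speaker receives a gain of 0.5, and at a corner only that corner's speaker sounds.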

The Csound instrument used for this project is, from a programming perspective, actually quite simple; score synthesis is the primary driving force behind the complexity of the pieces. Nonetheless, a brief overview of it follows. (More detailed information about the instrument is available as a PDF on the author’s website.)

The Csound instrument used for this project consists of four basic modules:

- Sample playback

- Distance algorithms

- Panning algorithms

- Effects

The sample playback module employs the Csound diskin2 opcode. For any single event, up to 19 iterations of the sample (the number is set by a pfield in the score) can be produced simultaneously, each with its own amplitude, amplitude envelope and frequency. Each iteration is a variation on the base sample values. One way to describe the result is as a complex chorusing effect; another is as a complex set of harmonics. All of this is determined on an event-by-event basis throughout the score.
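A rough sketch of how such per-event variation could be generated is shown below in Python. Each iteration receives a random detuning, expressed as a transposition factor of the kind diskin2 takes through its kpitch argument, and a random share of the event's amplitude. The detuning range, the amplitude scheme and the names `Iteration` and `make_iterations` are hypothetical; the text does not describe how the instrument computes its variations.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

MAX_ITERATIONS = 19  # up to 19 simultaneous iterations per event, per the text


@dataclass
class Iteration:
    pitch_ratio: float  # playback transposition factor (1.0 = original pitch)
    amplitude: float    # linear gain of this layer


def make_iterations(count: int, detune_cents: float, base_amp: float = 1.0,
                    seed: Optional[int] = None) -> List[Iteration]:
    """Generate `count` variations of a base sample, each with its own pitch
    ratio and amplitude. Small random detuning (in cents) gives a chorusing
    effect. Each layer's gain is at most base_amp / count, so the summed
    amplitudes never exceed base_amp."""
    if not 1 <= count <= MAX_ITERATIONS:
        raise ValueError(f"count must be between 1 and {MAX_ITERATIONS}")
    rng = random.Random(seed)
    layers = []
    for _ in range(count):
        cents = rng.uniform(-detune_cents, detune_cents)
        layers.append(Iteration(
            pitch_ratio=2.0 ** (cents / 1200.0),  # 1200 cents per octave
            amplitude=base_amp / count * rng.uniform(0.5, 1.0),
        ))
    return layers


layers = make_iterations(7, detune_cents=15.0, seed=42)
```

Small detuning values yield the chorusing described above; integer ratios (2, 3, 4 and so on) in place of random detuning would instead stack the iterations as harmonics.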

Next, I implemented a section meant to create the perception of distance in the piece, which I call, aptly enough, the distance algorithms. They mix the dry output of the diskin2 events with reverb envelopes and amplitude envelopes. The more reverb is applied, the farther away a sound seems, not only because of the auditory cues offered by the additional reverberation, but also because reverb dulls the sound, much as distant objects appear dull to us visually.
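The distance idea can be reduced to two gains per event. In the Python sketch below, a normalized distance controls an equal-power crossfade from the dry signal to the reverb send while the overall level falls, so the reverberant share grows as the sound recedes. The crossfade law and the `far_level` default are assumptions for illustration; the instrument itself uses reverb and amplitude envelopes whose shapes are not given here.

```python
import math
from typing import Tuple


def distance_mix(distance: float, far_level: float = 0.25) -> Tuple[float, float]:
    """Return (dry_gain, reverb_gain) for a normalized distance in [0, 1].

    0 = near: fully dry at full level; 1 = far: fully reverberant at
    far_level. An equal-power crossfade moves the sound from dry to reverb,
    and the overall level falls linearly from 1 to far_level.
    """
    d = min(1.0, max(0.0, distance))
    level = 1.0 - d * (1.0 - far_level)
    dry = math.cos(d * math.pi / 2.0) * level
    wet = math.sin(d * math.pi / 2.0) * level
    return dry, wet
```

The ratio of reverb to dry signal rises steadily with distance, which is the cue the text relies on, while the falling overall level adds the familiar sense that distant sounds are quieter.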

Following this come the panning algorithms. For each event, three pfields in the score determine the initial position in the form of x and y coordinates, as well as whether a particular sound is to move or not. If it is to move through the two dimensions of the quadraphonic panorama, these pfields also determine the way in which it moves by selecting one of about 20 envelopes. The panning algorithms and the distance algorithms work in parallel.
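The three panning pfields can be modelled as a starting x, a starting y and a path selector, where 0 means a static sound. The Python sketch below uses three hypothetical path envelopes in place of the instrument's roughly 20, each expressed as an offset from the starting point over the normalized duration of the event; the table contents and function names are invented for illustration.

```python
import math
from typing import Callable, Dict, Tuple

# A path maps normalized event time t in [0, 1] to an offset (dx, dy)
# from the starting position; every path starts at offset (0, 0).
Path = Callable[[float], Tuple[float, float]]

# Hypothetical envelope table: three illustrative paths stand in for the
# roughly 20 envelopes the instrument selects from.
PATHS: Dict[int, Path] = {
    1: lambda t: (t, 0.0),   # drift to the right
    2: lambda t: (0.0, -t),  # drift toward the rear
    3: lambda t: (0.3 * math.sin(2 * math.pi * t),
                  0.3 * math.cos(2 * math.pi * t) - 0.3),  # circle back to start
}


def _clamp01(v: float) -> float:
    return min(1.0, max(0.0, v))


def pan_position(x0: float, y0: float, path_id: int, t: float) -> Tuple[float, float]:
    """Position of an event at normalized time t, from three score values:
    starting x, starting y, and a path selector (0 = static)."""
    if path_id == 0:
        return _clamp01(x0), _clamp01(y0)
    dx, dy = PATHS[path_id](_clamp01(t))
    return _clamp01(x0 + dx), _clamp01(y0 + dy)
```

Sampling `pan_position` over the course of an event gives a trajectory that could feed per-speaker gains such as the bilinear scheme sketched for the coordinate system above; clamping keeps every position inside the quadraphonic square.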

In the last section of the instrument, a pfield in the score determines whether or not an effect is applied to the event and, if so, which one. The choices are reverb, delay, chorusing, comb filter and a variable band-pass filter sweep.

The Score

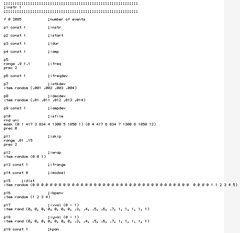

To create the score, I first had to scale the data into ranges usable by the Csound instrument. That is, I had to manipulate the data mathematically so that it maintained proportionately equivalent relationships while falling within the ranges usable by the instrument parameters. Eight of the 19 pfields (yellow columns in Fig. 6) were stipulated by the LHC data. The other 11 pfields were determined using tendency masks in an application called Cmask, a stochastic event generator for Csound scores. The Cmask code, or sub-score, contains several instances of the string “const 0” (Fig. 7). These are dummy pfields standing in for the values supplied by the LHC data; those columns were eliminated when the output of the sub-score was merged with the LHC data.
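The two operations mentioned above, stochastic generation within tendency masks and merging with the data columns, are sketched below in Python. The `tendency_mask` function is a simplified stand-in for Cmask, not its actual syntax: it draws values uniformly between two boundaries that move linearly across the sequence. `merge_row` then overwrites the placeholder slots (the “const 0” columns) with data values. The function names and slot indices are hypothetical.

```python
import random
from typing import List, Sequence


def tendency_mask(n: int, lo_start: float, lo_end: float,
                  hi_start: float, hi_end: float, seed: int = 0) -> List[float]:
    """Generate n values, each drawn uniformly between a lower and an upper
    boundary that move linearly from their start to end values over the
    course of the sequence (a simplified tendency mask)."""
    rng = random.Random(seed)
    values = []
    for i in range(n):
        t = i / (n - 1) if n > 1 else 0.0  # normalized position in the sequence
        lo = lo_start + t * (lo_end - lo_start)
        hi = hi_start + t * (hi_end - hi_start)
        values.append(rng.uniform(lo, hi))
    return values


def merge_row(generated: Sequence[float], data_values: Sequence[float],
              data_slots: Sequence[int]) -> List[float]:
    """Replace the dummy columns of a generated score row (the 'const 0'
    placeholders) with the corresponding data values."""
    if len(data_values) != len(data_slots):
        raise ValueError("one data value is needed per placeholder slot")
    row = list(generated)
    for slot, value in zip(data_slots, data_values):
        row[slot] = value
    return row


column = tendency_mask(11, lo_start=0.0, lo_end=0.5, hi_start=0.2, hi_end=1.0, seed=3)
row = merge_row([1, 0, 2.0, 0, 0.7], data_values=[5.5, 0.3], data_slots=[1, 3])
```

Keeping the stochastic and data-driven columns in one row format means the merged result can be written straight out as score lines.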

Editing

After all of the planning and preparation, after all of the tedious work of stipulating the constraints to be used by the tendency masks, after meticulously creating the base samples, after scaling the sonification data, after all of the testing and mining for emergent quanta… comes the editing, which for me is the most enjoyable and deeply immersive aspect of the compositional process. By the very nature of the way the sound files were generated, there is an inherent general formal structure already present in them and the editing phase is the time to bring these formal relationships into focus.

So, with all of the generated sound files laid out in order in my DAW, I begin by ruthlessly eliminating much of the material. Of course, I eliminate anything that is obviously unappealing, but I also eliminate anything that sounds remotely familiar and anything that sounds like one of the clichés that have become prevalent in electroacoustic music. I then join what is left, all the while remaining ready to eliminate anything that does not contribute to the wholeness of the piece as it progresses. This joining is most often accomplished by using fades, both subtle and overt, to blend one sound file with another. I usually delete much more material than I use, hoping that what remains is authentic, creative, unique and true to the goals I have set forth.
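Joining files with fades can be illustrated with an equal-power crossfade on raw sample values. The Python sketch below is generic (a DAW performs this internally, and the fade shapes actually used are not specified); it overlaps the end of one mono sequence with the start of the next.

```python
import math
from typing import List, Sequence


def crossfade(a: Sequence[float], b: Sequence[float], overlap: int) -> List[float]:
    """Join two mono sample sequences, overlapping the last `overlap` samples
    of `a` with the first `overlap` samples of `b` under an equal-power
    (cosine/sine) fade."""
    if not 0 <= overlap <= min(len(a), len(b)):
        raise ValueError("overlap must fit within both sequences")
    head = list(a[:len(a) - overlap])
    tail_a = a[len(a) - overlap:]
    mixed = []
    for i in range(overlap):
        # phase runs from just above 0 to just below pi/2 across the overlap
        phase = (i + 0.5) / overlap * math.pi / 2.0
        mixed.append(tail_a[i] * math.cos(phase) + b[i] * math.sin(phase))
    return head + mixed + list(b[overlap:])


joined = crossfade([1.0] * 10, [0.0] * 10, overlap=4)
```

An equal-power fade keeps perceived loudness roughly steady when the two files are uncorrelated; for very similar material, a linear, equal-gain fade avoids a bump in level at the midpoint.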

At this crucial point in the process, my primary focus in editing is phrasing. Two aspects of composition that are often overlooked in these amazing times, with so many sounds readily available to us, are the overall formal structure of a piece and one of its fundamental components, the phrasing. Phrasing is the key to turning syntactically interesting words (sounds) into sentences (phrases) and paragraphs (groups of phrases). Phrasing is what makes musical sense of what would otherwise be isolated fragments of related but unconnected events. It turns all of these beautiful sounds into music.

Phrasing is the difference between related sound combinations that simply exist in adjacent spaces and those that connect and interact in a meaningful way. It is the interplay between the contingent characters. It is where we create tension and release, and where we take the listener far from everything they have ever known, and then return them safely, yet somehow changed. Editing, with a focus upon phrasing, is where the magic happens.

Composition

The output of this Csound instrument was a five-channel audio file meant for four speakers and an LFE. I intended to use the same event data for each of several pieces and soon realized that the scaling of the LHC data and the tendency masks used for the non-LHC parameters, as well as the choice of the four base samples selected for each piece, would provide an avenue for creative license and yet allow me to remain true to the original data.

For Perturbative Expansion, the first of the five pieces to be completed, I allowed myself only minimal editing of the output (Audio 3). I wanted to represent the data in the purest form possible. Listening to the piece, one can almost recognize the spray of hadrons being produced as the protons come apart, albeit vastly slowed down. It eventually dissolves into chaos, which finally fades away in parallel with the dissipation of energy.

At the opposite extreme, for the piece titled Immersion, I gave myself complete creative license in the form of editing (Audio 4). It began as over two hours of sound that was tediously edited down to a 12-minute piece. The other three pieces were somewhere between these extremes.

To be subjective for just a moment, what was interesting throughout the project was, for lack of a better description, the “tonal” quality of much of the sound produced. Many passages within each piece sound quite “harmonic” (Audio 5). An unexpected characteristic of the collision events turned out to be a sort of numerical symmetry.

Though it was challenging at first, through perseverance, I finally began to understand how to work with the LHC data in a way that met all of the criteria set forth. It was a deeply immersive experience and, in the end, the music it produced was quite satisfactory.

Conclusion

I have attempted to provide insight into my own compositional process. As mentioned at the beginning of this text, we can barely scratch the surface in communicating the creative processes involved in composition. However, by providing a parallel account of it that outlines many of the systems employed, I hope to have conveyed a sense of it.

Bibliography

Asquith, Lily. “LHCsound.” http://lhcsound.hep.ucl.ac.uk

_____. “Event Shapes.” Private correspondence and LHCsound website, 2011. http://lhcsound.hep.ucl.ac.uk/page_sounds_eventshapes/EventShapes.html [Last accessed January 2011]

Hermann, Thomas, Andy Hunt and John G. Neuhoff (Eds.). The Sonification Handbook. Berlin: Logos Verlag, 2011.

Licata, Thomas. Electroacoustic Music: Analytical Perspectives. Westport CT: Greenwood Press, 2002.

Rhoades, Michael. “Azimuth — Algorithmic Score Synthesis Techniques.” Journal SEAMUS 18/1 (2005), pp. 2–11.

Stockhausen, Karlheinz. “How Time Passes.” Die Reihe 3 (1956), pp. 99–139.

Stravinsky, Igor. Poetics of Music in the Form of Six Lessons. Trans. Arthur Knodel and Ingolf Dahl. Cambridge MA: Harvard University Press, 1947.
