Interactive Sound Synthesis Mediated through Computer Networks

The digital age is continuously redefining the bounds of interaction. This has never been more apparent than in the realm of sonic arts, where network interactivity is becoming increasingly ubiquitous. After all, art is essentially born of the interaction between people and the phenomena around them.

Interactivity is a fundamental element of music performance, whether it is amongst performers, between the performers and the audience, or between the performers and the work itself. Since the era of The League of Automatic Music Composers and The Hub (Gresham-Lancaster 1998), composers, musicians and music technologists have explored the paradigm of computer networks as the medium of interactivity in music systems (Barbosa 2003; Traub 2005; Mills 2010).

Motivated in part by research into the microsonic components of sound creation, the authors introduce a method in which sound is generated by the feedback of an impulse across a network.

Related Works

In 2005, Gil Weinberg provided a theoretical framework that categorizes the approaches to network music creation (Weinberg 2005). Much of the research in the field of network music has focused on the development of platforms for interactive and collaborative music making. Frameworks developed for musical jamming and improvisation include Auracle (Ramakrishnan, Freeman and Varnik 2004), Jamspace (Gurevich 2006), The Frequencyliator (Rebelo and Renaud 2006), The DIAMOUSES Framework (Alexandraki and Akoumianakis 2010) and Signals, an interactive framework for music robotics (Vallis et al. 2012). Such platforms have extended the ability for multiple people to create and manipulate music together over networks, though the primary function of the network in these works is as a tool for sharing audio, visual content and data in real time.

An inherent issue in real-time network communication is latency. Artists and researchers have approached this problem in different ways. Álvaro Barbosa et al. experimented with methods of dynamically adapting to latency in network music (Barbosa, Cardoso and Geiger 2005), while Ajay Kapur et al. developed a low-latency framework for bi-directional network media performance over a high-bandwidth connection (Kapur et al. 2005). Essentially, these researchers were interested in the reduction of latency. With peerSynth, by contrast, Jörg Stelkens sought to incorporate the network latency into the system (Stelkens 2003). His work informs the authors’ own approach, which treats latency as an interesting component of the medium that ought to be integrated into the musical output.

Another common domain in interactive network music is social participatory work. Atau Tanaka et al. explored the idea of social computing and participation (Tanaka, Tokui and Momeni 2005). Jack Stockholm’s system was designed for a composition utilizing several users in a public space (Stockholm 2008). The focus of these participatory works tends to be on the act of participation and not the network as a medium for sound creation.

Much of the modern research in network music has made use of the Open Sound Control (OSC) messaging protocol (Wright 2005). OSC allows for the exchange of musical control parameters between different network locations, though it is not an ideal solution for sending audio. Recently, KatieAnna Wolf and Rebecca Fiebrink have created SonNet, a software tool that allows artists to access the low-level packet data that flows across networks (Wolf and Fiebrink 2013). These packets can then be used in composition through methods of sonification, though the content of the packets themselves is not necessarily audio.

The first researchers to explore the concept of the network as an acoustic medium were Chris Chafe et al., who were interested in physical modelling using computer networks (Chafe, Wilson and Walling 2002). Building on their concept, our approach utilizes the inherent characteristics of computer networks as components of sound synthesis and interactivity in sonic art performances.

System Overview

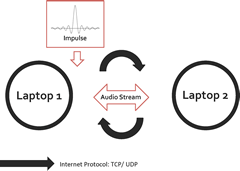

We designed an interactive system in which computer networks facilitate the synthesis of sonic material. Figure 1 shows an overview of the system, in which the feedback of an impulse between laptop performers over the Internet Protocol (IP) generates the sonic material. This section describes the impulse, the framework for synthesis, and the sonic material that results from the technique.

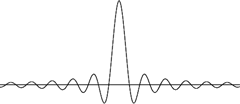

The basis of this method is the impulse, an almost instantaneous burst of substance that can be generated with different pulse widths. Methods of sound synthesis and filter design have long employed impulse generation in their construction (Roads 2004). As the initial input of our system, a single impulse (such as the one shown in Fig. 2) is evolved into complex tonal material through the chaotic feedback created between performers across a network.
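As a rough illustration of what such an input might look like in code, the following Python sketch builds a rectangular impulse with an adjustable pulse width. The function name `make_impulse` and its parameters are assumptions made for this example; the source does not specify how the impulse is generated.

```python
def make_impulse(length, pulse_width=1, amplitude=1.0):
    """Return `length` samples: `pulse_width` samples at `amplitude`, then silence.

    Hypothetical helper: names and defaults are illustrative assumptions.
    """
    if not 0 < pulse_width <= length:
        raise ValueError("pulse_width must be between 1 and length")
    return [amplitude] * pulse_width + [0.0] * (length - pulse_width)


narrow = make_impulse(8)               # single-sample impulse
wide = make_impulse(8, pulse_width=3)  # wider pulse, same buffer length
```

A wider pulse carries more energy into the feedback path, which is one plausible reason to treat pulse width as a performance parameter.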

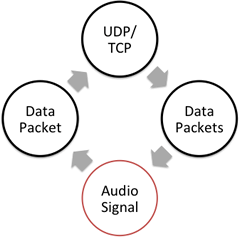

The communication framework is built on either the User Datagram Protocol (UDP) or the Transmission Control Protocol (TCP). These transport protocols, which run on top of IP, are used to pass sonic material from performer to performer. A typical workflow is shown in Figure 3: each performer converts audio to data and passes it to the network, and converts data received from the network back to audio.
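The audio-to-data and data-to-audio steps of this workflow can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not the authors' implementation: the 16-bit PCM format, the function names and the use of the loopback address are all choices made for the example.

```python
import socket
import struct


def audio_to_bytes(samples):
    """Quantise floats in [-1, 1] to little-endian signed 16-bit PCM (assumed format)."""
    ints = [round(max(-1.0, min(1.0, s)) * 32767) for s in samples]
    return struct.pack("<%dh" % len(ints), *ints)


def bytes_to_audio(payload):
    """Inverse of audio_to_bytes: 16-bit PCM bytes back to floats."""
    count = len(payload) // 2
    return [v / 32767 for v in struct.unpack("<%dh" % count, payload[:count * 2])]


def loopback_roundtrip(samples, host="127.0.0.1"):
    """Send one block over UDP to a socket on this machine and return what arrives."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind((host, 0))   # port 0 lets the OS choose a free port
        receiver.settimeout(1.0)   # UDP gives no delivery guarantee
        sender.sendto(audio_to_bytes(samples), receiver.getsockname())
        payload, _ = receiver.recvfrom(65535)
        return bytes_to_audio(payload)
    finally:
        sender.close()
        receiver.close()
```

Calling `loopback_roundtrip([1.0, 0.0, -1.0])` stands in for one performer; in a two-performer setup the receiving side would feed the decoded block back to the sender, closing the loop.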

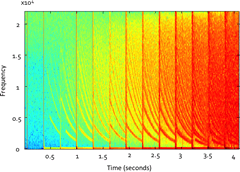

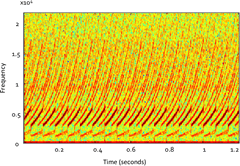

The sound synthesis is a result of a convolution between the feedback of impulses between performers and the inherent features of the network. This results in rich and chaotic sound. In methods such as Trainlet Synthesis, series of impulses are used to generate tones and clouds (Roads 2004). Our method starts from a similar basis but departs in its approach, construction and results. The spectrogramme in Figure 4 shows an instance of this process in which the initial impulse evolves over the duration of 4 seconds from a single impulse to complex sound (Audio 1). In Figure 5, there is a similar process occurring over 1.2 seconds with completely different resulting sounds (Audio 2). The resulting material can be used in further digital signal processing chains to generate the desired æsthetic output.
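To make the idea of feedback shaped by the network more concrete, the following Python sketch simulates it offline. It is a deliberately simplified toy model and not the system described here: each non-zero sample is "sent" and arrives later, attenuated, after a randomly jittered delay. The delay range, gain and seed are hypothetical values chosen for illustration.

```python
import random


def network_feedback(n_samples, base_delay=40, jitter=12, gain=0.9, seed=1):
    """Toy model: a single impulse re-enters the buffer after a jittered delay.

    All parameters are illustrative assumptions; delays are in samples.
    """
    if base_delay - jitter < 1:
        raise ValueError("delay must stay at least one sample long")
    rng = random.Random(seed)
    out = [0.0] * n_samples
    out[0] = 1.0  # the initial impulse
    for n in range(n_samples):
        if out[n] == 0.0:
            continue  # nothing to send at this sample
        # Simulated network latency for this sample.
        arrival = n + base_delay + rng.randint(-jitter, jitter)
        if arrival < n_samples:
            out[arrival] += gain * out[n]
    return out


signal = network_feedback(2000)
steady = network_feedback(200, jitter=0)  # fixed latency: regular pulse train
```

With `jitter=0` the result is a regular, decaying pulse train whose pitch follows the delay; with jitter the spacing becomes irregular, which hints at how variable latency can colour the output.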

Performance

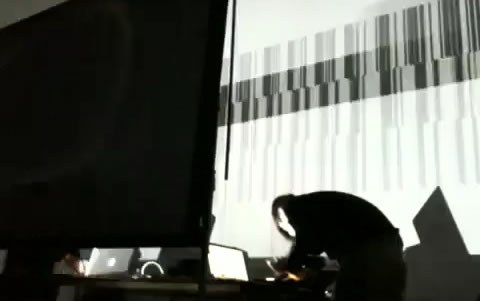

The implementation of the system described here in performance practice resulted in a series of audio-visual compositions by Kameron Christopher and Jingyin He. At the outset of this collaboration, the authors explored the use of inherent characteristics of the system to induce emergent behaviours towards generating sonic materials. The first composition of the series focuses on intuitively generating a rich palette of sonic materials (Video 1). In SS-0010.110011111011, the secure wireless connection provided by California Institute of the Arts was used. The entire campus, including the dormitories, uses this connection. The composition was performed on a Gallery Night at 9:00 p.m. Network traffic is lowest during this time because most students are attending the gallery openings on campus. The following observations were made:

- Wireless connection causes interesting rhythmic structures and timbre. However, it is unstable and prone to having overflows.

- Restricted shared wireless connections (e.g. academic institutions, arts venues) vary the pitch and timbre.

- Setting up different bit-depths causes high aliasing and bit-crushing effects.
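The bit-crushing effect noted in the last observation can be approximated by re-quantising samples to a lower bit depth. The sketch below is one simple reading of that observation, not the authors' code; the function name `bitcrush` and the rounding scheme are assumptions made for this example.

```python
def bitcrush(samples, bits):
    """Re-quantise floats in [-1, 1] to the grid of a signed `bits`-bit format.

    Hypothetical helper: fewer bits means coarser steps and more distortion.
    """
    if bits < 2:
        raise ValueError("bits must be at least 2")
    levels = 2 ** (bits - 1)  # number of steps between 0 and full scale
    return [round(s * levels) / levels for s in samples]


crushed = bitcrush([0.3, -0.3, 1.0], bits=2)  # very coarse: steps of 0.5
fine = bitcrush([0.3], bits=16)               # close to the original value
```

At 2 bits every sample snaps to a multiple of 0.5, whereas at 16 bits the quantisation error is far smaller and the sample stays close to its original value.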

Building upon these techniques, Planar Chaos (Video 2) focused on the control of timbres, as well as compositional form and structure, by steering the chaos. Due to the technical circumstances of the venue, this performance used a wired connection between the performers. The characteristics of a wired connection with regard to the sonic materials and resulting composition are as follows:

- Connection via LAN cables provides responsive and stable connections. However, rhythmic structures tend to be rigid and timbre tends to be homogeneous.

- Given cables of the same quality, latency is directly proportional to cable length.

- Increasing computational processes and memory usage can cause intermittent increase in latency. This can be used to simulate the varying latency in a wireless connection.
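The proportionality between cable length and latency noted above follows from propagation delay, which is the cable length divided by the signal speed in the cable. The Python sketch below shows the arithmetic; the velocity factor of 0.64 is an assumed, typical figure for twisted-pair cable and not a value taken from these performances.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def propagation_delay(length_m, velocity_factor=0.64):
    """Return the one-way propagation delay in seconds for a cable of `length_m` metres.

    `velocity_factor` is an assumed fraction of the speed of light.
    """
    return length_m / (velocity_factor * SPEED_OF_LIGHT)


short_run = propagation_delay(100)  # seconds for a 100 m cable
long_run = propagation_delay(200)   # doubling the length doubles the delay
```

This covers only the cable itself; switches, network interfaces and audio buffering add further latency that this sketch does not model.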

Performances at Interpolations: Noise in Space consolidated the observations from past performances and presented the refined practice of this methodology. The first piece, titled I, explores the more abrasive and visceral aspects of this synthesis technique, and exploits the complex timbral bursts produced by events in the network data stream (Video 3). In contrast, II explores the opposite aspect of the synthesis method, showing how the sounds can be used in highly controlled systems and manipulated by other digital signal processing methods to produce æsthetic outcomes (Video 4).

These performances have demonstrated that this method is suitable for producing complex sounds that can easily be modified towards compositional ends, using common audio effects such as reverberation, delay lines, waveshapers, filters and bitcrushers.

Conclusion

Interactive sound synthesis mediated through computer networks offers a distinct contribution to the field of network music. The methodology puts forth the idea that sonic artists and technologists can consider networks not only as a means of information transfer, but also as a means of generating sonic content. Several live performance applications and considerations have resulted from the authors’ research, with their performances evolving from early rudimentary implementations to more complex musical compositions. The method also yields diverse sonic content. Because software and hardware choices change the result, composers are able to tune these factors towards stylistically individualized results.

In future work we would like to explore the relationship between spatialization and the transfer of sound across multiple participants and locations. Through this research, we will begin to form an acoustic soundscape work that will be hosted in installation spaces. Additionally, we want to set up a server for performances through which users across the Internet can access, manipulate and reintroduce the sound into our system, thereby enlarging the paradigm of network interactivity in our work.

Bibliography

Alexandraki, Chrisoula and Demosthenes Akoumianakis. “Exploring New Perspectives in Network Music Performance: The Diamouses Framework.” Computer Music Journal 34/2 (Summer 2010) “Musical Constraint Solvers,” pp. 66–83.

Barbosa, Álvaro. “Displaced Soundscapes: A Survey of Network Systems for Music and Sonic Art Creation.” Leonardo Music Journal 13 (December 2003) “Groove, Pit and Wave — Recording, Transmission and Music,” pp. 53–59.

Barbosa, Álvaro, Jorge Cardoso and Gunter Geiger. “Network Latency Adaptive Tempo in the Public Sound Objects System.” NIME 2005. Proceedings of the 5th International Conference on New Interfaces for Musical Expression (Vancouver: University of British Columbia, 26–28 May 2005), pp. 184–187.

Chafe, Chris, Scott Wilson and Daniel Walling. “Physical Model Synthesis with Application to Internet Acoustics.” ICASSP 2002. Proceedings of the 2002 IEEE International Conference on Acoustics, Speech and Signal Processing (Orlando FL, USA: 13–17 May 2002), Vol. IV, pp. 4056–4059.

Gresham-Lancaster, Scot. “The Aesthetics and History of the Hub: The Effects of Changing Technology on Network Computer Music.” Leonardo Music Journal 8 (December 1998) “Ghosts and Monsters: Technology and Personality in Contemporary Music,” pp. 39–44.

Gurevich, Michael. “JamSpace: Designing a Collaborative Networked Music Space for Novices.” NIME 2006. Proceedings of the 6th International Conference on New Interfaces for Musical Expression (Paris: IRCAM—Centre Pompidou, 4–8 June 2006), pp. 118–123.

Kapur, Ajay, Ge Wang, Philip Davidson and Perry R. Cook. “Interactive Network Performance: a Dream Worth Dreaming?” Organised Sound 10/3 (December 2005) “Networked Music,” pp. 209–219.

Mills, Roger. “Dislocated Sound: A Survey of Improvisation in Networked Audio Platforms.” NIME 2010. Proceedings of the 10th International Conference on New Interfaces for Musical Expression (Sydney, Australia: University of Technology Sydney, 15–18 June 2010), pp. 186–191.

Ramakrishnan, Chandrasekhar, Jason Freeman and Kristjan Varnik. “The Architecture of Auracle: a Real-time, Distributed, Collaborative Instrument.” NIME 2004. Proceedings of the 4th International Conference on New Interfaces for Musical Expression (Hamamatsu, Japan: Shizuoka University of Art and Culture, 3–5 June 2004), pp. 100–103.

Rebelo, Pedro and Alain B. Renaud. “The frequencyliator: distributing structures for networked laptop improvisation.” NIME 2006. Proceedings of the 6th International Conference on New Interfaces for Musical Expression (Paris: IRCAM—Centre Pompidou, 4–8 June 2006), pp. 53–56.

Roads, Curtis. Microsound. Cambridge MA: MIT Press, 2004.

Stelkens, Jörg. “peerSynth: A P2P Multi-User Software Synthesizer with new techniques for integrating latency in real-time collaboration.” ICMC 2003. Proceedings of the International Computer Music Conference (Singapore: National University of Singapore, 29 September – 4 October 2003), pp. 319–322.

Stockholm, Jack. “Eavesdropping: Network Mediated Performance in Social Space.” Leonardo Music Journal 18 (December 2008) “Why Live? — Performance in the Age of Digital Reproduction,” pp. 55–58.

Tanaka, Atau, Nao Tokui and Ali Momeni. “Facilitating Collective Musical Creativity.” ACM MM 2005. Proceedings of the 13th Annual ACM International Conference on Multimedia (Singapore: Hilton Hotel, 6–11 November 2005), pp. 191–198.

Traub, Peter. “Sounding the Net: Recent Sonic Works for the Internet and Computer Networks.” Contemporary Music Review 24/6 (2005) “Internet Music,” pp. 459–481.

Vallis, Owen, Dimitri Diakopoulos, Jordan Hochenbaum and Ajay Kapur. “Building on the Foundations of Network Music: Exploring Interaction Contexts and Shared Robotic Instruments.” Organised Sound 17/1 (April 2012) “Networked Electroacoustic Music,” pp. 62–72.

Weinberg, Gil. “Interconnected Musical Networks: Toward a Theoretical Framework.” Computer Music Journal 29/2 (Summer 2005) “Networks for Interdependent Music Performances,” pp. 23–39.

Wolf, KatieAnna E. and Rebecca Fiebrink. “SonNet: A Code Interface for Sonifying Computer Network Data.” NIME 2013. Proceedings of the 13th International Conference on New Interfaces for Musical Expression (KAIST — Korea Advanced Institute of Science and Technology, Daejeon and Seoul, South Korea, 27–30 May 2013), pp. 503–506.

Wright, Matthew. “Open Sound Control: an enabling technology for musical networking.” Organised Sound 10/3 (December 2005) “Networked Music,” pp. 193–200.
