Hear / See

A slow conversation on sound and moving image

The following text is the result of an email exchange between Joseph Hyde and Jean Piché that took place from December 2013 to April 2014.

Jean Piché

Putting music to moving image has historically been far more common than putting images to music. Apart from traditional cinema, where the subservience of music to the needs of narrative has ossified and is unlikely to change meaningfully, the audiovisual arts are reversing the trend and becoming a major expressive form for the 21st century. This raises the question of perceptual hierarchies between ear and eye, for which there are few guideposts. For some, image must intimately imitate / represent sound; for others, a sort of sublimated, quasi-independent side-by-side presentation of both media is more expressive. What is your take on this? Is it the same when you work with dance?

Joseph Hyde, 17 December 2013

This question of perceptual hierarchies between sound and image has formed the backbone of my career. I always knew I was interested in combining the two, and at the start I assumed this meant I should pursue a career in music for film and television. However, I rapidly realized that this world held little interest for me. This was precisely because I felt there was a hierarchy at work between sound and image, and that music was strictly limited to a supporting role. Worse, I found myself ill at ease with this role. It seemed to me (and you hint at this in your question) that music was generally there to provide an emotional shorthand. Because this shorthand needs to be universally understandable, it has to lean heavily on tradition and cliché and veer towards the simplistic.

I don’t think this has changed much, but I do feel that the role of sound in film has changed in some respects. What I’m talking about here is the rise of sound design as a distinct discipline — at the time (late 80s) it was of course already an established field of practice, but I don’t think it was something that people really talked about a great deal (at least if they did, I missed it). I think over the last 25 years sound design has really developed, and that inventive sound design has proliferated across many areas of film and television. I also feel that what I do in my videomusic work is much closer to sound design than film / TV music.

I didn’t discover this at the time though, and simply retreated back into the world of music for two or three years, during which I immersed myself in electroacoustic music through a PhD with Jonty Harrison at Birmingham University, the home of BEAST.

My return to audiovisual work coincided with a shift in technology. Around the mid 90s I saw something happening in video that I’d seen happening in audio technology about 10 years earlier. This was the early appearance of more affordable technologies that might allow video work to escape the confines of dedicated professional practice and institutional contexts — i.e. it allowed video to be made by semi-professionals or amateurs (this is so ubiquitous now that it’s hard to imagine that it wasn’t always the case).

This allowed me to engage with the visual again, but this time on my own terms. The big discovery for me was non-linear editing systems. These had been around for a while (AVID), but a cheaper alternative presented itself in the Media100 system, the cheapest version of which was essentially just a PC with a basic video capture card running a very early version of Adobe Premiere. Encountering this was a real revelation, as I realized that what I was looking at was basically Pro Tools for video rather than audio.

Since then I think I’ve basically been trying to find a way of extending electroacoustic (perhaps one might say musique concrète) techniques to video. This is a task that seems harder the more I work at it — the more I work with the two media the more I become aware of their differences — or more importantly, the differences between the way we see and the way we hear. And I realize that the attempt to treat video in a Schaefferian way involves much more imagination and tangential thinking than I initially supposed.

The idea I’m most interested in is reduced listening, and the attempt to apply a similar principle to the visual domain (I call it visual suspension). The reason this has become something of an obsession for me is that if video material can be treated (and, more importantly, perceived) as an abstract, malleable entity, then we at least open the door to some new hierarchies, some new relationships between sound and image.

Jean Piché, 3 January 2014

I came to visuals in much the same way as you did, but a few years earlier. I did the music for a few films early in my pre-university career, to pay for cheese and baby clothes. To be honest, I don’t think I was very good at it, mostly because, at the time, it hadn’t dawned on me that the main purpose of the music was to go unheard. My lack of enthusiasm was evidently apparent to the producers and, like you, I decided to make my bed elsewhere.

So I sought other views and discovered electronic imagery in the video art of the late 70s (the Vasulkas, Whitney, Al Razutis), and became aware of the DIY community around analogue video processors. Jody Gillerman was part of that scene while studying with Dan Sandin at the Art Institute of Chicago and had built a Sandin Image Processor. We met in a workshop organized by Al Razutis and made a real-time work, Whispers in a Plane of Light,[1. This and other works by Jean Piché can be viewed on his Vimeo page.] in which I fed a Fairlight CMI sampler into the Image Processor via an analogue converter. This was played at one of the first international conferences on digital arts (Digicon ’83) in 1983, to the befuddlement of the attendees. I was hooked on imagery by then, but it took a while before I worked with someone else again.

Most of the work I did with American video artist and theorist Tom Sherman was done in the analogue realm, in a traditional A/B roll editing suite. In those early collaborations, we constantly looked for ways to give each other room for expression, but our collaboration retained the traditional asymmetrical hierarchy of image and sound: Tom made the videotape, and I made the music to go along with it. We coined the term “videomusic” around that time (1987–89) from the inheritors of Whitney and experimental video artists. Later we differentiated this practice from the visual music of the Jordan Belson / Oskar Fischinger persuasion, on the grounds that we worked with real video imagery and that the parametric relationship between audio and video was fortuitous.

The idea of making my own images came when I realized that the workflow involved in making video on fixed media was identical to that of producing musique concrète: collect material in the real world, transform the material with studio apparatus and compose the master work on the final tape.

However, beyond the workflow similarities of audio and video studio work, issues of language — at least an audiovisual language not linked to serving the needs of a narrative — were not readily apparent. The simple act of laying image on sound (and sound on image) seemed a satisfactory elaboration of content. Newness of outlook justifies itself until you are asked to explain why that is so.

More on that later, but you seem to have arrived at the same conclusion: moving images, once you remove their iconic / narrative value, exhibit the same discursive potential as raw sounds in musique concrète. The idea of reduced listening (ignoring causal relationships between the sound made and the sound heard) is useful for understanding this relationship. “Reduced seeing”, so to speak, can apply to moving images in the same way. But, just as Schaeffer only hints at the concept of meaning, organizing coherent discourse outside explicit narrative is a tough thing to explain (and teach).

“Meaning” is one of those marker words in contemporary critical literature. The search for meaning, the emergence of meaning, the pertinence of meaning, the expression of meaning: these are conceptual fixtures in critical theory, yet one never gets to what the “meaning” actually is. Content never gets discussed beyond the claim that its meaning is paramount, but no one knows exactly what that meaning is. Presently, I am trying to reconcile my practice with the idea that meaning sits on a continuum between, at one end, narrative, in which all media collaborate to support a story, and, at the other end, the exclusively sensory experience of pure music. Hence, one has to ask: does music mean something?

Abstract moving imagery is in the same discursive straitjacket as sound-based music. It relies on the internal logic of its materiality, its forms and features to propose an experience of time, whereas the structuring powers of functional harmony in music are akin to the structuring powers of narrative.

Can the (widely variable) rules of sound-based music organization be similarly applied to abstract moving images? If so, is there anything to be learned from the way sonic parameters behave in time? Time. This is pretty much all about time, in the end.

Joseph Hyde, 5 January 2014

I’m very interested in what you say about your coining of the term “videomusic”. I always took this somewhat at face value (not exactly simply “video and music”, but more as a subset of visual music specific to the video medium), but you hint at two more conceptual meanings here, which perhaps you arrived at consecutively.

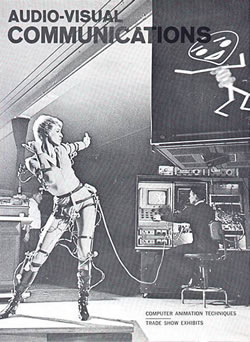

If I understand you correctly, what you perhaps at first meant in using the term was a corollary to “video art”. This seems to me an idea very specific to its time. I’m not sure “video art” still has any currency (as an aside, I now feel the same about “digital art”), but with the benefit of hindsight it seems that videomusic in this sense was underexplored and had (perhaps even has) great potential. I’ve often been struck by the fact that many of the pioneers of video art (the Vasulkas, Nam June Paik, Bill Viola) had musical backgrounds, and I’ve recently become very interested in early video synthesis. The Paik / Abe experiments are quite well known, but it seems video synthesis was a strand of practice through the 70s and 80s (with systems developed by Buchla and EMS, as well as your own work, about which I’d love to hear more) that never quite took off. There are some fascinating “almost-rans” here. The most interesting I’ve come across recently is ANIMAC (a precursor of the Scanimate system), which offered a kind of motion capture in the 60s! (Fig. 2)[2. For more on this system, see the course notes for the Ohio State University’s course (Winter 2007), “A Critical History of Computer Graphics and Animation,” Section 12: “Analog approaches, non-linear editing, and compositing.”]

The second meaning of the term you seem to have evolved is to denote work that uses video material (i.e. material recorded with a camera) as opposed to animation. This is a distinction perhaps analogous to that between musique concrète and elektronische Musik 30 years earlier, and I find it equally interesting. I find it a little strange that visual music should be so commonly equated with animation: in one of the seminal articles defining the term, explicitly so.[3. Brian Evans, “Foundations of a Visual Music,” Computer Music Journal 29/4 (Winter 2005) “Visual Music,” pp. 11–24.] It is surprising how little of the visual music “canon” (if such a thing can be said to exist) comprises camera-sourced footage, although examples can be found, such as Len Lye’s Trade Tattoo (Fig. 3). I think further examples can be found by broadening the search beyond works explicitly denoted as visual music; I’d cite the films of Geoffrey Jones as a case in point. But there aren’t many such early examples. Perhaps film as a medium didn’t lend itself to (or was too expensive for) such manipulation, and video, another magnetic medium just like audio tape, facilitated a new approach.

In this context I can understand the reasons for distinguishing your practice from “visual music”, but I still find the latter term interesting. For me its value is not as a genre definition, but rather as an idea: that the essence of music need not be expressed as sound, but might manifest itself in another medium, specifically the visual domain. I find this fascinating. It seems to me an ideal (somewhat akin to reduced listening, actually), one that is probably unattainable in essence, but no less worthwhile because of that. It’s an idea that rears its head at crucial moments in history: Newton’s Opticks, equating the nature of colour with the musical scale in 1704; Kandinsky striving to attain the “purity” of music as he pushed visual art into abstraction in the early 20th century; Ruttmann, Richter, Eggeling and Fischinger using musical forms as they invented animation slightly later; Belson and Jacobs accidentally kick-starting psychedelic counter-culture with their Vortex concerts in San Francisco in the late 50s; at the same time, the Whitney brothers inventing computer animation as they attempted to evolve a (visual) “digital harmony”; and so on.

Anyway, to answer your question: time. Yes, I agree, it’s all about time. The more I think about it, the more I believe that time is pretty much the only thing that sound and (moving) image have in common. All other “equivalences” (e.g. between colour and pitch) seem to be flawed, or actually meaningless. I think the ways in which we see and hear are fundamentally different, but the way in which we experience time is more universal (although certainly variable, as we all know). Varèse’s definition of music as “organised sound” is well known; of course he is referring to organization in time. One might see sound itself as time, since what differentiates sound from other variations in air pressure is periodicity. Therefore we might modify Varèse’s definition to “organised time”, which might also apply to (time-based) audiovisual media. I think most of the formal compositional principles we apply to sound can also be applied to video or film, but we still need to recognize the differences between the two media. Even here the differences are felt: I think we perceive sonic time and visual time differently.

A lot of this seems to be tied up with space. I’ve had to be clear in my discussion above that I was talking about time-based visual media, because visual media need not involve time at all (a painting or a sculpture, for instance). But of course, without time, sound is not possible. More generally, I think sound and image work completely differently in the spatial domain. Sound is fundamentally immersive (generally, if we hear something, we are immersed in its vibrations; if it’s loud enough or low enough, we can feel it on our skin or even deep in our bodies). Image, on the other hand, always seems to be something outside of ourselves, something that we observe. There is no (exact) sonic equivalent of “the gaze”, or indeed of closing our eyes.

Space seems to be becoming more important in visual media. 3D film and TV may or may not be just another resurgence of an old gimmick, but there are many other areas of practice opening up — expanded cinema, projector mapping, prototypical holography. I know that you’ve been experimenting with spatial projections recently — can you say anything about what that brings to audiovisual practice?

Jean Piché, 18 January 2014

First, let me enthusiastically agree with the point you make about the “composability” of audiovisuals from a musical perspective. Musical expertise can have a direct bearing on the coherence of audiovisual works. While we’d have to restrict this approach to non-narrative visuals, time (measures, rhythmic cells, periodicity) certainly unfolds on a similar plane, in the sense that music and moving images occupy the same temporal ranges. Formal constructs, of any compositional style, also have direct applications across both media (theme and variations vs. a scene or object presented from different points of view?). More on that later, perhaps. I’m sure we’ll also bring back issues of narrative and how it fits into the sort of audiovisuality we are interested in.

I also like your hinting at space as a meeting point for sound and image. That parallel is more complicated, however, since each medium addresses a different perceptual mode. I have recently started experimenting with 3D projection as a way to spatialize visuals in a manner analogous to (but actually quite different from) placing sound in space.[4. See the video documentation of HELIX-Prep, études pour écran torsadé (2012).]

I have been preoccupied with this ever since I started doing video in the 90s. I tried to work outside conventional forms, namely by using three channels of video to form an image that was more “immersive” and filled the peripheral zones of visual perception.[5. See for example Spin (1999–2001), for three SD video channels and stereo sound.] In hindsight, this was an unsatisfactory proposition given that everything still happened in front, with the added premium of a stretched aspect ratio filling the field of view more completely. From that experience, I was never convinced that visual immersion even makes sense as a concept. Only an IMAX system can claim to get close to “optical” immersion. Too bulky. Too pricey. Too filling.

Musing on this a little further, we have no eyes in the back of our head and, in a curious spin on this concept of “gazing” you mentioned, we (humans) are almost forced to focus locally on part of an image in front of us. It is almost impossible to apprehend an image in its entirety, except if the image is very far away and constitutes, in itself, the point of focus. We are always looking at something.

Now, if we completely fill the field of vision with a (projected) image, does this image become reality, since we can’t see anything else? It sounds corny, but good narrative film does this quite handily, even to the point that we don’t care that the action takes place in a cinema. We are still “in the movies”, whether the image fills our entire field of view or not. We are inside “the story” despite the fact that we are not inside the image. The blending experience happens in a sublimated space bearing no relationship to the physical space.

Sound, on the other hand, is immersive by nature but does not require focal attention. One can learn to ignore some sounds in a wavefront and focus on specific signals (especially when physical survival is at stake), but our auditory attention is rarely solicited that way, even when we actively listen to music. We go for the “whole picture”, as it were, for that is where the pleasurable experience lies. The professionally interested auditor will probably listen with a different ear, but we don’t usually listen to a piece of music solely because the trumpet part is very good. Either way, immersion is inherent to sound / music, and the perspective from which we hear sound often changes its meaning and its relative importance to the “scene” we are inhabiting.

In any case, sound and image are always perceived in space. Where artworks are concerned, this space is conventionally static (the cinema, the concert hall, etc.), and in a few cases (sculpture, installations) it offers a variable point of contact. So the idea I am interested in is to engage the viewer / listener in an experience of a space inhabited by objects, auditory and visual, and to allow different perspectives on these objects through the viewer’s active participation. For lack of a better term, let’s call this the audiovisual object. It involves video, audio, physical computing (mechatronics) and the active involvement of the viewer. To some extent, it is close to the fabulous work you have been involved with in the danceroom Spectroscopy project.

Placing an object in space reintroduces immersion of a kind for visuals. Think of a sculpture you walk around to examine different angles. The audiovisual object proposes the same sort of interaction. It is immersion of a different sort but the experience is akin to displacing a perceptual construct in three dimensions.

The audiovisual object adds a layer of complexity by integrating the space in which it is displayed and into which the audience can move. It reintegrates spatiality. You can walk around it, you can get very close to it or move far away from it. The object occupies a finite space that is filled with an acoustic wavefront. Consequently, when you move into this field, you vary your position of hearing as you vary your position of seeing. The viewer / auditor recontextualizes the relationship of sound to image by contributing his / her movement. Admittedly, this re-focusing will work best when the initial (static) audiovisual contract is readily understood, i.e. when the sounds do, in some correlated way, what the visuals are doing.

This can be pushed in unexpected directions. Let’s imagine, for the sake of argument, that 24 channels of sine waves are projected into a spherical loudspeaker dome, in the middle of which a three-dimensional projection surface of arbitrary shape is suspended. The visuals projected on this 3D screen represent the sum signal of the acoustic front. When the viewer / auditor moves in the space, his / her displacement is tracked, and the phase relationships of the wavefront are changed accordingly by varying the frequencies, loudness and phase of the 24 sine waves. As the viewer moves about the space, the audio wavefront changes, and the projected image, seen from a different point of view, also varies.
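The dome scenario can be made concrete with a small numerical model. The Python below is a minimal sketch under loudly stated assumptions, not the system described here. It assumes 24 speakers in three rings of eight on a sphere of 4 m radius, free-field propagation with 1/r spreading and a delay of r / 343 s, and a listener position supplied by some tracker. It samples the summed signal of the 24 sine waves at the listener’s position, which is the waveform the projected image would render. The names `sphere_speakers` and `sum_signal` are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at about 20 degrees C (assumption)


def sphere_speakers(radius: float = 4.0) -> list[tuple[float, float, float]]:
    """Hypothetical layout: 24 speakers in three rings of 8 on a sphere around the centre."""
    speakers = []
    for elevation_deg in (-30.0, 0.0, 30.0):
        elev = math.radians(elevation_deg)
        for i in range(8):
            az = 2 * math.pi * i / 8
            speakers.append((radius * math.cos(elev) * math.cos(az),
                             radius * math.cos(elev) * math.sin(az),
                             radius * math.sin(elev)))
    return speakers


def sum_signal(listener, speakers, freqs, amps, phases, sr=48000, dur=0.01):
    """Sample the summed sine wavefront arriving at a tracked listener position.

    Speaker k contributes amps[k] / r * sin(2*pi*freqs[k]*(t - r/c) + phases[k]),
    where r is its distance to the listener. This is a simple free-field model with
    spherical spreading and propagation delay, not a room simulation.
    """
    n = int(sr * dur)
    out = [0.0] * n
    for pos, f, a, ph in zip(speakers, freqs, amps, phases):
        r = max(math.dist(listener, pos), 0.1)  # clamp to avoid blowing up next to a speaker
        delay = r / SPEED_OF_SOUND
        gain = a / r
        for i in range(n):
            t = i / sr
            out[i] += gain * math.sin(2 * math.pi * f * (t - delay) + ph)
    return out
```

Calling `sum_signal` again whenever the tracker reports a new `listener` position yields a different waveform for each position. That is the coupling between hearing position and seeing position described in the paragraph. Retuning the frequencies, amplitudes and phases in response to movement, as the piece itself would, is left to the caller in this sketch.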

Another idea we are currently developing explores the propagation of energy in a given medium. This project is pure installation and involves video only minimally. It consists of a field of 81 “trees”, each having an audiovisual pod that runs up and down and spins on its “trunk”.[6. See the digital animation demo of the Vertex system in action.] Only one of the trees is directly controlled. The others mimic its movements (up and down plus rotation) with time delays that stretch the further they are from the master tree. This will give rise to variable wave movements that are directly linked to phasing effects of the rotating speakers, which mainly disperse sine waves and impulse trains. The pods themselves are enveloped in four low-resolution LCD screens displaying the waveform of the sounds being broadcast from their speakers. It is a fairly expensive project, but the initial prototype is very exciting and opens up a completely different way of looking at and hearing congruent audiovisuals by adding physical movement to the mix.
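The delay-based propagation through the “forest” can also be sketched. The Python below is a hypothetical model, not the Vertex control software. It assumes the 81 trees stand on a 9 × 9 grid with the master tree at the centre, invents a master gesture (a slow rise and fall plus a steady spin), and delays each follower by a fixed 0.25 s per grid unit of distance from the master. Every name and number in it is an assumption chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    height: float  # pod position along the trunk, 0.0 (bottom) to 1.0 (top)
    angle: float   # pod rotation in radians, 0 to 2*pi


def master_pose(t: float) -> Pose:
    """Hypothetical master gesture: a slow rise and fall plus a steady spin."""
    return Pose(height=0.5 + 0.5 * math.sin(2 * math.pi * 0.2 * t),
                angle=(math.pi * t) % (2 * math.pi))


def forest_snapshot(t: float, n: int = 9,
                    delay_per_unit: float = 0.25) -> dict[tuple[int, int], Pose]:
    """Pose of every tree at time t.

    Assumes the trees sit on an n x n grid (n = 9 gives 81) with the master at the centre.
    Each follower replays the master's gesture delayed in proportion to its distance
    from the master, so a single gesture spreads outward as a wave. Until the wave
    reaches a tree, that tree holds the master's starting pose.
    """
    master = (n // 2, n // 2)
    poses = {}
    for y in range(n):
        for x in range(n):
            delay = delay_per_unit * math.dist((x, y), master)
            poses[(x, y)] = master_pose(max(0.0, t - delay))
    return poses
```

Because the delay grows with distance, trees at the same distance from the master move in unison, and a single gesture radiates outward as a ring-shaped wave. Replacing `master_pose` with sensor readings from the controlled tree would leave the delay logic unchanged.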

A variant of the installation will capture the movements of a viewer and have the forest of trees rise up as s/he approaches its borders and close in on him / her when s/he walks into the middle.

This is all becoming possible because of the increasing sophistication of motion control apparatus (mechatronics). To a point, it makes manifest the possibilities of kinetic holographic abstraction for the ear and the eye.

Do these prospects move music into the role of audiovisual partner in service of a greater good? In this context, I do question the autonomy of music (New Music, in any case). Are we moving towards a kind of concerted multimodal expression, where all the components are singular collaborators in the experience and would not have a life of their own?

How does dance fit into this? Your Spectroscopy project re-introduces theatre into the mix. I am starting to think about my “trees” in the forest as mechanical dancers…

Joseph Hyde, 30 January 2014

I’m going to pick up on a couple of things you’ve mentioned before but I’ve not really addressed. The first is narrative. This is something I’ve actually moved away from more and more in my own work. Of course, I’m taking the term at face value. It can be used in many ways, and I guess if one has a sufficiently loose definition, then it’s something that will always, inevitably, be present in any time-based medium. But if, for the sake of argument, we stick to a simplistic definition somewhat equivalent to “storytelling”, then it’s something that can be found less and less in my work. This is quite strange, because it was something that I used to be particularly interested in. Somewhat perversely, when I was working in what might be seen as the more abstract form of pure music / sound, I explicitly explored ideas of narrative and storytelling, and this can also be found in two of my early (collaborative) audiovisual works, Songlines and Nekyia.[7. These and other video works by Joseph Hyde can be viewed from the “video” tab on his website.] However, many of my more recent audiovisual works actually work quite hard to avoid any such elements. This doesn’t reflect any general aversion to this territory, and I would say that this avoidance of storytelling is confined to my single-screen fixed media audiovisual works. I have specific reasons for this, and if anything they are more cultural than æsthetic. When one is watching a screen (and I do mean a regular old-fashioned rectangle: a TV or cinema screen), one is in a place we have all grown up with now, one of suspension of disbelief, a kind of limbo (this is an overly negative way of putting it, but one can describe it as “couch potato mode”). It’s what you refer to as a particular kind of immersion. I don’t have any problem with this per se, but I have observed that when one is in this “zone”, one stops listening.

I think this is because of the prescribed roles that sound has within traditional film and TV, where it is there to “add value”, to be absorbed subconsciously in support of the emotional (music) or physical (sound design) elements of what’s on screen. If one breaks down traditional narrative elements, then this can be sidestepped: the viewer / listener can be encouraged to remain more present in the moment, and to actively use their ears.

Of course, there are other ways to subvert this “mode” of audiovisual perception, and they are precisely those that you are exploring in your own work (and that I have started to explore, to a lesser extent, in my own). The kind of work you refer to that makes use of multiple screens, 3D surfaces and audiovisual “trees” will definitely facilitate this kind of shift in mindset on the part of audiences.

What I see all of this (very exciting) work as having in common is physicality, and I think this is very important. It’s something I actually see everywhere in relation to technology and media, both in the arts and beyond, and I think it’s very positive. It seems to me that, really since the early days of cinema, a lot of media has been concerned with “the virtual”. I think the 90s idea of virtual reality was merely the culmination of a long history of audiovisual media as a form of escapism, as something other than, alternate to, reality. This is still common in TV and film, and of course in newer media such as gaming. However, a lot of the most interesting new work I see in technology-based creative practice seems to be concerned with bringing media into the real world. There are buzzword areas of practice such as augmented reality, pervasive media, physical computing and wearable technology, but in our area of work your idea of a sculptural approach, of physical audiovisual objects, seems a very fertile one.

This brings me to my work in dance, which I suppose is my way of bringing physicality to my practice. It’s no coincidence that one will indeed find elements of narrative and storytelling in my dance-based work. The dancers, by their very physicality, keep an audience connected to the physical space they are in, and avoid the issues (if they are issues) mentioned above. A theatrical experience is very different to a cinematic one. You asked earlier in our conversation whether working with dance was different to working with fixed media, and for this reason (amongst others), the answer is an emphatic “yes”! I’m very interested in expanding the physicality of my work in this area, though. We’ve started to do this with danceroom Spectroscopy by making a 360-degree immersive dome version, but for me this is only scratching the surface. What I’d really like to be able to do is bring sound and video into the same space as the dancers. It strikes me that this is very much what you are trying to achieve with your audiovisual objects and sculptural approach. I think technology is really opening up new possibilities in this area, so let’s see how far we can run with it!

Jean Piché, 7 April 2014

Difficult to conclude… I hope we get the chance to continue. Presently I am finding it more and more challenging to keep abreast of even a small subset of the work being done in audiovisuals. Every time I open my Facebook feed or one of the various sites dedicated to expanded audiovisuals, I find it a bit overwhelming. It’s as if every artist, whether they root their practice in music, the visual arts or theatre, is now using mediated content (by this I mean processed through or emanating from some sort of machinery). It is taking over not only the personal space of home viewing but also the public performance space. As technology advances, we approach the limits of the “credibility gap”. Will we soon be able to fabricate virtual worlds and experiences that so resemble the real thing that we will be sold composed universes? I should go and read Kurzweil’s treatise on the singularity.

Joseph Hyde, 11 April 2014

I agree — this feels like the beginning of a conversation rather than the end — I’ve no doubt we will continue the dialogue one way or another. I know what you mean about being overwhelmed by the breadth of what’s going on in the audiovisual domain at the moment, and I agree, this is something ubiquitous — not just affecting arts practice but also day to day life. For me it’s a matter of evolution rather than necessarily expansion — as some areas grow, others diminish. I think perhaps traditional forms of “passive viewing” screen-based media are in decline (television in its traditional scheduled channel format, the Hollywood blockbuster), while others are growing. For me this is largely about scale — the YouTube video may be only a few minutes long rather than half an hour or 90 minutes; the most ubiquitous screens around us are now the 4–5-inch screens of our smartphones, but at the same time there is increased interest in expanded cinema and large-scale projector mapping. I think you’re right about the “credibility gap”, but I think we seem to be heading less towards virtual worlds and more towards a blend between the virtual and the physical — augmented reality and pervasive media. One of the reasons I’m so interested in the idea and history of visual music is that it always seems to (re)surface at times of cultural and artistic change. I’ve no doubt that it will have a role to play in the changes we see around us today.
