Sonik Spring

The Sonik Spring is an interface for real-time control of sound that directly links gestural motion and kinæsthetic feedback to the resulting musical experience. The interface consists of a 15-inch spring with unique flexibility, which allows multiple degrees of variation in its shape and length. These are at the core of its expressive capabilities and wide range of functionality as a sound processor.

A spring is a universal symbol for oscillatory motion and vibration. Its power stems from being an object whose shape, length, motion and vibrating kinetic energy can be easily felt and modified. The Slinky is a familiar example of such an object. As an interface, the Sonik Spring draws on the Slinky’s simplicity and appeal. It too can be compressed, expanded, twisted or bent in any direction, allowing the user to combine different types of intricate manipulation. The novelty of the Sonik Spring lies in the unique malleability of its coils: they provide well-balanced resistance, triggering a muscle feedback response that gives the player a strong sense of connectedness with the instrument. This rapport mimics a quality found in acoustic instruments and enables the interface to become a truly responsive musical device, capable of delivering a wide range of expressive musical content (Wanderley and Orio 2002).

Holding and playing the Sonik Spring is meant to feel as if one is touching and sculpting sound in real time. The interaction attainable by the user of this new controller is thus quite intense. The continuous changes in the interface’s physicality, induced by arm, hand and wrist motions, together with overall gestures and visual cues, are all directly translated into a strongly grounded sonic narrative.

Related Work

The Harmonic Driving, part of the Brain Opera, was a pioneering music controller that explored force feedback using a spring-based device. It featured a large compression spring attached to a bike’s handlebar. Changes in the spring’s bending angles steered the alteration of various musical parameters. The amplitude of the bending angles was read with capacitive sensors that detected the relative displacement between the spring’s coils (Paradiso 1999). More recent examples of controllers addressing the same issue are the Sonic Banana (Singer 2003) and the G‑Spring (Lebel and Malloch 2006). The former consists of a small flexible rubber tube with four bend sensors linearly attached to it. The G‑Spring is a heavy, 25-inch close-coil expansion spring, housing light-dependent resistors to measure the amount of light that can slip through it. Both controllers, when respectively bent and extended, map the data from the sensors to sound synthesis parameters.

The Sonik Spring, unlike the controllers described above, uses accelerometers and gyroscopes to measure complex spatial motion. These sensors have proven both highly efficient and very convenient, given their light weight and tiny dimensions. As an interface, it offers greater physical flexibility, since the spring can be manipulated easily and freely to vary its length, overall shape and orientation. Also, because the Sonik Spring is portable, wireless and comfortably played using both hands, it allows a higher degree of control. All of these characteristics make it look and feel like a friendly, performable, “human-scaled” instrument (Fig. 2).

Design

The Sonik Spring features a coil with an unstrained length of 15 inches and a diameter of 3 inches. The spring extends to a maximum length of 30 inches, and when fully compressed shrinks down to 7 inches. It therefore allows a length variation from roughly half its unstrained length to exactly twice that length. When the spring’s varying length is mapped to simple linear changes in musical parameters, these proportions, which cover a ratio of roughly 4:1 between the two extremes, prove to be uniquely intuitive because of a strong correspondence between the visual and auditory results. This is easily illustrated when transposing a sound one octave higher or one octave lower: the spring’s length changes to half its size and twice its size, respectively.
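The octave example above can be checked numerically. The following is a minimal sketch, assuming that the frequency ratio is inversely proportional to the spring’s length relative to its 15-inch unstrained length; the article does not state the exact mapping, and the function names are hypothetical.

```python
import math

REST_LENGTH_IN = 15.0  # unstrained length given in the text
MIN_LENGTH_IN = 7.0    # fully compressed
MAX_LENGTH_IN = 30.0   # fully extended


def playback_ratio(length_in: float) -> float:
    """Assumed mapping: frequency ratio is inversely proportional to length."""
    clamped = min(max(length_in, MIN_LENGTH_IN), MAX_LENGTH_IN)
    return REST_LENGTH_IN / clamped


def transposition_semitones(length_in: float) -> float:
    """Express the same ratio as a transposition in equal-tempered semitones."""
    return 12.0 * math.log2(playback_ratio(length_in))
```

Under this assumption, a length of 30 inches gives a ratio of 0.5 (one octave down) and 7.5 inches gives 2.0 (one octave up). The fully compressed length of 7 inches gives a ratio of about 2.14, slightly more than an octave, which is why the overall range is only roughly 4:1.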

The spring attaches at both ends to hand controller units made of Plexiglas. Each unit houses the orientation sensors and five multi-purpose push buttons. At their edge, the hand controllers connect to circular shaped plates, which are held in the user’s hands in such a manner that the fingers can access the push buttons with ease.

Sensing Motion

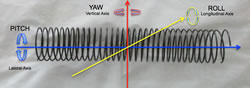

The Sonik Spring uses a combination of accelerometers and gyroscopes to detect spatial motion. Three groups of these sensors are housed within the interface: one in each hand unit and one group at its middle. This set-up captures the extensive possibilities of changes in motion, especially those related to various types of torsion and bending. Each group of sensors consists of a 2‑axis accelerometer to detect pitch and roll, and a 1‑axis gyroscope to detect yaw (Fig. 3).
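As an illustration of how one such sensor group could yield the three orientation angles, here is a minimal sketch. It assumes accelerometer readings expressed in units of g while the unit is held nearly still, and a gyroscope reporting angular rate in degrees per second; the axis assignment and the function names are assumptions, not details taken from the Sonik Spring’s software.

```python
import math


def _clamp_unit(value: float) -> float:
    """Keep a reading inside [-1, 1] so asin() stays defined."""
    return max(-1.0, min(1.0, value))


def tilt_from_accel(ax_g: float, ay_g: float) -> tuple:
    """Estimate (pitch, roll) in degrees from a 2-axis accelerometer.

    Each axis senses the component of gravity along it, so the tilt
    angle is the arcsine of the reading in g.
    """
    pitch = math.degrees(math.asin(_clamp_unit(ax_g)))
    roll = math.degrees(math.asin(_clamp_unit(ay_g)))
    return pitch, roll


def integrate_yaw(yaw_deg: float, rate_dps: float, dt_s: float) -> float:
    """Accumulate yaw from a rate gyroscope: angle += rate * dt."""
    return yaw_deg + rate_dps * dt_s
```

The accelerometer measures tilt directly against gravity, whereas yaw has no gravity reference and must be accumulated from the gyroscope’s rate output, which is consistent with the division of labour described above.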

The amount of expansion or compression of the spring is measured using a small joystick built into the right-hand controller unit. The joystick’s shaft was lengthened to allow it to reach and sit tightly against one of the spring’s coils. Changing the spring’s length forces the shaft of the joystick to move accordingly, giving an accurate measure of the overall length variation.
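A minimal sketch of how the joystick reading could be converted to a length, assuming a 7-bit value (0–127) that varies linearly between two calibrated end points. The calibration values and the function name are hypothetical, and a real joystick is unlikely to be perfectly linear.

```python
def joystick_to_length(cc_value: int,
                       cc_at_min: int = 0,
                       cc_at_max: int = 127,
                       min_in: float = 7.0,
                       max_in: float = 30.0) -> float:
    """Map a joystick reading to spring length in inches (linear assumption).

    cc_at_min / cc_at_max are the readings observed with the spring fully
    compressed and fully extended (hypothetical calibration values).
    """
    t = (cc_value - cc_at_min) / (cc_at_max - cc_at_min)
    t = max(0.0, min(1.0, t))  # ignore readings outside the calibrated range
    return min_in + t * (max_in - min_in)
```

In practice the two calibration readings would be captured once, with the spring held fully compressed and fully extended.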

Five push buttons are placed symmetrically in each hand controller unit. Their positions guide the fingers to hover comfortably over them and ensure that a specific finger triggers each button. The buttons perform multiple tasks, from tape-like transport functions to routing the data from the sensors to be processed.

Gathering Sensor Data

A MidiTron wireless transmitter placed within the right-hand controller collects the information from the ten analogue sensors and ten digital buttons (Fig. 4). The analogue sensor data is formatted as MIDI continuous controller messages, and the on-off states of the buttons as MIDI note-on and note-off messages. This information is sent to a computer running the Max/MSP software, which does all the data processing.
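The message format described above can be decoded on the receiving side as follows. This is a minimal sketch in Python rather than the Max/MSP patch actually used; it relies only on the standard MIDI status bytes (0xB0 for control change, 0x90 for note-on, 0x80 for note-off), and the `SpringEvent` type and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SpringEvent:
    kind: str     # "sensor", "button_down" or "button_up"
    channel: int  # MIDI channel, 0-15
    index: int    # controller number or note number
    value: int    # controller value or velocity, 0-127


def parse_midi(msg: bytes) -> SpringEvent:
    """Decode one 3-byte MIDI channel message into a SpringEvent."""
    if len(msg) != 3:
        raise ValueError("expected a 3-byte MIDI message")
    status, data1, data2 = msg
    kind_bits, channel = status & 0xF0, status & 0x0F
    if kind_bits == 0xB0:  # control change: analogue sensor value
        return SpringEvent("sensor", channel, data1, data2)
    if kind_bits == 0x90 and data2 > 0:  # note-on: button pressed
        return SpringEvent("button_down", channel, data1, data2)
    if kind_bits in (0x80, 0x90):  # note-off, or note-on with velocity 0
        return SpringEvent("button_up", channel, data1, data2)
    raise ValueError("unsupported status byte: 0x%02X" % status)
```

For example, `parse_midi(bytes([0xB0, 1, 64]))` yields a sensor event for controller 1 with value 64. Which controller and note numbers the MidiTron assigns to each sensor and button is not specified here.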

Playing the Sonik Spring

The Sonik Spring can be used in three different “performance modes”: Instrument Mode, Sound Processing Mode and Cognitive Mode.

Instrument Mode

In instrument mode, the Sonik Spring is played as a virtual concertina, using the gestural motions commonly associated with playing this instrument while adding new performance nuances unique to the physical characteristics of the spring.

The sensors of the left hand unit trigger the generation of chords while those of the right hand generate melodic material. The motion of pulling and pushing the spring emulates the presses and draws of virtual bellows using the tone generation technique of an English concertina. The loudness of the tones produced by the instrument is a function of both the absolute length of the spring and the amount of acceleration force exerted to make that length change from its previous position. The rate (speed and acceleration) at which the length changes is derived from the joystick’s displacement and from the combined data of the three accelerometers, and is assigned to changes in loudness using different mapping strategies.

The accelerometer and the five push buttons of the right hand unit are combined to generate the melodic material. The index finger through the little finger operate four buttons that borrow the pitch generating method of a 4‑valve brass instrument, allowing the production of the 12 chromatic tones within an octave. Chords are generated using the five push buttons, the accelerometer and the gyroscope of the left hand controller. The software that generates the chords is largely based on the author’s previous work implemented in the wind controller META-EVI (Henriques 2008).
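To see why four buttons suffice for the 12 chromatic tones, consider the conventional 4‑valve brass scheme, in which valves 1 to 4 lower the pitch by 2, 1, 3 and 5 semitones and combinations add up. The sketch below assumes that conventional scheme; the exact button-to-pitch assignment used in the Sonik Spring is not given here, and `OPEN_NOTE` and the function names are hypothetical.

```python
# Semitones by which each valve lowers the pitch on a typical 4-valve
# brass instrument (valves 1, 2, 3, 4).
VALVE_DROP = (2, 1, 3, 5)
OPEN_NOTE = 72  # hypothetical MIDI note sounded with no button pressed


def semitones_lowered(pressed) -> int:
    """Sum the drops of all pressed valves; `pressed` holds four truthy/falsy flags."""
    return sum(drop for drop, down in zip(VALVE_DROP, pressed) if down)


def note_for_buttons(pressed, open_note: int = OPEN_NOTE) -> int:
    """Return the MIDI note for a given button combination."""
    return open_note - semitones_lowered(pressed)
```

The 16 possible combinations produce every offset from 0 to 11 semitones, some of them in more than one way, so a full chromatic octave is reachable below the open note.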

Sound Processing Mode

In its current implementation, the software uses a granular synthesis engine to play back and process sounds stored in memory (Gadd and Fels 2002).

The strongest correspondence between the auditory and visual domains is achieved by mapping the variation of the spring’s length to different parameters, which can be switched using push buttons on the right hand controller. The most striking use of the length variation is to map it to classic pitch transposition, where both pitch and tempo are altered simultaneously (Video 1). Holding the sound playback and performing scrubbing effects, forwards or backwards, on a short section of a sound by extending and compressing the spring is also perceptually rewarding. Mappings of the left hand accelerometer include the control of a sound’s pitch and playback speed by respectively varying the spring’s lateral and longitudinal axial rotations, that is, its “pitch” and its roll. The gyroscope of the left hand controller, detecting the spring’s yaw, is used to perform panning changes on the sound being processed.
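The yaw-to-panning mapping could be realised in many ways. The following is a minimal sketch using an equal-power pan law, assuming that a yaw of ±90 degrees corresponds to fully right or fully left; both the pan law and the angular range are assumptions, as neither is specified in the text.

```python
import math


def pan_gains(yaw_deg: float, max_yaw_deg: float = 90.0) -> tuple:
    """Return (left_gain, right_gain) for a yaw angle, equal-power law.

    Yaw is normalised to [-1, 1] (-1 = hard left, +1 = hard right) and
    mapped onto a quarter circle so that left**2 + right**2 == 1.
    """
    position = max(-1.0, min(1.0, yaw_deg / max_yaw_deg))
    theta = (position + 1.0) * math.pi / 4.0  # 0 .. pi/2
    return math.cos(theta), math.sin(theta)
```

With the spring pointing straight ahead (yaw 0), both channels receive a gain of about 0.707, so the perceived loudness stays roughly constant as the sound moves across the stereo field.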

The switches of the right hand are used to perform tape-like “transport functions”. Sounds can thus be triggered forwards or backwards, stopped, paused, muted and looped. It is also possible to choose variable loop points and isolate a chunk of an audio file anywhere within its length, with the capability to trigger the loop start point at will, thus creating rhythmic effects.

The sensors of the right hand are used to perform additional functions, such as controlling grain duration and randomizing playback position.

For this performance mode, a vocabulary of a small group of gestures has been implemented. This was done to obtain a simple but effective way to correlate visual to auditory information (Cadoz 1988; Mulder 2000). These gestures are as follows:

- Twisting the hand units symmetrically in opposite directions and with the same force to map changes to a varying filter cut-off frequency;

- Twisting the hand units symmetrically in opposite directions while bending the spring down to map both filter cut-off and resonance frequencies;

- Bending the spring so that it defines a “U” shape, mapping that shape to LFO rate, acting on the pitch being played;

- Bending the spring so that it defines an inverted “U” shape, mapping it to LFO amplitude;

- Shaking the interface along its lateral axis to map oscillation of the centre of mass to the frequency of an oscillator performing amplitude modulation.

Cognitive Mode

An interesting use of the Sonik Spring is as a tool to test different sensorial stimuli. At an immediate and simple level, it can be used to gauge the muscle and force responsiveness of an individual’s upper limbs by directly linking variations in a sound parameter, such as pitch or loudness, to variations of the spring’s length and shape. Through the actions of stretching, compressing, bending and twisting the spring, the Sonik Spring can therefore be used to assess and monitor shoulder, arm and wrist muscle response and overall health. Physical therapy applications of the device can consequently be devised. Further relevant applications can integrate the kinæsthetic response and visual motion of the spring with meaningful auditory feedback, leading to a more complex study of an individual’s level of cognitive perception (Wen 2005).

Collaborations with medical research groups within the State University of New York are currently underway to explore these capabilities.

Conclusion

The Sonik Spring is a versatile interface that integrates kinæsthetic and visual feedback and maps them into the auditory domain. It can be used as a fun and intricate real-time sound processor that engages the user in a complex way. It can also be used as an expressive performance tool based on the paradigm of the concertina, one that can creatively explore new sonic worlds. Current developments of the Sonik Spring, as an interface based on a coil with unique flexibility characteristics, include exploring its potential as a tool for health and physical therapy applications.

Acknowledgments

I want to thank Vitorino Henriques for his work and insights on the hardware component of this project.

Bibliography

Cadoz, Claude. “Instrumental Gesture and Musical Composition.” ICMC 1988. Proceedings of the International Computer Music Conference (Köln, Germany: GMIMIK, 1988), pp. 1–12.

Gadd, Ashley and Sidney Fels. “MetaMuse: A Novel Control Metaphor for Granular Synthesis.” CHI 2002. Proceedings of the 2002 Conference on Human Factors in Computing Systems (Minneapolis MN, 20–25 April 2002), pp. 636–37.

Henriques, Tomás. “Meta-EVI: Innovative Performance Paths with a Wind Controller.” NIME 2008. Proceedings of the 8th International Conference on New Instruments for Musical Expression (Genova, Italy: Università degli Studi di Genova, 5–7 June 2008), pp. 307–10. http://nime2008.casapaganini.org

Lebel, Denis and Joseph Malloch. “The G‑Spring Controller.” NIME 2006. Proceedings of the 6th International Conference on New Instruments for Musical Expression (Paris: IRCAM—Centre Pompidou, 4–8 June 2006), pp. 220–21.

MidiTron Wireless Interface. [Hardware]. http://www.miditron.com

Mulder, Axel G.E. “Towards a Choice of Gestural Constraints for Instrumental Performers.” In Trends in Gestural Control of Music. Edited by Marcelo M. Wanderley and Marc Battier. Paris: IRCAM, 2000, pp. 315–35.

Paradiso, Joseph A. “The Brain Opera Technology: New Instruments and Gestural Sensors for Musical Interaction and Performance.” Journal of New Music Research 28/2 (1999), pp. 130–49.

Singer, Eric. “Sonic Banana: A Novel Bend-Sensor-Based MIDI Controller.” NIME 2003. Proceedings of the 3rd International Conference on New Instruments for Musical Expression (Montréal: McGill University — Faculty of Music, 22–23 May 2003), pp. 85–88.

Wanderley, Marcelo M. and Nicola Orio. “Evaluation of Input Devices for Musical Expression: Borrowing Tools from HCI.” Computer Music Journal 26/3 (Fall 2002) “New Music Interfaces,” pp. 62–76.

Wen, Bingni. “Multisensory Integration of Visual and Auditory Motion.” Unpublished. May 2005. Available at http://www.klab.caltech.edu/cns120/Proposal/past/wen_proposal.pdf [Last accessed 29 January 2013]
