The Use of Electromyogram Signals (EMG) in Musical Performance

A Personal Survey of Two Decades of Practice

A “Media Gallery” featuring works and performances by the author is also published in this issue of eContact!

This text retraces the use of bio-signal sensors as musical controllers over a period of 20 years. The work centres around an instrument originally called the BioMuse, developed by Hugh Lusted and Ben Knapp of BioControl Systems at Stanford University’s CCRMA in the late 1980s. My use of the BioMuse’s electromyogram (EMG) function to track muscle tension to control computer-based sound began in the early 1990s (Lusted and Knapp 1996). The work continues to the present day and, in the ethos of an evolving, yet same musical instrument, the underlying principles of operation remain consistent over time. During this time, however, the system has undergone enormous technical development and the project itself has witnessed an important evolution of musical style.

Hardware

The original BioMuse from 1990 was a two-unit 19” rack mount box (Fig. 1) with an umbilical cord leading to a belt pack with eight EEG/EMG biosignal inputs (Knapp and Lusted 1990). The arm and headbands used medical grade wet-gel electrodes in triplets, with each channel providing a differential signal not dissimilar to a balanced microphone line (+, -, ground). The biosignal consisted of microvolt difference voltages with respect to the reference ground, resulting from subcutaneous neuron impulses that were picked up by the electrodes via non-invasive surface contact with the skin. All signal processing took place in the main unit, which ran on a Texas Instruments TMS320 digital signal processing (DSP) chip, with the final output as a user-programmable MIDI event and control stream. My use of the BioMuse involved four EMG channels: the anterior / posterior muscle pair on the left forearm, the right forearm and the right triceps.

The increasing clock speeds of low-cost microcontrollers and the evolution of wireless technologies resulted in the first of a series of portable biosignal units by BioControl. This included the Wireless Physiological Monitor (WPM) in 2004 (Knapp and Lusted 2005). The WPM used an 8051 family microcontroller in a portable cigarette-sized pack to process two channels of biosignal, one from a gold-plated dry-electrode triplet directly on the unit (Fig. 2), with an auxiliary input channel coming from a wet-electrode arm band. The main arm band also held the microcontroller unit, creating bulk and weight on the arm. The microcontroller transmitted digitized biosignal over a radio frequency (RF) point-to-point cable replacement protocol to an RF receiver / RS232 serial base unit. Running four channels of EMG on two arms required using two independent WPM units, one on each arm, each transmitting to distinct receiver units, connected to separate serial ports on the host computer. Basic analogue signal conditioning and analogue-digital conversion (ADC) took place on the WPM unit, with signal processing offloaded to the host, something that was not possible in the day of the original BioMuse.

The most recent iteration of EMG hardware by BioControl is the BioFlex dry electrode active electronics arm band. Based on a miniature low-power surface-mount Burr-Brown INA2128 instrumentation amplifier chip, the electronics are embedded in the elastic backing behind the gold-plated dry electrode triplet in each arm band, making it lightweight and transparent (Fig. 3). Each arm band is powered by a 5V low current source (typically provided by sensor interface boards) and performs preamplification, filtering and signal conditioning. The output is an analogue signal compatible with 5V control voltage inputs used by common sensor interface hardware such as the Arduino or i-Cube. The sensor interface is worn on the body and communicates over standard wireless communications protocols such as Bluetooth or ZigBee to the host computer.

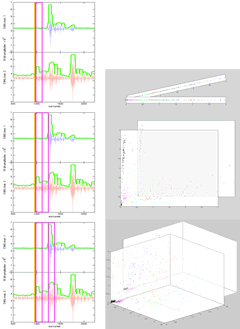

Electromyogram Signal

The electromyogram (EMG) signal is an electrical voltage generated by the neural activity commanding muscle activity. Surface electrodes pick up this neural activity by making electrical contact through the skin. Muscle tension results in higher energy in the biosignal, in the millivolt range and having a frequency range from DC to 2 kHz. When individual motor units (the smallest functional grouping of muscle fibers) contract, they repetitively emit a short burst of electrical activity known as a motor unit action potential (MUAP). The time between successive bursts is somewhat random for each motor unit. When several motor units are active (the timing of the electrical burst between distinct motor units is mostly uncorrelated), a random interference pattern of electrical activity results. Observed at the skin surface by conventional bipolar electrodes, the interference pattern can be modelled as a zero-mean stochastic process. To modulate muscle tension, the number of active motor units is modulated or the average firing rate of active motor units is modulated. In either case, the standard deviation of the interference pattern is altered — it is augmented by an increasing number of active motor units and/or an increase in the firing rate of individual motor units. Thus, the standard deviation of the interference pattern, generally referred to as the EMG amplitude, is a measure of muscular activation level (Cram 1986).
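As a minimal sketch of the amplitude measure described above, the following Python stands in for the interference pattern with zero-mean Gaussian noise and estimates the EMG amplitude as the standard deviation of a window of samples. The Gaussian model, the function names and the activation values are assumptions made for illustration; they are not taken from the BioMuse or from any measured data.

```python
import math
import random

def simulate_emg(n_samples, activation, seed=0):
    """Stand-in for surface EMG: zero-mean Gaussian noise whose
    standard deviation is set by `activation` (an assumed model)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, activation) for _ in range(n_samples)]

def emg_amplitude(samples):
    """Estimate EMG amplitude as the standard deviation of a window.
    For a zero-mean signal this is the same as its RMS value."""
    n = len(samples)
    mean = sum(samples) / n
    return math.sqrt(sum((x - mean) ** 2 for x in samples) / n)

# Two hypothetical activation levels: a relaxed and a tensed muscle.
relaxed = emg_amplitude(simulate_emg(2000, activation=0.05))
tense = emg_amplitude(simulate_emg(2000, activation=0.50))
print(relaxed, tense)
```

The raw samples average out to zero whatever the muscle is doing; only their spread changes with activation, which is why the standard deviation rather than the mean serves as the control signal.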

The EMG signal has been compared in its richness to audio, making audio signal processing and pattern recognition techniques potentially relevant in analyzing the biosignal. However, EMG is ultimately not a continuous signal, but the sum of discrete neuron impulses. This results in an aperiodic, stochastic signal that poses challenges to audio-based signal and information processing.

Despite the fact that the original BioMuse was conceived as a MIDI instrument and that today’s BioFlex is Arduino compatible, the nature of the EMG makes it fundamentally different from basic sensors such as accelerometers or stretch sensors. This results from the character of the signal and the nature of gesture that is picked up, and has a bearing on the kind of gesture recognition and sound synthesis mapping that can be performed on output.

Control Paradigms

The original BioMuse was conceived by Knapp and Lusted as an alternative MIDI controller — a non-keyboard based way to control synthesizers. In this sense, it stretched, but nonetheless conformed to the event-parameter paradigm of MIDI. Musical events are initiated as notes, with associated expressive parameters accompanying the initial event trigger — typically in the form of velocity captured on the keyboard, mapped onto a range of synthesis parameters. Subsequent shaping of sound would take place as continuous data streams would modulate sustained sound synthesis.

This event-based control paradigm presented a challenge for the musical use of EMG as a continuous flow of rich, complex data. The BioMuse performed envelope following, from which note events could be generated by amplitude threshold triggers. Various strategies were developed to use the multiple EMG channels in conjunction with one another to generate series of events whose sustaining sounds were shaped by subsequent muscle gesture. The richness of expressivity then came out of how naturally and how fast events could be generated and in what ways continuous control could directly modulate sound synthesis.
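The envelope following and threshold triggering described here can be sketched in a few lines of Python. This is an illustrative reconstruction and not the BioMuse firmware: the one-pole smoothing coefficients, the separate on and off thresholds (hysteresis, added to avoid retriggering on a noisy envelope) and all names are assumptions.

```python
def envelope(samples, attack=0.5, release=0.05):
    """Rectify the signal, then smooth it with a one-pole filter
    that rises quickly (attack) and falls slowly (release)."""
    env, out = 0.0, []
    for x in samples:
        level = abs(x)
        coeff = attack if level > env else release
        env += coeff * (level - env)
        out.append(env)
    return out

def note_events(env, on_threshold=0.5, off_threshold=0.3):
    """Turn an envelope into (index, "on" | "off") events.
    Two thresholds give hysteresis so a note is not retriggered
    while the envelope hovers around a single level."""
    events, active = [], False
    for i, e in enumerate(env):
        if not active and e >= on_threshold:
            events.append((i, "on"))
            active = True
        elif active and e <= off_threshold:
            events.append((i, "off"))
            active = False
    return events
```

Between an "on" and the following "off", the envelope value itself remains available as a continuous controller, which corresponds to the shaping of sustained sound by subsequent muscle gesture.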

One of the richest sources of sound in this early period was that produced by frequency modulation (FM) synthesis (Chowning 1973), as commercialized by Yamaha in the DX7 synthesizer. While the DX7 could be programmed to produce spectrally rich sounds, continuous control at the top level over basic timbral parameters was more constrained than in analogue synthesizers of the day (Fig. 4a). [1. One voice of frequency modulation synthesis on the DX7 comprised six sinusoidal oscillators which could be configured in one of 32 different arrangements (“algorithms” in Yamaha’s terms), with output oscillators (carriers) being frequency modulated at audio rate by other oscillators (modulators) above them in the stack.] To overcome this, the EMG signal was mapped to MIDI System Exclusive messages specific to the synthesis architecture of the DX7. This allowed direct manipulation of carrier / modulator operator frequencies and modulation indexes of operators in the FM synthesis stack (Fig. 4b). This sort of control would have been non-trivial for traditional MIDI controllers and resulted in an expressive, time- and gesture-varying synthetic sound that corresponded to the modes of gestural articulation idiomatic to the BioMuse.
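To illustrate why the modulation index is such an expressive target, here is a sketch of the simplest case, a single carrier and a single modulator following Chowning's formulation, with a normalized EMG level scaling the index. It does not reproduce the six-operator DX7 architecture or its System Exclusive messages; the frequencies, the `max_index` value and the function names are assumptions chosen for the example.

```python
import math

def fm_sample(t, carrier_hz, modulator_hz, index, amp=1.0):
    """One sample of two-operator FM:
    amp * sin(2*pi*fc*t + index * sin(2*pi*fm*t))."""
    modulation = index * math.sin(2 * math.pi * modulator_hz * t)
    return amp * math.sin(2 * math.pi * carrier_hz * t + modulation)

def render(emg_level, n_samples=441, sample_rate=44100, max_index=8.0):
    """Render a short buffer whose modulation index follows a
    normalized EMG level (0.0 = relaxed, 1.0 = maximum tension)."""
    index = max(0.0, min(1.0, emg_level)) * max_index
    return [fm_sample(i / sample_rate, 440.0, 220.0, index)
            for i in range(n_samples)]
```

At an index of zero the output is a plain sine wave at the carrier frequency. As the index rises, energy spreads into sidebands spaced at the modulator frequency, so one continuous gesture sweeps the timbre from dull to bright.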

The mapping approach used with the DX7 was extended to sample-based synthesis using the Kurzweil K2000R synthesizer and its Variable Architecture Synthesis (VAST) architecture. The VAST system allowed user-configurable synthesis chains that included oscillators, samples, waveshapers, filters and effects in a variety of different signal paths, with all synthesis parameters directly accessible by one of 128 MIDI continuous controllers (Smith 1991) [Fig. 5].

Later, with the arrival of MSP and real-time signal processing in the Max graphical programming environment, these notions were implemented free of commercial synthesizer manufacturer constraints. Sound sources could now arbitrarily be wavetable oscillators or samples, and could be looped or granulated. This fed different forms of frequency shifting, harmonizing, resonant filters and ring modulation, before an output stage that included waveshaping and amplitude modulation. These were all controlled through dynamically changing mappings based on strategies established in the field of New Interfaces for Musical Expression (NIME) over time. These include “one-to-many” mapping, where a single sensor input is mapped to multiple synthesis parameters, or “many-to-one” where multiple sensor inputs might be combined to control a single synthesis parameter (Hunt and Wanderley 2002).
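The two mapping strategies can be shown with a small Python sketch. The parameter names, ranges and the choice of `min` as the combining function are hypothetical; they are not the mappings used in the Max patches described here.

```python
def scale(x, lo, hi):
    """Map a normalized value in [0, 1] (clamped) onto [lo, hi]."""
    x = max(0.0, min(1.0, x))
    return lo + x * (hi - lo)

def one_to_many(tension):
    """One sensor input drives several synthesis parameters at once."""
    return {
        "amplitude": scale(tension, 0.0, 1.0),
        "filter_cutoff_hz": scale(tension, 200.0, 8000.0),
        "grain_density": scale(tension, 5.0, 50.0),
    }

def many_to_one(left_arm, right_arm):
    """Two sensor inputs combine into one parameter. Taking the
    minimum means both arms must be tensed to raise the depth."""
    return scale(min(left_arm, right_arm), 0.0, 1.0)

print(one_to_many(0.5))
print(many_to_one(0.9, 0.2))
```

Swapping the dictionary or the combining function at run time is one simple way to obtain mappings that change dynamically over the course of a piece.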

The long-term goal in the time that I have been performing with EMG has been to arrive at a more sophisticated machine understanding of the gestures represented in the EMG signal. This started as early as 1991 with Michael Lee’s MAXNet neural network object for Max control signals (Lee et al., 1991, 1992). The challenges in using this object with the EMG were the sheer volume of data generated by the BioMuse at the time, as well as the segmentation of the incoming signal and the definition of intermediary layers in the network. This work continued at the Sony Computer Science Laboratory (CSL) with hardware engineer Gilles Dubost and intern Julien Fistre, with whom we created custom hardware and a k-nearest neighbours method to train and subsequently distinguish in real time six different hand gestures using two channels of forearm EMG (Dubost and Tanaka 2002; Tanaka and Fistre 2002). Currently, in the MetaGesture Music project funded by the European Research Council (ERC), we are applying recent advances in machine learning to the EMG and other physiological signals.
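A k-nearest neighbours classifier of the general kind mentioned above can be sketched as follows. This is not the Sony CSL implementation: the feature vectors (one amplitude value per EMG channel), the three gesture labels and every training value are invented for illustration, and the original system distinguished six gestures.

```python
import math
from collections import Counter

def knn_classify(query, training, k=3):
    """Label `query` by majority vote among its k nearest
    training examples, using Euclidean distance."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical training set: (channel 1 amplitude, channel 2 amplitude).
TRAINING = [
    ((0.80, 0.10), "flex"), ((0.70, 0.20), "flex"), ((0.90, 0.15), "flex"),
    ((0.10, 0.80), "extend"), ((0.20, 0.70), "extend"), ((0.15, 0.90), "extend"),
    ((0.80, 0.80), "fist"), ((0.70, 0.90), "fist"), ((0.90, 0.70), "fist"),
]

print(knn_classify((0.75, 0.15), TRAINING))
print(knn_classify((0.85, 0.80), TRAINING))
```

Training amounts to storing labelled examples, and recognition is a distance search, which keeps the method light enough for real-time use on a small number of channels.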

Intention, Effort and Restraint

By using two EMG channels per forearm, on the anterior and posterior muscle groups respectively, we are able to capture directionality in wrist and hand flexing (Fig. 6a). Basic pattern recognition allows us to detect wrist rotation. At first glance, this is not dissimilar to the kind of information we can capture from a 2D accelerometer configured to report XY rotation. The EMG, however, does not report gross physical displacement, but the muscular exertion that may be performed to achieve movement. In this sense, the EMG does not capture movement nor position, but the corporeal action that might (but might not) result in movement (Fig. 6b). The biosensor is not an external sensor reporting on the results of a gesture, but rather a sensor that reports on the internal state of the performer and his intention to make a gesture.
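One simple way to derive directionality from a pair of opposing muscle channels is to compare their amplitudes. The sketch below is an assumption about how such a feature could be computed, not the pattern recognition actually used; the function names and the normalization are hypothetical.

```python
def wrist_direction(anterior, posterior, floor=1e-6):
    """Signed balance between two opposing channels, in [-1, 1].
    Positive values mean the anterior group dominates, negative
    values the posterior group. Returns 0.0 when both are silent."""
    total = anterior + posterior
    if total < floor:
        return 0.0
    return (anterior - posterior) / total

def co_contraction(anterior, posterior):
    """Tension shared by both groups: effort that cancels out
    mechanically and so may produce no visible movement."""
    return min(anterior, posterior)

print(wrist_direction(0.6, 0.2), co_contraction(0.6, 0.2))
print(wrist_direction(0.7, 0.7), co_contraction(0.7, 0.7))
```

The second call shows the case an accelerometer cannot see: both muscle groups are strongly active, the direction is zero, and the wrist may not move at all, yet the co-contraction value reports the effort.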

Muscle tension requires physical exertion. At the same time, free space gesture presents an interesting contradiction in the lack of an object of exertion. As there is no physical object on which to exert effort, the EMG performer finds himself making gesture in a void without tactile or haptic feedback. We propose two solutions to this situation: first, the internalization of effort through restraint, and second, the creation of haptic feedback through the physicalization of sound output. The physicalization of sound is the projection of audio of specific frequencies at amplitudes sufficient to create sympathetic resonance with parts of the body other than the ear and creates a kind of haptic feedback loop through acoustical space of the effort engaged in musical gesture (Tanaka 2011).

On a traditional instrument, restraint on the exertion applied to the instrument needs to be exercised in order to keep the performance within the physical bounds of the instrument. Restraint in the maximum effort needs to be exercised to avoid breaking the guitar string when bending it, or bottoming out or cracking the clarinet reed when blowing. At the opposite extreme, a minimum exertion needs to be performed in order to produce sound. Restraint is needed in sensor systems such as accelerometers to prevent “overshoot”. Poupyrev shows that haptic feedback which renders accelerometer-based interaction more physical improves performance of simple tasks, such as tilting to scroll in a list (Poupyrev and Maruyama 2003). On the EMG, strategies of restraint allow the execution of fluid movement with little muscle tension and the realization of high EMG levels efficiently without awkward exertion.

Multimodality

The independence of gesture from movement and the exertion of effort in the absence of boundary objects make the EMG a unique source of information for musical interaction. This also makes the EMG idiosyncratic and less useful as a general purpose control signal. Interesting combinations can result from the use of the electromyogram in conjunction with complementary sensors. Forearm EMG by itself does not report on gross arm movement or position. We have explored techniques for multimodal interaction to distinguish similar muscular gestures in different points in space (Tanaka and Knapp 2002). In a version of the BioFlex instrument produced at STEIM, we supplemented four channels of EMG with 3D accelerometers embedded in both gloves to detect wrist flexion and tilt (STEIM 2012) [Fig. 7].

From Interpolation to Extrapolation

Expressive gesture / sound mappings typically seek to mimic acoustical instruments by mapping increased input activity to greater output amplitude and brighter timbre. While this successfully models the deterministic and linear aspects of 90% of instrumental practice, the claim here is that the last 10% of expressive articulation on an instrument — be it acoustic, electric or electronic — is nonlinear and less deterministic, where musical performance energy arises out of the risk of possible system failure.

The linear amplitude / timbre response of an instrument is exhibited when it is within the bounds of its operational design. As an instrument gets pushed to its breaking point, nonlinear relationships between articulated input and resulting output begin to emerge: an instrument might require exponentially greater input energy to produce a modest increase in output, as in a brass instrument where air pressure impedance tests the respiratory exertion of the performer. Inverse effects may occur as in the violin producing a brighter, more intense timbre closer to the bridge, but having a fall off in amplitude output that needs to be compensated with greater force on the string. Gestural input might enter a zone where minute changes in input force might create a crossing-the-edge effect from desired tone to disaster. A slight shift in an electric guitar player’s posture might coax the amplifier into a feedback loop; if the performer goes too far into the amplifier’s sound projection field, control of the feedback may be lost. The plucking and bending of a guitar string require simultaneous contact of the plectrum and the meat of the finger in order to generate expressive upper harmonics, at the verge of breaking the string.

This effort to push an instrument to its ultimate output dynamic, or to keep it just within the bounds of breaking up, takes an effort beyond the pure physical resistance of an instrument as boundary object. The final exertion, or throttle, of effort is gauged by the performer in relationship to the suddenly expanded relationship between gesture and resulting sound, making this final zone of expression well suited for articulation through the EMG (Fig. 8). While the EMG may be an apt input signal, a correspondingly rich and nonlinear sound synthesis output technique needs to be found to fully take advantage of its potential. Here the linearity of parameter interpolation approaches (Wessel 1979) breaks down. What is needed, and in some ways, what was modelled in the Sysex approach to FM synthesis on the BioMuse, is a strategy for sound synthesis extrapolation, one where we can plot a physically impossible gestural or sonic point beyond the bounds of system operation (an infinity point), towards which we extrapolate, risking system breakdown.
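The difference between interpolation and extrapolation can be made concrete with a deliberately simple sketch. It only shows the arithmetic of moving past a preset, under the assumption that presets are dictionaries of numeric parameters; it is not a model of the nonlinear behaviour argued for above, and the preset names and values are hypothetical.

```python
def blend(preset_a, preset_b, t):
    """Blend two parameter presets along the line from a to b.
    0 <= t <= 1 interpolates between them; t > 1 extrapolates
    past preset_b, towards values neither preset contains."""
    return {key: preset_a[key] + t * (preset_b[key] - preset_a[key])
            for key in preset_a}

soft = {"mod_index": 2.0, "cutoff_hz": 500.0}
hard = {"mod_index": 4.0, "cutoff_hz": 2500.0}

print(blend(soft, hard, 0.5))   # inside the designed range
print(blend(soft, hard, 1.5))   # beyond the second preset
```

With `t` clamped to the unit interval the instrument can never leave the space its designer mapped out. Letting a high EMG level push `t` beyond 1 reaches parameter values that were never auditioned, which is where the risk of system breakdown enters.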

Bibliography

Chowning, John. “The Synthesis of Complex Audio Spectra by Means of Frequency Modulation.” Journal of the Audio Engineering Society 21/7 (September 1973), pp. 526–534.

Cram, Jeffrey R. (ed.). Clinical EMG for Surface Recordings. Volume 1. Poulsbo WA, USA: J & J Engineering, 1986.

Dubost, Gilles and Atau Tanaka. “A Wireless, Network-based Biosensor Interface for Music.” ICMC 2002. Proceedings of the International Computer Music Conference (Gothenburg, Sweden, 2002).

Hunt, Andy and Marcelo M. Wanderley. “Mapping Performance Parameters to Synthesis Engines.” Organised Sound 7/2 (August 2002) “Mapping / Interactive”, pp. 97–108.

Knapp, R. Benjamin and Hugh S. Lusted. “A Bioelectric Controller for Computer Music Applications.” Computer Music Journal 14/1 (Spring 1990) “New Performance Interfaces (1),” pp. 42–47.

_____. “Designing a Biocontrol Interface for Commercial and Consumer Mobile Applications: Effective Control within Ergonomic and Usability Constraints.” HCI International 2005. Proceedings of the 11th International Conference on Human-Computer Interaction (Las Vegas NV, USA: HCI International, 22–27 July 2005).

Lee, Michael A., Adrian Freed and David Wessel. “Real-Time Neural Network Processing of Gestural and Acoustic Signals.” ICMC 1991. Proceedings of the International Computer Music Conference (Montréal: McGill University, 1991).

_____. “Neural Networks for Simultaneous Classification and Parameter Estimation in Musical Instrument Control.” SPIE 1992. Proceedings of The International Society for Optical Engineering, Vol. 1706.

Lusted, Hugh S. and R. Benjamin Knapp. “Controlling Computers with Neural Signals.” Scientific American 275/4 (October 1996), pp. 82–87.

Marrin, Teresa and Rosalind W. Picard. “The Conductor’s Jacket: A Device for recording expressive musical gestures.” ICMC 1998. Proceedings of the International Computer Music Conference (Ann Arbor MI, USA: University of Michigan 1998), pp. 215–219.

Poupyrev, Ivan and Shigeaki Maruyama. “Tactile Interfaces for Small Touch Screens.” UIST 2003. Proceedings of the 16th Annual ACM Symposium on User Interface Software and Technology (Vancouver, 2–5 November 2003), pp. 217–220.

Smith, Julius O. “Viewpoints on the History of Digital Synthesis.” ICMC 1991. Proceedings of the International Computer Music Conference (Montréal: McGill University, 1991). Revised with Curtis Roads for Cahiers de l’IRCAM (September 1992).

STEIM — STudio for Electro-Instrumental Music. “Atau Tanaka — New BioMuse Demo.” [Video 2:13] Published on STEIM Amsterdam’s Vimeo channel. http://vimeo.com/album/1510821/video/2483259 [Last accessed 04 June 2012].

Tanaka, Atau. “BioMuse to Bondage: Corporeal Interaction in Performance and Exhibition.” In Intimacy Across Visceral and Digital Performance. Edited by Maria Chatzichristodoulou and Rachel Zerihan. Palgrave Studies in Performance and Technology. Basingstoke: Palgrave Macmillan, 2011, pp. 159–169.

Tanaka, Atau and Julien Fistre. “Electromyogram Signatures for Consumer Electronics Device Control.” Sony Computer Science Laboratory Internal Report. 2002.

Tanaka, Atau and R. Benjamin Knapp. “Multimodal Interaction in Music using the Electromyogram and Relative Position Sensing.” NIME 2002. Proceedings of the 2nd International Conference on New Interfaces for Musical Expression (Dublin: Media Lab Europe, 24–26 May 2002), pp. 1–6.

Wessel, David. “Timbre Space as a Musical Control Structure.” Computer Music Journal 3/2 (Summer 1979), pp. 45–52.
