Investigative Studies on Sound Diffusion/Projection

at the University of Illinois: a report on an explorative collaboration

xii 1999

director of the University of Illinois at Urbana-Champaign Experimental Music Studios

and Professor of Music

with graduate composition students:

Cris Ewing, J-C. Kilbourne, Paul Oehlers, Michael Pounds and Ann Warde

Preface

Sound diffusion refers to both an aesthetic and a performance practice in which an additional performer, often referred to as the sound diffusor or projectionist, further enhances the spatial components of an electroacoustic music composition by electronically delivering musical gestures, phrases, or single sounds to different loudspeaker locations surrounding the audience. The key distinction here is "live performance by a diffusion artist or projectionist" rather than sound movement by means of a purely automated system or encoding/decoding process. Live projection of a composition in three-dimensional space can impressively enhance the articulation of a composer's work, presenting the audience with points of variable distance, trajectories and waves, sudden shifts between near and distant stereo fields, and convincing moving sound. If done well, this process adds a co-musical activity that supports and significantly expands the listening and performance experience.

While sound diffusion has played a significant role in certain parts of the world for more than 25 years, only recently (within the past 5 to 8 years) have sound diffusion concerts emerged as an interest and trend within the United States—primarily by those centers that are aware of diffusion practice abroad and that have the financial support to access the necessary equipment. Since the readers of this presentation are informed colleagues, composers and performers, this presentation is not meant to be a tutorial on sound diffusion or projection, but a shared report of our work.

Introduction

The use of multi-channel delivery systems in live performance, and the concern with spatialization, have had an active history at the University of Illinois over the past 35 years. Effective explorative performances were accomplished by composers Salvatore Martirano (with his 24-channel Sal-Mar Construction), Ben Johnston (with his early presentations of simultaneous playback of multiple stereo recordings) and Herbert Brün (with his early four-channel tape performances), and I have pursued multi-channel performance sculpture and works for multi-channel tape with instruments. Approximately three years ago, I began an extended collaborative study with several graduate composition students on multi-channel diffusion aesthetics, techniques and notation. Cris Ewing, J-C. Kilbourne, Paul Oehlers, Michael Pounds and Ann Warde participated in this research. The work continues as an ongoing explorative collaboration in the development of our Discrete Eight System, an eight-channel sound diffusion system used within the University of Illinois Experimental Music Studios. In our investigative work, we found an eight-channel system to be workable and convenient, given the current availability and affordability of equipment. Our system uses three front channels, two side channels and three back channels. All of them are full-range, with response down to at least 30 Hz (thus eliminating the need for subwoofers).

The areas of study included:

• awareness of associated aesthetics

• scientific factors for consideration

• investigation of syntax with further development planned

• selection, design and installation of a system

• notation

• exploration and design of performance practice

Our work began with the question "On what should our involvement with sound diffusion/projection be based:

• a purely scientific paradigm (i.e. physical modeling and analysis-based synthesis techniques for localization, spatialization and simulation of moving sound sources), or

• more of an organic, perceptual and manual performance approach?"

After much discussion, we decided on a combination of the two, since our historical interests are rooted in live performance while also having a strong interest in effective application of existing scientific knowledge and research.

Investigation began with a look at the history of multi-loudspeaker performance. Among others, we revisited the following:

• works by Schaeffer in the 1950s that used five discrete channels of sound recorded onto tape, with four of the five channels assigned directly to a four-channel playback system and the fifth panned live to any of the playback channels by a performer;

• Varèse's Poème électronique;

• Cage's Imaginary Landscape series;

• Brown's Octet;

• Yuasa's Icon;

• Subotnick's Touch;

• Chowning's Turenas;

• Martirano's Sal-Mar Construction performances;

• performances on the G.M.E.B. Gmebaphone (Bourges);

• François Bayle's work with the Acousmonium.

Clearly, composers have approached spatialization in many different ways. Some have recorded in multi-track form, delivering premixed amplitude relationships that simulate three-dimensional activity when played over a multi-channel concert system. Some have distributed stereo recordings in real time over a multi-channel concert system. Some have used predetermined algorithms to pan audio from one channel to the next. Others have used an ambisonic encoding/decoding approach, among other methods.

I. Awareness of associated aesthetics:

Fixed medium delivery versus diffusion

We had lively discussions about the differences between two positions. One favors fixed medium delivery, without the enhancement (or interference) of real-time spatial orchestration during performance. The other favors diffusion. Many people feel that fixed medium delivery, that is to say, direct playback of the recorded work without any performed diffusion, offers the version closest to what the composer composed and realized in the studio. Some critics argue, however, that an electroacoustic composition mixed in the studio to the composer's liking sounds quite different in concert hall performance, because the larger space often negates the carefully constructed balances and images. Many composers new to electroacoustic work complain, upon first hearing their work in a concert hall, that it does not sound as it did in the studio. Proponents of fixed medium delivery are often concerned that their work, with its predetermined spaces, balances and dynamics, may be destroyed by the diffusor or projectionist. A number of composers feel that diffusion/projection would nullify the composed spaces. This would ruin the composer's arduous efforts and, thus, the composition.

Proponents of multi-channel diffusion often argue that a two-loudspeaker system in a concert hall gives only a small part of the audience a sense of the composed spaces and predetermined balances and dynamics. Those listeners are the ones seated in the sweet spot of the system. All other audience members will not hear the intended balances and stereophonic images designed, controlled and mixed in the studio, because of how the two loudspeakers interact with the large hall. Furthermore, proponents of diffusion argue that much electroacoustic music is either intended for or well suited to diffusion performance, especially work in the Schaefferian and acousmatic tradition. The sense is that performance of electroacoustic music in large spaces should use the characteristics of the performance space as part of the listening experience. Through analysis of, familiarity with and understanding of the work, an informed and experienced composer/diffusor/projectionist can present the diffused work as a continuation of the composer's musical intent. Done this way, diffusion can significantly expand the listening experience of that work.

Acousmatic music versus the compositional instrumental model

Music designed in the instrumental tradition, that is to say, note-based music stemming from the common practice Western music tradition, does not lend itself well to diffusion, because its meaning derives primarily from the traditional function of the architecture of notes and rhythm. The instrumental model has traditionally been presented from a fixed position: most instrumentalists are seated in one location during their performance. While melodic and harmonic material can shift from one location to another within an ensemble, the ensemble itself remains stationary.

The term "acousmatic" is attributed to Pythagoras, who supposedly lectured from behind a curtain so that his students would focus on his words without visual distraction. In 1955, writer Jérôme Peignot used "acousmatic" as an adjective when writing about musique concrète, to describe sound that is heard but whose source is hidden. "Acousmatic music," a term introduced by François Bayle in 1974, stems from the musique concrète tradition and refers to a music of images (acoustic or electronic in nature) composed for loudspeaker performance. "Acousmatic" is a philosophy, a compositional approach, a way of listening and a methodology for performance. Composer Francis Dhomont writes, "Acousmatic art presents sound on its own, devoid of causal identity, thereby generating a flow of images in the psyche of the listener." [1]

[1] Dhomont, Francis. "Acousmatic Update." Contact! 8(2), 1995, 49-54.

Notable proponents of diffusion feel that some electroacoustic music, based more on the instrumental model, should not be diffused as its architecture and constricted/framed development does not lend itself to the philosophy, unmeasured organic gestures and gestural evolution of the acousmatic tradition. This is a debatable point that certainly exceeds the scope of this particular presentation.

How many discrete channels and in what configuration?

One of the problems faced by many of us interested in diffusion performance is the wide variation in the number of channels and their configuration from one venue to the next. Several centers regularly present diffusion performances, yet there is no standardization of the number of channels and loudspeakers or of loudspeaker positioning. The Birmingham ElectroAcoustic Sound Theatre at the University of Birmingham, UK, uses at least 24 channels for its concerts. The Acousmonium at the GRM uses an orchestra of 80 loudspeakers. Concordia University normally uses 18 channels, Simon Fraser University often uses 8 channels, and CNMAT [University of California at Berkeley] also uses 8 channels. This lack of standardization, along with other factors, limits performance opportunities as well as the evolution of performance practice.

Obviously, the more channels one has, the more expensive the set-up becomes, and the economics of such a system clearly become a factor. We also wanted to keep a single configuration rather than physically relocating loudspeakers between pieces. Together, these considerations led us to adopt an eight-channel system with a fixed loudspeaker configuration. As indicated earlier, our system uses three front channels (left, center, right), two side channels and three back channels (left, center, right). We settled on eight channels because eight-channel recorders and eight-channel ADC/DAC units are available. We also wanted to move beyond the current 5.1 audio format and its automatic association with the entertainment industry.

Stereophonic sources versus panned monophonic sources

Two main approaches seem to be at the forefront.

• The first presents stereophonic sources channeled to one or more stereo pairs of loudspeakers. This always presents one or more stereo images to the audience and thus moves stereo images within the performance space.

• The second involves multi-channel panning of one or more monophonic sources within the performance space.

One of the significant problems for presenting stereo recordings of electroacoustic music in a concert hall is the common inability to maintain phase coherence of the stereo image for all audience members. Since the playing of a stereo recording in a large hall will not be heard equally by everyone in the audience due to their seating location in proximity to the sweet spot of the sound system, more stereo pairs of loudspeakers would need to be part of the diffusion system in an effort to present a larger percentage of the audience with a more accurate presentation of the stereo image. Additionally, roping off those areas of audience seating that would receive the least balanced presentation would be preferable.
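The sketch below makes the sweet-spot problem concrete. For a listener at a given seat, it computes how much earlier the sound from one loudspeaker of a stereo pair arrives than the sound from the other. The hall geometry is hypothetical. The rule of thumb it is compared against is also only approximate: it holds that arrival differences beyond roughly a millisecond let the precedence effect pull the image toward the nearer loudspeaker. This is a general psychoacoustic figure, not a measurement of ours.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)

def arrival_difference_ms(listener, left_spk, right_spk, c=SPEED_OF_SOUND):
    """Arrival time from the left loudspeaker minus that from the right, in ms.

    Positions are (x, y) in metres. A positive result means the right
    loudspeaker's sound reaches the listener first.
    """
    d_left = math.dist(listener, left_spk)
    d_right = math.dist(listener, right_spk)
    return (d_left - d_right) / c * 1000.0

# Hypothetical front stereo pair 8 m apart.
left, right = (-4.0, 0.0), (4.0, 0.0)
centre_seat = (0.0, 6.0)  # on the centre line, 6 m back
side_seat = (3.0, 6.0)    # 3 m right of the centre line, 6 m back

for name, seat in [("centre", centre_seat), ("side", side_seat)]:
    print(f"{name} seat: {arrival_difference_ms(seat, left, right):+.2f} ms")
```

With these assumed positions, the centre seat shows no difference. The seat 3 m off-centre shows a difference of roughly 9 ms, far beyond that threshold. This is the situation the additional stereo pairs described above are meant to address.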

Live panning of monophonic sources within a multi-channel system avoids the problem of maintaining phase coherence. It is accomplished by straightforward panning of point sources from one loudspeaker location to another. An audience may perceive this more easily as spatial activity, but the effectiveness of a stereophonic image is lost. Software has been developed over the past several years to generate phase information that makes panned monophonic sources appear as spatial activity within a stereo field. Very few such applications, however, exist for live diffusion performance.
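Panning a mono point source from one loudspeaker to the next can be sketched as pairwise equal-power panning around a ring. Only the two loudspeakers adjacent to the source's direction receive signal. Two assumptions simplify the sketch. First, it treats the eight loudspeakers as equally spaced at 45-degree intervals, which is a hypothetical idealisation, since a real rectangular D-8 layout is not equally spaced. Second, it uses a sine/cosine pan law as an assumed choice.

```python
import math

# Hypothetical idealised azimuths (degrees clockwise from front centre).
RING = [("FC", 0), ("FR", 45), ("SR", 90), ("BR", 135),
        ("BC", 180), ("BL", 225), ("SL", 270), ("FL", 315)]

def ring_pan(azimuth_deg):
    """Pairwise equal-power panning of a mono point source around the ring.

    Only the two loudspeakers on either side of the azimuth receive signal,
    and their squared gains always sum to 1.
    """
    az = azimuth_deg % 360.0
    gains = {name: 0.0 for name, _ in RING}
    for i, (name, angle) in enumerate(RING):
        next_name, next_angle = RING[(i + 1) % len(RING)]
        span = (next_angle - angle) % 360.0   # 45 degrees in this layout
        offset = (az - angle) % 360.0
        if offset < span:
            frac = offset / span              # 0 at this speaker, 1 at the next
            gains[name] = math.cos(frac * math.pi / 2)
            gains[next_name] = math.sin(frac * math.pi / 2)
            break
    return gains

# Step a source clockwise around the audience in 30-degree increments.
for az in range(0, 360, 30):
    active = {k: round(v, 2) for k, v in ring_pan(az).items() if v > 0}
    print(az, active)
```

Sweeping the azimuth over time produces the circular point-source motion described above. Because each position is a weighted copy of the same mono signal, no stereo image is preserved.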

What is spectromorphology?

Denis Smalley (City University, Northampton Square, London) coined the term spectromorphology to describe "the interaction between sound spectra and the ways they change and are shaped through time." This conceptualization is particularly helpful for electroacoustic music that is concerned more with spectral qualities and their temporal evolution. It is not helpful for music based more on the traditional compositional model, where specific notes play a primary role, or for music organized metrically. Smalley further states that "spectromorphological thinking is primarily concerned with music which is partly or wholly acousmatic, that is, music where (in live performance) the sources and causes of the sounds are invisible, a music for loudspeakers alone, or music which mixes live performance with an acousmatic, loudspeaker element." [2] He goes into much further conceptual detail in his article "Spectromorphology: explaining sound-shapes," published in the August 1997 issue of Organised Sound, which I highly recommend.

[2] Smalley, Denis. "Spectromorphology: explaining sound-shapes." Organised Sound 2(2), 1997, 107-126.

Is there a difference with the terminology: spatialization, projection and diffusion?

The scientific community tends to embrace the term spatialization. By contrast, the choice between sound diffusion and sound projection has become a rather controversial issue within parts of the electroacoustic community. François Bayle used the term projection in 1974 to describe a music of images shot and developed in the studio and projected in a hall like a film. Bayle stated, "with time, this term, both criticized and adopted, and which at first may strike one as severe, has softened through repeated use within the community of composers, and now serves to demarcate music on a fixed medium..." [3]

[3] Bayle, François. Musique acousmatique, propositions... positions. Buchet/Chastel-INA-GRM, 1993, 18.

Jonty Harrison prefers the term sound diffusion. As he has written, "We now know, that whatever the source point, sound does not travel in a tightly controllable beam but diffuses within a given space." [4]

[4] Harrison, Jonty. "Sound, space, sculpture: some thoughts on the 'what', 'how' and 'why' of sound diffusion." Organised Sound 3(2), 1998, 117-127.

While this issue will remain contentious, we prefer the term projection, as it carries a sense of premeditated deliberateness. Too often we have witnessed electroacoustic music performances at conferences and festivals with little rehearsal time available. In these performances, the composer or an engineer diffuses the works over a multi-channel system, often with the result of poorly improvised diffusion. Add to this the current preoccupation with multi-channel theatre/cinematic sound and home theatre sound systems. Together, these have often led young composers to treat surround sound as an effect rather than a composed, artistic performance medium. We advocate composed diffusion/projection: a contextual understanding of the music, an awareness of the aesthetic, and the development of a methodology and performance practice.

II. Scientific factors for consideration:

Our past investigation, as well as the instruction in diffusion/projection we have designed for the future, includes the following areas:

• ear physiology and Head-Related Transfer Functions

• sound source characteristics

- perceived loudness

- loudness, which is strongly influenced by the frequency and spectral composition of the sound, as demonstrated by the Fletcher-Munson research

• host space characteristics

- size of the space / reflective properties

• factors for determining lateral localization

- interaural time difference - the delay between a sound's arrival at the nearer ear and at the farther ear, caused by the additional distance it must travel around the head

- interaural intensity difference - the comparison of perceived levels between the left and right ears, most effective at higher frequencies, where the head casts an acoustic shadow

• factors for determining a sound's distance from the observer

- changes in perceived amplitude and spectral composition, together with a greater proportion of reflected sound

• positional shift cues

- use of the Doppler effect

• ambisonic theory

- Ambisonics is an extension of the M-S stereo recording technique developed by Alan Blumlein in the 1930s. It involves four channels of information: three figure-of-eight signals along mutually perpendicular axes and one omnidirectional signal.
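Several of the cues listed above reduce to short, well-known formulas. The sketch below collects four of them:

• Woodworth's spherical-head approximation for interaural time difference;
• the free-field inverse-square law for distance;
• the Doppler shift for a moving source heard by a stationary listener;
• Sabine's reverberation-time estimate for a host space.

The constants (speed of sound, head radius) and the example hall with its absorption coefficients are assumptions for illustration. These textbook approximations are neither models we derived nor measurements of our rooms.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly quoted average adult value (assumed)

def itd_woodworth(azimuth_deg, r=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Interaural time difference (s) for a distant source, using Woodworth's
    spherical-head approximation ITD = (r / c) * (theta + sin(theta)).
    Intended for azimuths from 0 (straight ahead) to 90 degrees (to one side).
    """
    theta = math.radians(azimuth_deg)
    return (r / c) * (theta + math.sin(theta))

def level_change_db(d_ref, d_new):
    """Change in direct-sound level (dB) under the free-field inverse-square
    law: about -6 dB per doubling of distance. Reflected sound is ignored.
    """
    return -20.0 * math.log10(d_new / d_ref)

def doppler_frequency(f_source, v_toward, c=SPEED_OF_SOUND):
    """Frequency heard by a stationary listener from a moving source.
    v_toward > 0 means the source is approaching; < 0 means receding.
    """
    return f_source * c / (c - v_toward)

def sabine_rt60(volume_m3, surfaces):
    """Reverberation time (s) by Sabine's formula RT60 = 0.161 * V / A,
    where A sums area (m^2) times absorption coefficient over all surfaces.
    """
    return 0.161 * volume_m3 / sum(area * alpha for area, alpha in surfaces)

print(f"ITD at 90 degrees: {itd_woodworth(90) * 1e3:.2f} ms")
print(f"Level change from 2 m to 4 m: {level_change_db(2, 4):.1f} dB")
print(f"440 Hz source approaching at 20 m/s: {doppler_frequency(440, 20):.1f} Hz")

# Hypothetical 20 m x 15 m x 8 m hall with invented absorption coefficients.
hall = [(20 * 15, 0.60),            # floor with seated audience
        (20 * 15, 0.10),            # ceiling
        (2 * (20 + 15) * 8, 0.05)]  # walls
print(f"Estimated RT60: {sabine_rt60(20 * 15 * 8, hall):.2f} s")
```

With these assumptions, the maximum ITD comes out at about 0.66 ms. This small figure is why simulating lateral position over loudspeakers relies mainly on amplitude balance rather than on delays the ear could never receive intact in a hall.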

III. Investigation and formation of syntax:

Understanding sound diffusion/projection requires a specific language and an awareness of associated terms, perceptions and simulation procedures. During long conversations among the members of our collaboration, we soon realized something. We were using many of the same terms, but each of us understood them differently. We found it necessary to take the time to define many of the basics. We believe we have only begun to scratch the surface of syntax formation. Even so, this work gave us a starting point for a shared understanding of diffusion/projection.

We began by defining the following terms:

projection plane - an abstraction of a flat surface whose orientation is defined by the eight loudspeakers of a D-8 sound projection system. These form four borders:

• a front border, running from Front Left through Front Center to Front Right;
• a back border, running from Back Left through Back Center to Back Right;
• a left side border, running from Front Left through Side Left to Back Left;
• a right side border, running from Front Right through Side Right to Back Right.

Perceived sound sources can be described as having a location in this plane or outside its borders. The purpose of this plane is to describe the perceived motion that takes place within three-dimensional space. This motion may be three-dimensional to some degree, but D-8 sound projection can control only two dimensions. Those two are therefore the only ones discussed.

spatialization - the perception of a sonic environment and/or space. This perception can be created through simulation of the reflective properties of a host space, as well as of how a specific sound source is reflected within that environment. (These simulated reflective properties might include early reflections, reverberation time and the frequency characteristics of the reflected sound.)

localization - the perception of a sound as having a definite location within an environment. In the case of sound projection, this location can be described in terms of the projection plane and its borders. Localization is created through simulation of distance and direction by manipulation of relative amplitude balance among multiple loudspeakers, relative balance of direct and reflected sound, interaural time differences, and frequency characteristics.

translation - the perceived movement of the location of a sound source, which can be described as a vector in or outside of the projection plane. Translation is accomplished by simulating a change, over time, of the distance or direction of the sound source with respect to the projection plane. Parameters to change might include amplitude, frequency characteristics and balance of direct and reflected sound.
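Localization and translation, as defined above, both rest on the relative amplitude balance among loudspeakers. One way to illustrate this numerically is distance-based amplitude panning (DBAP). In DBAP, each loudspeaker's gain falls off with its distance from a virtual source placed in the projection plane. DBAP is a generic technique swapped in here for illustration, not the method we use at the faders. The coordinates assigned to the D-8 loudspeakers, the 6 dB rolloff and the small blur term are all hypothetical choices.

```python
import math

# Hypothetical, idealised D-8 coordinates in the projection plane:
# x runs left (-1) to right (+1), y runs back (-1) to front (+1).
D8_SPEAKERS = {
    "FL": (-1.0, 1.0), "FC": (0.0, 1.0), "FR": (1.0, 1.0),
    "SL": (-1.0, 0.0),                   "SR": (1.0, 0.0),
    "BL": (-1.0, -1.0), "BC": (0.0, -1.0), "BR": (1.0, -1.0),
}

def dbap_gains(x, y, rolloff_db=6.0, blur=0.1, speakers=D8_SPEAKERS):
    """Distance-based amplitude panning for a virtual source at (x, y).

    Gains follow g_i = k / d_i**a and are normalised so that sum(g_i**2) == 1,
    keeping total power constant wherever the source is placed.
    """
    a = rolloff_db / (20.0 * math.log10(2.0))  # distance exponent from rolloff
    # The blur term keeps every distance above zero, even when the source
    # sits exactly on a loudspeaker.
    dist = {name: math.sqrt((x - sx) ** 2 + (y - sy) ** 2 + blur ** 2)
            for name, (sx, sy) in speakers.items()}
    k = 1.0 / math.sqrt(sum(d ** (-2.0 * a) for d in dist.values()))
    return {name: k / d ** a for name, d in dist.items()}

# A source halfway between the centre of the plane and the front-right corner.
gains = dbap_gains(0.5, 0.5)
for name, g in sorted(gains.items(), key=lambda item: -item[1]):
    print(f"{name}: {g:.3f}")
```

Changing (x, y) gradually over time turns the same calculation into a translation. A larger rolloff focuses the source on its nearest loudspeakers, while a smaller one spreads it across the system.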

Projection Performance Activities:

pan - the lateral translation of a sound source through or beyond the projection plane. This is accomplished through simulation of changes in relative amplitude balance between two loudspeakers.

roll - the longitudinal translation of a sound source through or beyond the projection plane. This is accomplished through simulation of changes in relative amplitude balance between two loudspeakers.

cross - the diagonal translation of the sound source through or beyond the projection plane. This is accomplished through a process which combines pan and roll.

contouring - the act of performing a translation, amplitude change, timbral shift, or combination of these to a PHRASE/GESTURE in support of the characteristic shape of the phrase/gesture.

ornamenting - the act of supportively embellishing any one or combination of the perceived location, amplitude or timbral characteristics of a GESTURE.

articulating - the act of accentuating a single EVENT by exaggerating its existing location, amplitude, or timbral characteristics.
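The pan, roll and cross activities defined above each amount to changing the relative amplitude balance between loudspeakers over time. The minimal sketch below assumes an equal-power (sine/cosine) pan law. This is a common choice, but not necessarily what any given console's panners implement. Pan and roll each yield a pair of gains, and cross multiplies them across the four corner loudspeakers (FL, FR, BL, BR). The function names and the restriction to the corner loudspeakers are hypothetical simplifications of the D-8 layout.

```python
import math

def equal_power_pair(position):
    """Gains for two loudspeakers; position 0.0 = first only, 1.0 = second only.

    cos/sin gains keep g1**2 + g2**2 == 1, so perceived loudness stays
    roughly constant as the sound moves between the two loudspeakers.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must lie between 0 and 1")
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)

def pan(x):
    """Lateral translation: x = 0 is left, x = 1 is right."""
    left, right = equal_power_pair(x)
    return {"L": left, "R": right}

def roll(y):
    """Longitudinal translation: y = 0 is front, y = 1 is back."""
    front, back = equal_power_pair(y)
    return {"F": front, "B": back}

def cross(x, y):
    """Diagonal translation: combine pan and roll over the four corners."""
    p, r = pan(x), roll(y)
    return {
        "FL": r["F"] * p["L"], "FR": r["F"] * p["R"],
        "BL": r["B"] * p["L"], "BR": r["B"] * p["R"],
    }

# A cross from Front Left (0, 0) to Back Right (1, 1) in five steps.
for step in range(5):
    t = step / 4
    print(t, {name: round(g, 3) for name, g in cross(t, t).items()})
```

Because each factor is an equal-power pair, the four corner gains of a cross also have squared values summing to 1. The diagonal move therefore keeps a steady overall level without further compensation.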

The compositional elements of an electroacoustic or instrumental/vocal work may be described as having the following hierarchy, where the first listed is the largest element, and the last is the smallest:

*The significance and/or character of a phrase, gesture or event must be determined with respect to the contextual characteristics of the composition in question (it is realized that phrase, gesture, and event could have similar or the same meaning depending on context). This is accomplished through critical analysis, understanding and supportive interpretation of the composition by the diffusor/projectionist.

IV. Selection, design and installation of a system:

As mentioned earlier, we found an eight-channel system to be workable, affordable and convenient, based on currently available commercial equipment. The fashionable quest for nomenclature brevity led us to refer to our Discrete Eight System as the D-8 System. The D-8 System was installed in a room within the Experimental Music Studios designed primarily for diffusion/projection exploration and rehearsal.

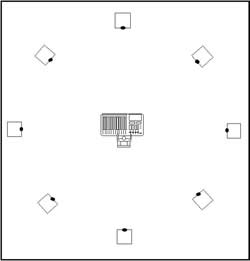

Initial research indicated that three different loudspeaker layouts are in common use for eight-channel systems. The first is a circular pattern (see figure one). It seems effective if one is working primarily with mono sources panned from one loudspeaker to the next, without a fundamental concern for maintaining stereo imaging.

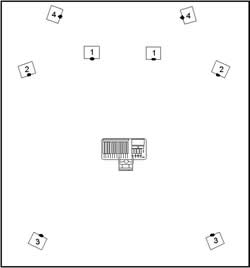

The second pattern (see figure two) is one designed to more effectively present stereo images to an audience, assuming the majority or all of the sonic material is in stereo form. Loudspeaker pair #1 presents a well-focused stereo image to those audience members seated closer to the front. Loudspeaker pair #2 allows for a more effective sense of wider lateral activity and presents a focused stereo image to audience members seated behind the center. Loudspeaker pair #3 is designed to present sonic material from behind the audience, and loudspeaker pair #4 is designed to present distant sonic material.

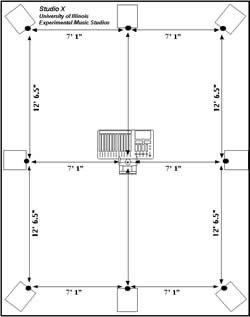

The third approach (see figure three) works with three stereo pairs of loudspeakers (front, sides and back) along with front and back center-fill loudspeakers. This approach seems to have evolved from the theatre sound model. It allows stereo images to be maintained and moved from front to back, while also allowing mono signals to be panned in a circular fashion. We elected to work with this third approach because it seems to combine aspects of the other two. When working with stereo images, a mono mix of the two channels can be sent to the center-fill loudspeakers if desired.
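The routing described above can be sketched as a small function applied to each stereo sample frame. In this routing, a stereo image is placed on the front, side or back pair, and a mono mix can be sent to the center-fill loudspeakers. The channel labels, the fader levels and the choice to average the two channels for the mono fill are assumptions for illustration. A console or diffusion patch would set these by ear.

```python
# Output order for the eight D-8 channels (hypothetical labels).
D8_ORDER = ["FL", "FC", "FR", "SL", "SR", "BL", "BC", "BR"]

# Which loudspeakers carry the left and right halves of each stereo pair.
STEREO_PAIRS = {"front": ("FL", "FR"), "side": ("SL", "SR"), "back": ("BL", "BR")}
CENTRE_FILLS = {"front": "FC", "back": "BC"}

def route_stereo_frame(left, right, pair_levels, centre_levels):
    """Map one stereo sample frame onto the eight D-8 outputs.

    pair_levels:   fader level (0..1) per stereo pair, e.g. {"front": 1.0}
    centre_levels: fader level (0..1) per centre fill, e.g. {"back": 0.5}
    """
    out = dict.fromkeys(D8_ORDER, 0.0)
    for pair, level in pair_levels.items():
        l_spk, r_spk = STEREO_PAIRS[pair]
        out[l_spk] += level * left
        out[r_spk] += level * right
    mono = 0.5 * (left + right)  # average the two channels for the fill
    for fill, level in centre_levels.items():
        out[CENTRE_FILLS[fill]] += level * mono
    return [out[name] for name in D8_ORDER]

# Stereo image mostly in front, a quieter copy behind, some front centre fill.
print(route_stereo_frame(0.8, 0.2, {"front": 1.0, "back": 0.5}, {"front": 0.5}))
```

Averaging rather than summing the two channels keeps the mono fill from exceeding the level of the louder stereo channel when the channels are strongly correlated. Whether that is the right balance depends on the work and the hall.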

Two-channel sources (CD, DAT or direct audio card outputs) are split into multiple outputs by custom active signal divider/line amplifiers. These outputs then feed multiple mixer inputs, allowing either prepositioning of specific events to the output busses or different equalization settings. Auxiliary sends can pass signals to multiple digital audio processors. Their outputs are likewise split by custom active signal divider/line amplifiers and returned to separate mixer input channels (rather than the aux returns, to allow further equalization). This gives us the opportunity to work with digitally processed environments where appropriate. Due to our modest budget, we are currently using Yamaha Waveforce WF115 concert loudspeakers powered by passively-cooled Stewart World 600 amps. Our console is a Yamaha GA24/12. A custom-designed, AC-powered stopwatch is positioned directly above the mixing console. It has a dimmable LED readout and a foot pedal for starting and stopping, and it has assisted performances in which specific timings matter.

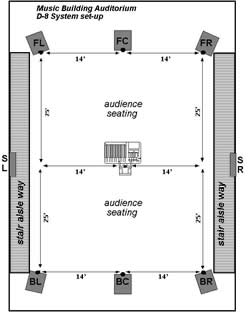

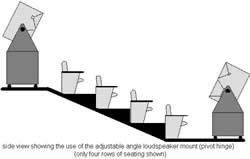

A second D-8 System was designed and purchased for concert use, primarily in our music building auditorium, which seats 250 (see figure four). Several problems confronted us immediately. First, the system could not be permanently installed in the auditorium. The room is an open-access facility used for classes, demonstrations, meetings and concerts, and heavy scheduling limits set-up time to one hour. Second, the room is a proscenium design with audience seating raked at a 26-degree angle and with aisle stairways on both sides. Fire code does not permit the aisles to be blocked.

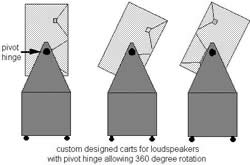

We needed a way to roll in a system without having to run long lengths of cable. We decided again to use Yamaha Waveforce WF115 concert loudspeakers powered by passively-cooled Stewart World 600 amps. The power amps were installed in an adjoining projection booth, with the system inputs run through conduit to a wall panel on the back wall of the auditorium. Permanent loudspeaker lines were installed from the power amps through conduit to the locations in the auditorium where the loudspeakers would be positioned. Short jumper cables were made to connect the loudspeakers to the wall connectors. We designed loudspeaker carts (with large rubber wheels) that mount the loudspeaker cabinet on a pivot hinge (see figure five). These allow us to move six of the loudspeakers into place quickly and to angle the cabinets to match the rake (see figure six).

We solved the problem of the side loudspeakers by designing two loudspeaker cabinets 7 inches deep, 48 inches wide and 36 inches high. They use JBL components with frequency response characteristics similar to those of the Yamaha loudspeakers. These cabinets offer full frequency range down to 25 Hz. Each has a steel back mount that attaches to a corresponding steel mount permanently fixed to a side wall of the auditorium (and painted to match the wall color).

The mixing console I/O was connected easily to wall connections via a snake for access to the power amp inputs.

V. Notation:

We feel that a projection score assists the performer and reduces the amount of large-scale improvisation. The performer need not follow every notated moment in the score. Rather, it serves as a basic road map reflecting salient aspects of the projectionist's performance design. For our purposes, we determined that eight-channel projection information (varying amplitude levels and channel assignments over time) is best displayed as a graph:

• eight vertical channel columns, arranged as three stereo pairs plus two single channels for front center and back center;
• a horizontal amplitude axis within each column;
• a vertical time axis progressing from bottom to top.

Black graphic shapes indicate the amplitude contour for each channel: the thicker the shape, the higher the amplitude, and vice versa. Comments are often written in the vertical margins to alert the projectionist to specific cues or types of articulation. To date, this approach to notation has worked well for us in performance. An example of our notational approach (see figure seven) is included at the end of this report.
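The graphic score described above is, in effect, eight amplitude-versus-time envelopes. The minimal sketch below shows how such a score might be held as data, for example to drive a practice display. It assumes each channel is a list of (time in seconds, level 0..1) breakpoints, with straight-line interpolation between them. The channel names and breakpoint values are hypothetical. They mirror only the structure of the notation, not any actual score of ours.

```python
from bisect import bisect_right

# Hypothetical projection score: per-channel breakpoints (seconds, level 0..1).
SCORE = {
    "FL": [(0.0, 0.0), (2.0, 0.8), (6.0, 0.8), (8.0, 0.0)],
    "FR": [(0.0, 0.0), (2.0, 0.8), (6.0, 0.8), (8.0, 0.0)],
    "BC": [(6.0, 0.0), (8.0, 1.0), (12.0, 0.0)],
}
CHANNELS = ["FL", "FC", "FR", "SL", "SR", "BL", "BC", "BR"]

def level_at(breakpoints, t):
    """Linearly interpolate one channel's level at time t (0 outside its span)."""
    if not breakpoints or t < breakpoints[0][0] or t > breakpoints[-1][0]:
        return 0.0
    times = [time for time, _ in breakpoints]
    i = bisect_right(times, t)        # times[i - 1] <= t < times[i]
    if i == len(breakpoints):         # t equals the final breakpoint time
        return breakpoints[-1][1]
    (t0, v0), (t1, v1) = breakpoints[i - 1], breakpoints[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def fader_snapshot(score, t):
    """Levels for all eight channels at time t; unnotated channels stay at 0."""
    return {ch: level_at(score.get(ch, []), t) for ch in CHANNELS}

# Seven seconds in: the front pair is fading out while back centre fades in.
print(fader_snapshot(SCORE, 7.0))
```

Storing breakpoints rather than sampled values keeps the data as sparse as the drawn shapes. Playback can step through time and read a snapshot of all eight channels at each moment.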

Closing

As I attempt to bring this report to a close, I wish to acknowledge and offer my appreciation to graduate students Cris Ewing, J-C. Kilbourne, Paul Oehlers, Michael Pounds and Ann Warde, who were part of this initial collaboration. I also wish to thank Kevin Austin and Jonty Harrison for their input and insight over the recent years with regard to our mutual interests. We are aware that we have only begun to explore the basics of diffusion/projection, and as our investigations continue, we are rapidly realizing there is so much more involved for the composer, projectionist and the listener with respect to the fragile art of sound diffusion/projection. While careful use of such systems can spectacularly enhance the organic structure, space and performance of electroacoustic music, I also caution those new to diffusion to guard against whim and effects. Poor improvisation at the faders can easily subvert the musical integrity of a work and that of the composer. Remember, sound diffusion/projection is an art and a performance requiring awareness, analysis, sensitivity and artistry.

References

Austin, K. 1995. On Sound Projection. Contact! 8(2):55-67.

Bayle, F. 1993. Musique acousmatique, propositions...positions. Paris: Editions Buchet-Chastel/INA.

Chowning, J. 1971. The Simulation of Moving Sound Sources. Journal of the Audio Engineering Society 19(2).

Composition/Diffusion in Electroacoustic Music. 1997. Bourges: Editions MNEMOSYNE, vol. III.

Dhomont, F. 1995. Rappels acousmatiques/acousmatic update. Contact! 8(2):49-54.

Doherty, D. 1998. Sound Diffusion of Stereo Music over a Multi Loudspeaker Sound System: from first principles onwards to a successful experiment. Journal of Electroacoustic Music (SAN) 11:9-11.

Harrison, J. 1998. Sound, space, sculpture: some thoughts on the 'what', 'how' and 'why' of sound diffusion. Organised Sound 3(2):117-27.

MacDonald, A. 1995. Performance Practice in the Presentation of Electroacoustic Music. Computer Music Journal 19(4):88-92.

Reynolds, R. 1977. Explorations in Sound/Space Manipulation. Reports from the University of California at San Diego Center for New Music Experiment and Related Research 1(1):1-23.

Smalley, D. 1997. Spectromorphology: explaining sound-shapes. Organised Sound 2(2):107-26.

Wishart, T. 1985. On Sonic Art. York, UK: Imagineering Press.
