Unconventional Inputs

New/old instruments, design, DIY and disability

Musical instruments today exhibit a split between old and new. On one side, there are a modest number of canonical forms that have slowly evolved over millennia; they are now extremely familiar and a few can reasonably be labelled “iconic”. However, rather than idealized or even near-optimal designs, they are necessarily the product of compromise between incompatible acoustical and human factors, and therefore invariably imperfect. For some musicians and composers these limitations are a source of creative stimulation (Eno 1996; Strauss 2004), but many more rarely consider the interaction possibilities of their instrument in any depth, good or bad. In either case there may be little to no demand for changes to be made to the design of an individual instrument, let alone to a family of instruments.

On the other side, throughout the course of human history, technical developments have consistently inspired new forms of music interaction. Our “computer era” has witnessed and indeed assisted the growth of this trend to such an extent that, since Max Mathews and Richard Moore’s GROOVE system (1970), the field of new instruments and interfaces has expanded sufficiently to justify its own international conference series. Held annually since 2001, each edition of the New Interfaces for Musical Expression (NIME) conference provides a prominent platform for hundreds of new designs. The instruments and interfaces presented at NIME are notably diverse: at one end of the spectrum are interfaces for expert musicians; at the other end are sound toys and interactive installations for novices. There are also numerous instruments and interfaces intended for use by disabled people; these include generally accessible designs and bespoke instruments for specific users (Samuels 2014; Cormier 2016).

Until relatively recently, new interfaces remained overshadowed by the keyboard: few have been adopted by prominent musicians and there are only a handful of new instrument virtuosi. 1[1. This is a topic I address in “The Modular Synthesizer Divided: The keyboard and its discontents,” published in eContact! 17.4 — Analogue and Modular Synthesis: Resurgence and evolution.] This has started to change as manufacturers hurry to enter the music controller market — most notably Ableton and Novation, but also smaller companies such as Haken Audio and ROLI. There is also today a vibrant do-it-yourself (DIY) community that includes academics and students, but also formally untrained makers, hardware hackers and circuit benders. Their participation is eased by the abundant technical information available online and the increased availability of sensors and artist-friendly microcontrollers such as the Arduino.

My own experiences relate directly to the above: I have played musical instruments since childhood and participated in the NIME field for a decade. This is not in itself unusual; what is atypical is how my participation has been complicated, and to some extent shaped, by disability. Specifically, I was born seven weeks premature with an unnamed orthopedic condition that caused an assortment of limb problems. These include: an absent left hand and forearm except for a thumb-like digit attached to a double-jointed elbow; dysplasia of the right hip; bilateral patellofemoral dysplasia; bilateral fibular hemimelia; clubfoot and partial terminal deficiency.

The initial prognosis was bleak: I would be unable to walk or even sit up in a chair. My parents refused to accept this and eventually found Nigel Dwyer, a consultant orthopedic surgeon at East Birmingham Hospital. Dwyer offered a semblance of hope and, more importantly, in a fourteen-hour operation he sculpted bone and cartilage, cut and reattached tendons, and ultimately created functional feet, ankle joints and knees. The operation succeeded, but I still faced a year in plaster casts that encased my lower body and slowly repositioned my bones. Finally, aided by a below-the-knee prosthesis on one side and an ankle-foot orthotic on the other, I took my first steps and then did not stop. My development surprised even Dwyer himself. Indeed, despite prophecies of imminent doom from his successors, a remarkably active and medically problem-free childhood continued into adulthood, and at the age of 18 I moved to Newcastle upon Tyne to study Fine Art at Northumbria University. I later moved to Coventry to study under composer and former Stockhausen Ensemble member Rolf Gehlhaar, and completed a full-time master’s degree. I still experienced few medical problems. As a consequence, I thought about music all the time, but little, if at all, about disability, let alone disability in a musical instrument context.

Two unrelated events fundamentally changed my perspective. First, I met Clarence Adoo on the day of his debut concert with the HEAD=SPACE instrument at Sage Gateshead. Adoo was an acclaimed jazz trumpet player who had been left paralyzed from the neck down after a traffic accident in 1995. Designed by Gehlhaar, HEAD=SPACE provided a concrete example of how new instruments can broaden participation: it is operated by the user’s head and breathing only. Moreover, HEAD=SPACE was at least approximately comparable to traditional instruments in terms of functionality offered, capacity for personal expression and potential to support mastery. While I had already designed a handful of new instruments, seeing Adoo in concert greatly reinforced my conviction that new instruments could broaden participation in music.

The second event came in September 2009. I was well into my doctoral study, had completed my first full year as a casual lecturer, and had spent a productive summer at The Open University Music Computing Lab in Milton Keynes (UK). However, after only two days back home, my left foot collapsed. This rendered me immobile and it took six months to diagnose the condition as severe arthritis and bone spurs in the mid-foot and ankle. Treatment would usually involve fusion of the arthritic joints to reduce pain, but the foot was deemed inoperable. Additionally, the anti-inflammatory medication prescribed in the short term had little positive effect and made me profusely ill. I eventually felt I had little option but to learn to manage the pain and reacquire mobility. This was a slow and arduous process but ultimately made it possible for me to assume my current lectureship in September 2010.

All the same, a lot had changed in a relatively short space of time: the distance I could walk was shorter than previously, standing still for more than a few minutes caused intolerable after-pain, and I started to experience back problems. Moreover, how I conceived the human body and its capabilities had been irretrievably altered. Specifically, if I previously knew the diversity of bodies and the individuality of their affordances, I also considered them to be essentially static or fixed. To find, therefore, that the capabilities of the body are instead unpredictably mutable had a significant destabilizing effect, not only on my day-to-day life, but also on my creative practice. Most notably, I had created numerous instruments between 2007 and 2009 intended for myself to play, and these formed a key part of my doctoral study. However, because they had been designed specifically around the previous needs of my body, they were now inaccessible to me. For instance, the camera-controlled Vanishing Point instrument (Fig. 1) required various now-problematic body movements and states: an outstretched leap, standing almost perfectly still, and swooping low to the floor with my upper torso. Other instruments relied on cumbersome equipment and this too now limited their use. I could nevertheless continue to play the guitar, and to do so required little to no alteration to my established technique. This realization that digital musical instruments often lack the flexibility 2[2. I.e. the ability of an instrument to support a range of approaches.] of traditional instruments spurred further reflection about musical instruments and disability. These reflections underpin the design principles outlined below.

Some Principles for Instrument Design

I will first list eight design principles that relate to musical instruments and disability. These are primarily intended for designers of instruments for disabled people; however, they may also be applicable to the designers of digital musical instruments more broadly, and to the players of such instruments.

- Rather than create new instruments as a default approach, modification of existing musical instruments should be considered as a possible solution for individual needs.

- Awareness and availability are as important as physical design.

- Cultural expectations influence how and when musical instruments are used, and by whom they are used. Digital musical instruments are subject to different expectations from traditional instruments, but they are not unburdened.

- The body can adapt to traditional musical instruments, but for reasons of comfort and long-term health it is desirable to adapt the instrument to the body.

- Playing a traditional musical instrument can inform the design of digital musical instruments in obvious and less obvious ways.

- There are few universal interfaces: so-called accessible interfaces may enable new participants, but they can also inadvertently exclude others.

- The complexity of digital musical instruments is such that designers need to learn incrementally and develop over time.

- Consider the whole: the elements of a digital musical instrument are not interoperable.

I will now revisit the above principles and expand on their respective origins and relevance in turn before moving on to discuss their wider applicability.

Existing Musical Instruments and Flexibility

I vividly recall when I first decided to play a musical instrument: aged five, I unexpectedly came across a jazz band in the local park and immediately knew that I wanted to participate. My mother was uneasy about the idea, but eventually allowed my father to purchase a gold-coloured B-flat trumpet. Weekly instrumental lessons ensued and continued on into primary school.

By age nine, I had performed with the British Police Symphony Orchestra at Birmingham Symphony Hall, joined Solihull Youth Brass Band and become first trumpet in the school orchestra. I continued lessons at senior school and passed the Associated Board of the Royal Schools of Music (ABRSM) Grade 7 trumpet exam aged 12. All appeared well, but despite the investment of many hundreds of hours, I felt no real affinity for the instrument, and little performer-instrument intimacy. Indeed, the dull toil of daily practice, particularly at higher levels of study, eroded my enthusiasm and I abandoned the trumpet when I left school.

Some sixteen years on, it is pertinent to reflect on how I initially came to play that particular instrument. I knew or remembered little about this until relatively recently. However, my father inadvertently revealed that, after my initial expression of interest, he had contacted my school music teacher to determine the most suitable instrument. This led to a meeting that also included a regional music service tutor, and the three subsequently decided on the trumpet. The trumpet was favoured on the basis that it could be played by the right hand only, did not require immediate modification and was a relatively inexpensive investment. They apparently did not anticipate that my left thumb would be almost ideal to support the weight of the instrument.

Those involved in the selection had, by all accounts, little or no experience of similar cases and limited access to information on the topic. They nevertheless displayed an intuitive if incomplete awareness of musical instrument affordances, and how to match these to specific bodily affordances. This is surely related to (and enabled by) the familiarity of the instrument involved. To put this another way, it is perhaps the ubiquity of traditional instruments that enables their basic interaction requirements to be intuitively understood. This is particularly apparent in the case of the piano: the instrument is capable of some of the most complex music imaginable, but even a complete novice can tap out a simple melody (Jordà 2005).

Consciously or otherwise, there may also be influence from the numerous prominent historical examples of notable performer-instrument relationships that depart from the conventional in terms of physicality. These include performers with altered bodily affordances (i.e. those that occur after already learning to play an instrument) and unconventional bodily affordances that are present prior to learning to play a musical instrument. The former includes pianist Paul Wittgenstein, jazz guitarist Django Reinhardt and the deaf percussionist Evelyn Glennie. The latter includes one-handed pianist Nicholas McCarthy and the asthmatic saxophonist Kenneth Gorelick. The case of guitarist Tony Iommi is more complex in that, after a gory industrial accident, he chose to modify the affordances of both body and instrument rather than relearn to play the instrument right-handed (Dalgleish 2014).

Awareness and Availability

If a decade as a trumpet player can be seen as ample justification for the instrument’s selection, it is notable how the landscape has changed since my childhood. By the end of the 1980s, numerous new instruments and interfaces had been developed. These may or may not have been appropriate for my particular case, but few if any were known about, let alone available, in a modest suburb nine miles from Birmingham city centre. In fact, limited availability has afflicted new instruments and interfaces for much of their history. Many have been one-off creations intended to be played only by their designer, were produced in limited numbers or were simply prohibitively expensive. It follows that digital musical instruments that are little known or scarcely available are far less likely to be adopted.

Economic and social conditions have started to erode this exclusivity. First, as the size of the music controller market has increased, economies of scale have meant that the cost of music controllers has in the main fallen in real terms. Second, the trickle-down economics that have favoured the richest few for decades have been held accountable for their failure to spread prosperity across the economy (Stiglitz 2015). The related tendency to concentrate new technologies inside elite businesses and institutions has also been contested (Mulgan 2015). For instance, the One Laptop Per Child (OLPC), Raspberry Pi and BBC micro:bit projects are all broadly comparable in their aims: they put computers directly into the hands of children to enable them to learn to code. The subsequent increase in opportunities and prosperity, it is hoped, can in turn stimulate a kind of “trickle up” effect.

Many of these developments have been co-opted for musical applications. Comparable specialized music and audio platforms such as Bela and Axoloti Core have also become available. These have started to appreciably democratize the digital musical instruments field. First, they have provided the DIY-inclined with more options than ever before. Second, their low cost and ready availability have emboldened designers to pursue more exploratory and experimental practices. Third, the fact that they are open source has enabled new designs to be easily shared and replicated. Having multiple copies of a new instrument is particularly important if designs are to be tested more than briefly.

Influence of Cultural Expectations

I became an avid listener of the John Peel show on late night BBC Radio 1 around the time I started senior school. Peel’s promotion of mostly independent and sometimes obscure music introduced a host of unfamiliar sounds and inspired me to learn to play the guitar. My stepfather enthusiastically sourced a small 1960s acoustic from a cupboard. It had not been played since the 1980s and the neck had bowed severely. It was nonetheless a revelation. As per Hendrix, I initially turned the (right-handed) instrument upside down (i.e. strings inverted), then used my left “thumb” to pluck and right hand to fret notes. This was not entirely intuitive and I moved to a “more typical” left-handed orientation shortly after, modifying the bridge and nut to reverse the order of the strings (Fig. 2).

I practiced at every available opportunity: three to six hours most days and more at the weekend. I steadily improved and decided to start a band. An electric guitar seemed essential, but finding one proved more difficult than expected: the number of left-handed instruments stocked by retailers is tiny. I had also failed to anticipate that, despite considerable proficiency by this point, the ostensible incompatibility between body and instrument would tend to elicit polarized responses. If many people were either amiable or simply indifferent, others were outwardly sceptical and a minority overtly hostile. The latter seemed habitually to inhabit the kind of environment recalled by Rob Power:

There’s nothing quite as infuriating as being patronised by Guitar Shop Man. If you’re over 30 and gainfully employed, you are basically dead to these shark-eyed examples of anti-customer service. […] Throw in crippling anxiety about playing in a shop full of people very obviously judging you, and the complete lack of accurate information about gear and pricing, and you’ve got a perfectly hellish experience. (Power 2015)

It is clear that the guitar is subject to a distinctive set of expectations, slowly accumulated over the last century and now entrenched. These expectations are not only musical but also social, and have a substantial effect on how the instrument is used and by whom. If conventions can in some instances be creatively subverted, departing from them can also be a source of considerable unease.

[P]eople seem to always be working, even when we’re playing, because the tools of work have become the tools of leisure, thereby making leisure virtually indiscernible from work. (Alexander 2008)

Nevertheless, as Amy Alexander underlines, despite far less historical prominence, digital musical instruments are no less subject to cultural norms and conventions. Designers need to consider the impact of these norms on intended users more fully and consistently.

Adaptation of the Body to the Instrument and of the Instrument to the Body

The guitar could be a messy business. Because I was unable to hold a plectrum, the more I played, the more the metal strings wore away the small nail on my left thumb and cut deeply into the soft tissue beneath. I initially hoped that the skin would simply harden. This unfortunately did not happen and I attempted to improvise a solution. A trip to a local folk music store identified plastic thumb picks manufactured by Dunlop and intended for banjo players. These were not quite the required size and shape, but I purchased several and attempted to remould them in hot water. This created a usable solution, but lacked the physical security necessary for live performance. Adhesive tape wrapped around the joint between the thumb and the pick improved matters slightly, but contact adhesive ultimately proved to be the only dependable solution. 3[3. Extremely unpleasant!] In retrospect, forcing the body to conform to the interaction demands of an instrument can only seem foolhardy: numerous studies have shown how poor posture and technique can not only impair musical performance, but also cause or contribute to long-term health problems. Musculoskeletal issues are typical, and the neck, shoulders and lower back are most commonly affected (Leaver et al. 2011).

Digital musical instruments have considerable promise in this respect. Acoustic instruments require the performance interface and sound generation mechanism to interact physically in order to produce a sound. This necessity places significant limitations on their form: they cannot solely consider the needs of the human body (Marshall 2009). Digital musical instruments are subject to far fewer restrictions in their design: wireless connectivity options mean that their elements need have no physical connection at all if the designer so wishes. They can therefore take almost any conceivable form and could, for instance, explicitly and entirely reflect the needs of the body.

Traditional Musical Instruments Can Inform the Design of Digital Musical Instruments

For Mark Leman, practice enables a musical instrument to become a natural extension of the body of the performer. More specifically, he contends that extended engagement with an instrument enables it to become increasingly known and transparent to the performer to the point that it ultimately disappears (i.e. no longer feels external), and the performer is able to focus on musical aspects (Leman 2008). Numerous authors have discussed the importance of haptic sensation in this respect (Rebelo 2006; Marshall 2009), but there has been only limited discussion of tactility in relation to electric instruments. Nevertheless, once I started to play the electric guitar (a left-handed Gibson SG), I felt a palpable connection to an instrument for the first time: vibrations passed easily from the instrument’s slab body to my thumb and up into my arm and chest. This sense of a musical instrument becoming part of the body may not be unusual for players of acoustic instruments (Leman 2008). However, as Pedro Rebelo (2006) describes, digital musical instruments suffer from an innate lack or loss of intimacy. This is rooted in the emphatic separation of the performance interface from the means of sound generation and sound diffusion: without a physical coupling to pass vibrations to the performer, there is little haptic sensation (Marshall 2009).

For some researchers it is therefore desirable to reinstate haptic feedback in digital musical instruments. To this end they embed vibration motors and other electronic elements in their designs to artificially enhance the amount of haptic sensation. However, my own experiences with the trumpet and guitar have had quite the opposite effect: understanding (to some extent) the properties and possibilities of traditional instruments did not inspire a desire to mimic them, but instead a respect for their integrity as unique, indivisible artefacts. Thus, rather than imitate traditional instruments, I have instead tried to find and exploit what is unique to computer-based instruments. In particular, instead of the acoustic instrument model of direct control over individual sounds (or sound events), many of the digital musical instruments I have designed offer the ability to more loosely steer how iterative musical processes unfold. Indeed, players who expect to find fine control may find them capricious; human input may influence their precariously balanced trajectories, but there is little semblance of being “in control”. Nevertheless, they are distinct from sound toys in their ability to support extended player commitment. In particular, a patient player will discover their potential to be “teased” between states, from the placid to the furiously unruly.

This has all sorts of implications for how learning and mastery are understood. For instance, traditional musical instruments require a specific kind of mastery rooted in the physical: the development of the fine motor skills needed to produce specific sound output precisely and repeatedly. By contrast, mastery in the context of my digital musical instrument designs is not only rooted in an acceptance of imprecise influence rather than precise control, but also in a shift from the haptic to the auditory: their behaviours can only be monitored and controlled by close listening.
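The instruments described above are not specified in technical detail here, so the following is only a hypothetical sketch of what "influence rather than control" over an iterative process might look like. It assumes the logistic map as a stand-in process (not the author's algorithm): the player's input merely nudges its growth parameter `r`, and each new state is mapped to a pitch. For `r` below 3 the map settles on a single repeating value; as `r` approaches 4 it passes through periodic and then chaotic behaviour, loosely analogous to moving between placid and unruly states. The names `SteeredProcess` and `state_to_midi_note`, the sensitivity value and the pitch range are all illustrative assumptions.

```python
def logistic_step(x: float, r: float) -> float:
    """One iteration of the logistic map: x -> r * x * (1 - x)."""
    return r * x * (1.0 - x)


class SteeredProcess:
    """Hypothetical iterative process that player input can influence but not dictate."""

    def __init__(self, x0: float = 0.5, r: float = 3.2,
                 r_min: float = 2.8, r_max: float = 4.0,
                 sensitivity: float = 0.1) -> None:
        self.x = x0                      # current state, stays within [0, 1] for r <= 4
        self.r = r                       # growth parameter that the player steers
        self.r_min = r_min
        self.r_max = r_max
        self.sensitivity = sensitivity   # assumed scaling: how far one gesture moves r

    def nudge(self, amount: float) -> None:
        """Shift r by a scaled-down amount, clamped to [r_min, r_max]."""
        self.r = min(max(self.r + self.sensitivity * amount, self.r_min), self.r_max)

    def step(self) -> float:
        """Advance the process by one iteration and return the new state."""
        self.x = logistic_step(self.x, self.r)
        return self.x


def state_to_midi_note(x: float, low: int = 48, span: int = 24) -> int:
    """Map a state in [0, 1] to a MIDI note number between low and low + span."""
    return low + round(x * span)


if __name__ == "__main__":
    proc = SteeredProcess()
    for bar in range(4):
        notes = [state_to_midi_note(proc.step()) for _ in range(8)]
        print(f"r={proc.r:.2f}", notes)
        proc.nudge(2.0)  # the "player" pushes r upward a little after each bar
```

The design choice worth noting is that the player never sets a note directly: input only moves `r`, and the resulting pitches emerge from the process itself, so the outcome has to be judged by listening rather than by feel.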

Accessible Interfaces Can Exclude as Well as Enable

As far back as I can remember, I have played video games. I received a Nintendo Entertainment System for Christmas in 1989 and it felt natural to use my left foot to operate the controller’s D-pad, and my right hand to manipulate its two action buttons. My left thumb eventually superseded the foot and I started to play PC titles. The standard keyboard-mouse interface presented few problems beyond the need to raise the left edge of the keyboard for comfort in extended sessions.

While systems were rendered obsolete every few years, their means of interaction evolved comparatively slowly and I easily adapted to each new controller. This linear progression from one system to the next came to an abrupt end in 2006 with the release of the Nintendo Wii. Conspicuously, Nintendo did not attempt to compete with Microsoft or Sony on the basis of raw audio-visual power. They instead tried to create a more accessible platform that appealed to families and other non-traditional markets. To this end, the Wii introduced a gestural interface based around dual motion-sensitive controllers and an infrared position sensor. Ironically, rather than further enable me, the need to hold two controllers simultaneously 4[4. I.e. one controller per hand.] now excluded my participation.

This initially seemed inconsequential, but the success of the Wii soon inspired related interfaces such as the camera-based Sony Eye Toy (2007) and the infrared-based Microsoft Kinect (2010). The latter represents joint positions as coordinates in 3D space and must be calibrated by the user on startup. I was initially optimistic about the potential of this object-free interaction. However, the inflexibility of the Kinect’s skeleton model meant it could not recognize my body and therefore excluded my use. Fortunately, this had little impact on how I played video games: I simply defaulted to a more conventional control pad. However, the hackability of the Kinect meant that it subsequently found use in a variety of different contexts, from posture analysis to digital musical instruments. It was only when my students started to take an interest in and develop Kinect-based instruments, and Wii controllers were used in the Harmony Space project (Fig. 3), that I considered how other users may be affected.

My own incompatibility with the Kinect is likely an unintended consequence of the need to handle complex environmental conditions (Wei, Qiao and Lee 2014). Nevertheless, it is a reminder that there are few universal interfaces and that interfaces intended to enable some participants can sometimes inadvertently exclude others. Above all, this unexpected incompatibility emphasizes that the assumptions of designers are not to be trusted: it is vital to test on real users, ideally early and repeatedly in the development process.

Incremental Learning and Development

My route into digital musical instrument design has not only been non-linear, but also at least partially the result of circumstance. At Northumbria University I had access to a particularly well equipped metal workshop, and this enabled me to produce bulky steel sound sculptures. The need to work on a more modest scale at Coventry University led me to explore found and bent electronic circuits. Some exceptional sounds were produced, but their restricted sonic palette and limited compositional control made me crave more variety. Gehlhaar proposed that I should learn to code, and I spent the next six months immersed in Max/MSP. I quickly found Max to be a capable hub for controllers and peripherals: new instruments and interfaces have captivated me ever since.

This interest led to a PhD studentship and eventually to my current lectureship in Music Technology at the University of Wolverhampton. During my time as course and module leader, digital musical instruments have gradually infused the curriculum as part of a broader shift towards sound and music computing. The backgrounds of our undergraduate students are typically similar to my own only in that they are not trained in electronics or computer science. Instead, most are experienced in DAW use, some have recorded a band in a studio and around half play a musical instrument. There is often a perception that code is difficult, and new instruments and interfaces can seem entirely alien. Rather than overwhelm new students, the curriculum therefore acknowledges the complexity of a multi-faceted subject: modules are sequenced so that students progressively develop understanding and have the opportunity to apply their skills to a variety of contexts. For example, first-year students are introduced to the graphical Pure Data and the text-based SuperCollider almost simultaneously.

These platforms are then used in the second year for classes in sound synthesis and video game sound, respectively. The third year culminates in a dedicated music interaction module. This encompasses interaction design (IxD) theory, the use of commercial Human Interface Devices (HIDs) and DIY interfaces based on the Arduino microcontroller. The aim is to draw interface and synthesis together, and to consider how the two ends of an instrument can be sympathetically joined. Creative interaction possibilities are often discovered for even the most familiar synthesis algorithms.

Since 2013 the Music Department has also hosted a closely related postgraduate programme. The MSc Audio Technology programme enables interested students to explore music interaction-related topics in more depth, and has a particular focus on the application of human-computer interaction (HCI) methodologies to the musical instrument context. A student-led laptop ensemble is an integral part of the course, providing a platform for testing new interfaces and compositions in a uniquely demanding performance context. The instruments developed are diverse. For instance, the 2015 iteration of the ensemble featured a tangible sequencer, a synthesizer controlled by a genetic algorithm, a synthesizer controlled by a game pad and a gesture-controlled live sampler (Fig. 6).

Digital Musical Instrument Elements Are Not Interoperable

Mapping, or how the elements of a digital musical instrument are joined, is crucial, but not always fully considered. For instance, Sergi Jordà (2005) notes that despite the diversity of the NIME community, NIME researchers have focused almost entirely on the performance interface (i.e. input). At the same time, there are Digital Audio Effects (DAFx) researchers who are equally absorbed in the development of novel sound generation and processing techniques (i.e. output). There is little cross-participation or collaboration between the two communities (Ibid.). However, the input and output sides of digital musical instruments are not inherently interchangeable; for example, it is difficult or impossible to play a fluidly expressive (emulated/virtual) saxophone part on a MIDI keyboard.

[G]enerally speaking I think we can say that an instrument, as opposed to a controller or a circuit or an interface, combines a totality of function, control and sound. (Scott 2016)

Jordà also argues that digital musical instruments are considerably more than either input or output aspects alone, and that blinkered views (i.e. fragmentation of the field) ultimately risk unbalanced and incoherent designs (Jordà 2005). That is to say, if the performance interface is developed independently from sound generation on the basis that it can simply be added after the fact, the resultant combination may be found incompatible.
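To make the keyboard and saxophone example concrete, the hypothetical Python sketch below compares what two input devices can supply to the breath-pressure parameter of an emulated wind instrument. It assumes a plain MIDI keyboard that sends only note number and onset velocity (ignoring the aftertouch and other continuous controllers that some keyboards provide) and a breath sensor read through a 10-bit analogue-to-digital converter. The `NoteOn` class and both mapping functions are illustrative names, not part of any real synthesis API.

```python
from dataclasses import dataclass


@dataclass
class NoteOn:
    """A MIDI-style note-on event: both values are fixed at the moment of the key press."""
    pitch: int     # MIDI note number, 0-127
    velocity: int  # MIDI velocity, 1-127


def keyboard_to_breath(note: NoteOn, n_frames: int) -> list[float]:
    """Hypothetical mapping from a key press to a breath-pressure curve in [0, 1].

    The keyboard reports nothing after the onset, so every frame of the note
    receives the same level; any swell or decay would have to be invented here.
    """
    level = note.velocity / 127.0
    return [level] * n_frames


def breath_sensor_to_breath(readings: list[int], max_reading: int = 1023) -> list[float]:
    """Hypothetical mapping from continuous breath-sensor readings to [0, 1]."""
    return [min(max(r / max_reading, 0.0), 1.0) for r in readings]


if __name__ == "__main__":
    key_curve = keyboard_to_breath(NoteOn(pitch=62, velocity=96), n_frames=6)
    breath_curve = breath_sensor_to_breath([120, 480, 900, 1023, 700, 250])
    print("keyboard:", [round(v, 2) for v in key_curve])
    print("breath:  ", [round(v, 2) for v in breath_curve])
```

The mismatch shows up however carefully the mapping layer is written: the wind model's most expressive parameter receives a flat line from the keyboard, which is one reason to design input and output together rather than joining them after the fact.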

A lot of the off-the-shelf applications are designed by engineers fighting with marketing departments who are, for the most part, not really of that world that they are trying to market to; they may be on the periphery but there’s always this kind of not-quite-getting-it that takes place. So these applications that are made for the public are always designed, and the features that are revealed for the public are thought up by people in a way that’s kind of like design-by-committee; again, unless you’re using Max/MSP or something and designing your own tools. (Cascone 2011)

Amid the tensions of commercial development that Kim Cascone outlines, fragmentation may be difficult to avoid in some design cultures, at least in the short term. By contrast, most digital musical instruments are DIY efforts developed entirely by individuals or small teams, and are therefore especially well placed for more coherent design.

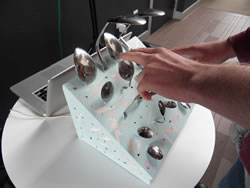

The Spoon Machine (Fig. 7) was developed by three third-year students in spring 2016. Its metal spoons act as body contacts and are touched by the performer to produce sound. The instrument is a pertinent example of cohesive design in that input and output were considered throughout the design process and are mutually influential. On one side, the categorization and organization of sounds directly informs the layout of the performance interface. On the other side, the instrument’s sounds are derived from recordings of the performance interface being physically manipulated by the performer. In other words, the sound output reflects both the life and the materiality of the instrument, while the performance interface is shaped to accommodate the chosen categorization of those sounds.

Discussion and Conclusions

In the decade since I started to create new instruments and interfaces, interest in the NIME field has grown and the number of participants has expanded significantly. They include large and small commercial manufacturers, academic researchers, and a vibrant community of amateur and informal makers. The NIME conference series alone has provided a showcase for hundreds of new instruments and interfaces, and many more appear on YouTube, Instructables and other online platforms. The intended users of these designs are varied, and some designs have enabled people who were previously excluded, for instance due to physical disability, to play and perform. However, the NIME community’s quest for novelty has meant that traditional instruments are often overlooked as potential solutions for disabled and other unconventional users. This not only limits users’ options and leaves them with relatively untested designs; it also jeopardizes the potential for mutually beneficial exchange between old and new. For instance, analysis of interactions between performers and acoustic instruments can help to pre-empt problems and identify potential deficiencies in new instruments. Conversely, interaction mechanics tried and tested in the acoustic domain can be transferred to the digital domain, enabling performers to leverage their existing skills. More speculatively, rather than replacing traditional instruments, digital musical instruments can, by shaking us out of complacency, enable the unique qualities of traditional instruments to be seen anew.

It is also useful to reconsider what it means to be disabled (or differently abled) in the context of musical instruments. While new instruments and interfaces have started to recognize user diversity and have taken steps towards inclusivity, there is still limited discussion of how interfaces can inadvertently exclude. There is also little consideration of the fact that the capabilities of individual bodies are not only diverse but also in flux, subject to changes that may be unexpected yet significant for musical interaction.

Acknowledgements

Thank you to Rolf Gehlhaar, Simon Holland and the staff and students of the University of Wolverhampton Music Department. Special thanks to Nigel Dywer (FRCS), Jeff Lindsay (FRCS) and Phil Davies.

Bibliography

Alexander, Amy. “Get away from your laptop and dance! Where does work stop and leisure start?” Peacock Visual Arts. Concert information for 20 June 2008 event. http://www.peacockvisualarts.com/get-away-from-your-laptop-and-dance

Cascone, Kim. Unpublished lecture presented at Huddersfield University. May 2011.

Cook, Perry. “Principles for Designing Computer Music Controllers.” NIME 2001. Proceedings of the 1st International Conference on New Interfaces for Musical Expression (Seattle WA, 1–2 April 2001). http://www.nime.org/2001 Available online at http://soundlab.cs.princeton.edu/publications/prc_chi2001.pdf

Cormier, Zoe. “And on the MiMu Gloves… The ingenious devices helping disabled musicians to play again.” The Guardian 27 May 2016. http://www.theguardian.com/music/2016/may/27/and-on-the-mimu-gloves-the-ingenious-devices-helping-disabled-musicians-to-play-again

Dalgleish, Mat. “Reconsidering Process: Bringing Thoughtfulness to the Design of Digital Musical Instruments for Disabled Users.” INTER-FACE: International Conference on Live Interfaces (Lisbon, Portugal: 20–23 November 2014). http://users.fba.up.pt/~mc/ICLI/dalgleish.pdf

_____. “The Modular Synthesizer Divided: The keyboard and its discontents.” eContact! 17.4 — Analogue and Modular Synthesis: Resurgence and evolution (February 2016). https://econtact.ca/17_4/dalgleish_keyboard.html

Dean, Roger (Ed.). The Oxford Handbook of Computer Music. New York: Oxford University Press, 2009.

Eno, Brian. A Year with Swollen Appendices. London: Faber and Faber, 1996.

Fletcher, Neville. “The Evolution of Musical Instruments.” The Journal of the Acoustical Society of America 132/3 (2012), p. 2071.

Howe, Blake, Stephanie Jensen-Moulton, Neil Lerner and Joseph Straus. The Oxford Handbook of Music and Disability Studies. New York: Oxford University Press, 2015.

Jordà, Sergi. “Digital Lutherie: Crafting musical computers for new musics’ performance and improvisation.” Unpublished doctoral dissertation, Universitat Pompeu Fabra, 2005.

Leaver, Richard, Elizabeth Clare Harris and Kenneth T. Palmer. “Musculoskeletal Pain in Elite Professional Musicians From British Symphony Orchestras.” Occupational Medicine 61/8 (December 2011), pp. 549–555.

Leman, Mark. Embodied Music Cognition and Mediation Technology. Cambridge MA: The MIT Press, 2007.

Li, Saiyi, Pubudu Pathirana and Terry Caelli. “Multi-Kinect Skeleton Fusion For Physical Rehabilitation Monitoring.” EMBS 2014. Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Chicago IL, USA: 26–30 August 2014). http://embc.embs.org/2014 Available online at http://ieeexplore.ieee.org/document/6944762/?arnumber=6944762

Marshall, Mark T. “Physical Interface Design for Digital Musical Instruments.” Unpublished doctoral dissertation, McGill University, 2009.

Mathews, Max and Richard Moore. “Groove — A Program to Compose, Store and Edit Functions of Time.” Communications of the Association for Computing Machinery 13/12 (December 1970), pp. 715–721.

Medeiros, Rodrigo Pessoa, Filipe Calegario, Giordano Cabral and Geber Ramalho. “Challenges in Designing New Interfaces for Musical Expression.” Proceedings of the 3rd International Conference on Design, User Experience and Usability (Heraklion, Crete, Greece: 22–27 June 2014). http://2014.hci.international/duxu Available online at http://www.cin.ufpe.br/~musica/aulas/HCII2014_final.pdf

Mulgan, Nick. “Inequality and Innovation: The end of another trickle down theory?” National Endowment for Science, Technology and the Arts (NESTA). 12 February 2015. http://www.nesta.org.uk/blog/inequality-and-innovation-end-another-trickle-down-theory

Nishibori, Yu and Toshio Iwai. “Tenori-on.” NIME 2006. Proceedings of the 6th International Conference on New Interfaces for Musical Expression (Paris: IRCAM—Centre Pompidou, 4–8 June 2006). http://recherche.ircam.fr/equipes/temps-reel/nime06/proc/nime2006_172.pdf

OHMI. “Previous Winning Instruments.” OHMI: One Handed Musical Instrument Trust. http://www.ohmi.org.uk/previous-winning-instruments.html

Paradiso, Joseph and Sile O’Modhrain. “Current Trends in Electronic Music Interfaces.” Journal of New Music Research 32/4 (2003), pp. 345–349.

Power, Rob. “The 10 Worst Things About Being a Guitarist.” Music Radar. 3 November 2015. http://www.musicradar.com/news/guitars/the-10-worst-things-about-being-a-guitarist-630111

Rebelo, Pedro. “Haptic Sensation and Instrumental Transgression.” Contemporary Music Review 25/1–2 (2006), pp. 27–35.

Samuels, Koichi. “Enabling Creativity: Inclusive music practices and interfaces.” Proceedings of the Second International Conference on Live Interfaces (Lisbon, Portugal: 20–23 November 2014), pp. 160–172.

Scott, Richard. “Back to the Future: On misunderstanding modular synthesizers.” eContact! 17.4 — Analogue and Modular Synthesis: Resurgence and evolution (February 2016). https://econtact.ca/17_4/scott_misunderstanding.html

Stiglitz, Joseph E. “Inequality and Economic Growth.” The Political Quarterly 86 (December 2015), pp. 134–155.

Strauss, Joseph. Stravinsky’s Late Music. New York: Cambridge University Press, 2004.

Wei, Tao, Yuansong Qiao and Brian Lee. “Kinect Skeleton Coordinate Calibration for Remote Physical Training.” MMEDIA 2014. Proceedings of the 6th International Conferences on Advances in Multimedia (Nice, France: Université Haute-Alsace, 23–27 February 2014). https://www.thinkmind.org/index.php?view=article&articleid=mmedia_2014_4_20_50039
