Interactive Composing with Autonomous Agents

This article describes an approach to creating interactive performance/composing systems that the author has used for both improvisation and fixed compositions. The music produced results from the interactions between a human performer and an ensemble of virtual performers. The musical output typically consists of several distinct layers, but all the sounds heard by the audience are derived from the sound made by the human performer. The approach can be thought of as a way of creating an extended instrument that is responsive enough for the performer to perceive how the system reacts to performance nuances and note choices, and so to “play” the ensemble effectively. The current systems are implemented in the programming environment Pure Data (Pd), using a design based on the idea of autonomous software agents. This design was adopted because it provides a neat way to encapsulate various compositional techniques within a single, unified framework.

Live Performance

The agent design described here has evolved over a ten-year period; it was initially used as an interactive control system for synthesizers in improvisatory performance contexts (Spicer, Tan and Tan 2003). The current agent systems rely on a live input signal, usually a flute or vocal performance captured by a microphone, both to control the system and to provide the raw material for all the sounds produced. Statistical analysis of the pitch and duration information in the live signal guides the agents’ behaviour. The details of the analysis vary from piece to piece (or system to system), but typically involve determining the average pitch of a phrase, its pitch range, its length or the number of attacks it contains. Each agent uses the live signal as the raw material for its own contribution to the resulting musical texture. This dependence on the live input creates a very tight feedback loop between the human performer and the agent ensemble: because all the sonic material produced by the agents is derived from the live signal, the player can leverage his/her instrumental technique to have a significant impact on the ensemble sound. Extended instrumental techniques, such as multiphonics and whistle tones, can also be employed effectively.
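The phrase statistics mentioned above can be sketched as follows. This is a minimal Python illustration, not the Pd implementation: it assumes the pitch tracker’s output has already been segmented into phrases and converted into a list of note events, and the `NoteEvent` type and `phrase_stats` function are hypothetical names introduced here.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class NoteEvent:
    """One detected note (hypothetical representation of pitch-tracker output)."""
    onset: float     # seconds from an arbitrary reference time
    duration: float  # seconds
    pitch: float     # MIDI note number


def phrase_stats(events: list[NoteEvent]) -> dict[str, float]:
    """Summarize one phrase: average pitch, pitch range, length and attack count."""
    if not events:
        raise ValueError("a phrase must contain at least one note")
    pitches = [e.pitch for e in events]
    start = min(e.onset for e in events)
    end = max(e.onset + e.duration for e in events)
    return {
        "average_pitch": fmean(pitches),
        "pitch_range": max(pitches) - min(pitches),
        "length": end - start,          # from first attack to end of last note
        "attacks": len(events),
    }


phrase = [NoteEvent(0.0, 0.5, 72), NoteEvent(0.5, 0.25, 74), NoteEvent(0.75, 1.0, 67)]
print(phrase_stats(phrase))
```

Any subset of these values could then feed an agent’s decision-making; which ones are used is a per-piece choice.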

Agents

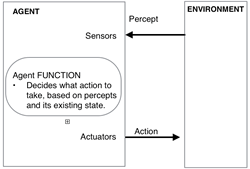

Central to the system is the concept of autonomous agents, which the computer science community has used for many years (Russell and Norvig 2003). An agent can be considered an entity that can perceive its operating environment in some way (in this case, “listening”) and has some ability to change or interact with that environment (in this case, “performing”). An agent has some internal mechanism or process (“thinking”) for deciding exactly how it will interact with the environment. This “thinking” part, referred to as the “agent function”, can be implemented in a wide variety of ways, and it is this flexibility that makes the agent model such a useful abstraction: it offers a neat way of encapsulating many different compositional algorithms or decision-making strategies in one unifying design.

There are two broad categories of agent in the systems that we are concerned with here: performers and controllers. Performer agents transform the input sound in various ways, while controller agents either control which agents are heard by the audience or specify a set of goal states that determine the behaviour of the performer agents.

Performer Agents

In these systems, performer agents continually listen to the live audio signal produced by the human performer. The instantaneous pitch and amplitude data, together with cumulative statistical data, feed each agent’s decision-making process, which determines how that agent alters the live input signal.

There are two categories of performer agents. The simpler type applies “traditional” sound effects, such as echo, chorus, flanging, spectral delay and ring modulation, to the live signal, adjusting the controls of these DSP effects in response to the input. These agents are mainly used to shape the overall ambience of the ensemble output.
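To illustrate an effect agent adjusting its controls from the input, the sketch below uses ring modulation, one of the effects listed above. The control rule (carrier frequency following the tracked input pitch, scaled by loudness) and the names `choose_carrier` and `ring_modulate` are hypothetical, chosen only to show the shape of such an agent; the article does not specify the mappings actually used.

```python
import math


def choose_carrier(input_hz: float, amplitude: float,
                   ratio_quiet: float = 0.5, ratio_loud: float = 1.5) -> float:
    """Hypothetical control rule: louder playing raises the carrier relative to the input pitch."""
    a = max(0.0, min(1.0, amplitude))  # clamp normalized amplitude to [0, 1]
    return input_hz * (ratio_quiet + a * (ratio_loud - ratio_quiet))


def ring_modulate(block: list[float], carrier_hz: float,
                  sample_rate: int = 44100, phase: float = 0.0) -> tuple[list[float], float]:
    """Multiply the input by a sine carrier; return the output and the phase to continue from."""
    step = 2.0 * math.pi * carrier_hz / sample_rate
    out = []
    for x in block:
        out.append(x * math.sin(phase))
        phase += step
    return out, phase % (2.0 * math.pi)


# One control update per audio block: read analysis values, set the carrier, process audio.
live_block = [math.sin(2 * math.pi * 440 * n / 44100) for n in range(64)]
carrier = choose_carrier(input_hz=440.0, amplitude=0.8)
processed, next_phase = ring_modulate(live_block, carrier)
```

Returning the phase lets consecutive blocks join without clicks when the carrier is updated between them.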

Most of the agents play a more textural or structural role. They transform the live signal into distinct musical lines, which may have contrasting timbres and perform different musical functions.

Performer agents produce their audio output by transforming the live input signal. The signal processing techniques employed range from pitch shifters based on granular synthesis to synthesizers based on the Karplus-Strong plucked-string algorithm. As a result, the live signal can be transformed into drones, parallel harmonies, counter-melodies, ambience effects and pointillistic clouds. Because the audio is a transformation of the live signal, the human performer retains an element of control over the entire ensemble sound.

Performer agents all share the same basic operating principle, which is to:

- Determine the current pitch of the live signal;

- Calculate the next pitch the agent will play, using the strategy specific to that agent;

- Calculate the interval between the input pitch and the calculated pitch;

- Transform the live signal so that it has the required pitch using the signal processing technique built into the agent;

- Calculate the length of the new note;

- Play the note for the required duration.
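The six steps above can be summarized as one decision cycle. The Python sketch below illustrates them under stated assumptions rather than reproducing the author’s Pd patch: pitches are MIDI note numbers, the agent-specific pitch and duration strategies are passed in as functions, and the interval is converted into the frequency ratio a pitch shifter would need using the equal-tempered relation ratio = 2^(interval/12). `AgentNote` and `performer_step` are hypothetical names, and actually playing the note is left to the audio engine.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentNote:
    target_pitch: float   # MIDI note number the agent will play
    interval: float       # semitones from the live input pitch to the target
    ratio: float          # frequency ratio handed to the pitch-shifting DSP
    duration_ms: float    # how long to hold the transformed note


def performer_step(input_pitch: float,
                   choose_pitch: Callable[[float], float],
                   choose_duration: Callable[[], float]) -> AgentNote:
    """One cycle of a performer agent; input_pitch is the tracked pitch (step 1)."""
    target = choose_pitch(input_pitch)          # step 2: agent-specific strategy
    interval = target - input_pitch             # step 3
    ratio = 2.0 ** (interval / 12.0)            # step 4: semitones -> frequency ratio
    return AgentNote(target, interval, ratio, choose_duration())  # step 5


# Hypothetical agent that shadows the player a perfect fifth lower in half-second notes.
note = performer_step(69.0, lambda p: p - 7, lambda: 500.0)
print(note.interval, round(note.ratio, 4))
```

Keeping the strategies as plain functions mirrors the agent idea: the cycle stays fixed while the “thinking” part is swapped per agent.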

The structure of this type of agent is shown in Figure 2.

The performer agents employ a variety of algorithms to determine the pitch and duration of the notes they play. So far, the algorithms implemented are those that interest the author; they include stochastic algorithms, such as Gaussian, beta and exponential distribution random number generators, simple fractal-based line generators, and tracking and evasion algorithms (Dodge and Jerse 1997). The agents are built around two 96-element arrays that store the outputs of the chosen compositional algorithms: one holds note-duration data and the other pitch data. Simple mapping functions translate the contents of these two arrays into the agent’s musical output.
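A minimal sketch of the two-array design, assuming the arrays hold values normalized to the range 0 to 1 and that the mapping functions are a linear pitch map and an exponential duration map. The distribution parameters, the pitch and duration ranges, and the use of midpoint displacement as the “fractal line generator” are illustrative assumptions, not details taken from the author’s systems.

```python
import random

ARRAY_SIZE = 96  # array length given in the text


def gaussian_array(rng: random.Random, mu=0.5, sigma=0.15, n=ARRAY_SIZE):
    # Clamp so every value stays in the normalized range [0, 1].
    return [min(1.0, max(0.0, rng.gauss(mu, sigma))) for _ in range(n)]


def beta_array(rng: random.Random, a=0.5, b=0.5, n=ARRAY_SIZE):
    # Beta(0.5, 0.5) favours the extremes of [0, 1].
    return [rng.betavariate(a, b) for _ in range(n)]


def exponential_array(rng: random.Random, lam=4.0, n=ARRAY_SIZE):
    # Mostly small values with occasional large ones, clipped at 1.
    return [min(1.0, rng.expovariate(lam)) for _ in range(n)]


def midpoint_displacement(rng: random.Random, n=ARRAY_SIZE, roughness=0.5):
    """Fractal line: repeatedly offset segment midpoints by shrinking random amounts."""
    size = 1
    while size + 1 < n:           # smallest power of two with size + 1 >= n
        size *= 2
    line = [0.0] * (size + 1)
    line[0], line[size] = rng.random(), rng.random()
    step, scale = size, 0.5
    while step > 1:
        half = step // 2
        for i in range(half, size, step):
            line[i] = (line[i - half] + line[i + half]) / 2 + rng.uniform(-scale, scale)
        step, scale = half, scale * roughness
    line = line[:n]
    lo, hi = min(line), max(line)
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in line]   # rescale to [0, 1]


def map_pitch(x, low=60, high=84):
    """Linear map from [0, 1] to a MIDI pitch, rounded to the nearest semitone."""
    return round(low + x * (high - low))


def map_duration(x, shortest=100.0, longest=2000.0):
    """Exponential map from [0, 1] to milliseconds, so equal steps give equal ratios."""
    return shortest * (longest / shortest) ** x


rng = random.Random(2013)
pitch_array = beta_array(rng)
duration_array = exponential_array(rng)
notes = [(map_pitch(p), map_duration(d)) for p, d in zip(pitch_array, duration_array)]
```

Separating generation from mapping means any generator can drive either array, and the musical range of an agent is changed only in the mapping functions.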

An important aspect of the performer agents’ design is their ability to modify their internal states to meet externally specified targets. The two targets used in these systems are average pitch and average duration. To let an agent readjust its internal state to meet any supplied target, a simple form of gradient descent learning is used. Each agent periodically calculates the current average of the data stored in its pitch and duration arrays and compares these averages with a target average pitch and a target average duration. The resulting two error measurements are used to bias the contents of each array slightly, so as to reduce the error. Repeating this process several times a second eventually makes the agent converge on the target behaviour.
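The adjustment can be sketched as follows, assuming the target is expressed in the same units as the array data and that the bias is applied equally to every element. Under that assumption, one update is a gradient-descent step on the squared error between the array mean and the target (the 1/N factor of the gradient is folded into `rate`), so each step shrinks the error by a factor of (1 - rate). The function names and the value of `rate` are hypothetical.

```python
from statistics import fmean


def learning_step(values: list[float], target: float, rate: float = 0.1) -> list[float]:
    """Bias every element by rate * error so the array mean moves toward target.

    This is gradient descent on E = 0.5 * (mean(values) - target) ** 2, whose
    gradient with respect to each element is (mean - target) / N; the 1/N is
    absorbed into `rate`, so the mean moves by exactly rate * error per step.
    """
    error = target - fmean(values)
    return [v + rate * error for v in values]


def adapt(pitches, durations, target_pitch, target_duration, rate=0.1):
    """One periodic update of both arrays (intended to run several times a second)."""
    return (learning_step(pitches, target_pitch, rate),
            learning_step(durations, target_duration, rate))


pitches = [0.2] * 48 + [0.6] * 48       # mean 0.4
durations = [0.1, 0.3] * 48             # mean 0.2
for _ in range(50):
    pitches, durations = adapt(pitches, durations, target_pitch=0.7, target_duration=0.5)
print(round(fmean(pitches), 3), round(fmean(durations), 3))
```

Because every element receives the same offset, the internal shape of the generated material (its contour and contrasts) is preserved while its average drifts toward the target.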

Controller Agents

In addition to the performer agents, there are two higher-level agents that affect the overall musical output. One supplies the targets (average pitch and average duration) that the performer agents use to adjust their internal states individually. The other acts as a “mixing engineer”, determining which performer agents are heard by the audience. Both are implemented as finite-state machines that use an analysis of the live signal to shape the musical output. They share a simple design in which each agent can be in one of eight different states. When creating a new composition with this type of system, the composer needs to:

- Determine the eight different target states for the system. Each state is a vector: for the mixing agent, the target volume level of each performer agent; for the target-setting agent, the target average pitches and average durations for the performer agents. Setting these states is a very important compositional decision, as it determines the behavioural extremes of the piece.

- Assign each state to one of the eight corners of a cube centred at the origin with a side length of 2, i.e., the vertices (1,1,1), (1,1,-1), (1,-1,1), (1,-1,-1), (-1,1,1), (-1,1,-1), (-1,-1,1) and (-1,-1,-1).

In operation, these agents:

- Periodically derive three values between -1 and 1 from the recently heard live signal. The basic analysis of the input signal is done with a fiddle~ (or sigmund~) object; this data is accumulated over a particular time interval and then analysed statistically. A mapping function applied to the resulting pitch and duration data produces the three values, which form a 3D coordinate determined by what the human performer has played. The exact mapping is a major compositional decision, fine-tuned for each piece and usually arrived at by trial and error. For example, in one piece, the mean input pitch, the duration of the last phrase and its melodic range are each mapped onto a value in the range -1 to 1 to produce the 3D coordinate.

- Calculate the Euclidean distance of this 3D coordinate from each of the vertices of the cube.

- Set the system to the state whose vertex has the smallest Euclidean distance from the 3D coordinate.
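The controller agents’ state selection can be sketched in Python as below. The feature ranges in `analysis_to_point` are hypothetical placeholders for the piece-specific mapping that is tuned by trial and error, the vertex order follows the list of cube corners given earlier, and `mixer_targets` is an invented set of eight volume vectors standing in for the composer-defined states.

```python
import itertools
import math

# Cube vertices in the order listed in the text: (1,1,1), (1,1,-1), ..., (-1,-1,-1).
VERTICES = list(itertools.product((1, -1), repeat=3))


def to_unit_range(x: float, low: float, high: float) -> float:
    """Map x linearly from [low, high] onto [-1, 1], clamping values outside the range."""
    t = (x - low) / (high - low)
    return max(-1.0, min(1.0, 2.0 * t - 1.0))


def analysis_to_point(mean_pitch, phrase_seconds, pitch_range):
    """Hypothetical mapping of three phrase statistics to a 3D coordinate."""
    return (to_unit_range(mean_pitch, 60, 96),        # MIDI pitch
            to_unit_range(phrase_seconds, 0.5, 8.0),  # seconds
            to_unit_range(pitch_range, 0, 24))        # semitones


def nearest_vertex(point):
    """Return the cube vertex with the smallest Euclidean distance to point."""
    return min(VERTICES, key=lambda v: math.dist(point, v))


def select_state(point, targets):
    """Pick the target vector assigned to the nearest vertex (targets in VERTICES order)."""
    return targets[VERTICES.index(nearest_vertex(point))]


# Illustrative mixer states: volume levels (0 or 1) for three performer agents.
mixer_targets = [tuple((c + 1) / 2 for c in v) for v in VERTICES]
point = analysis_to_point(mean_pitch=84, phrase_seconds=2.0, pitch_range=18)
print(point, nearest_vertex(point), select_state(point, mixer_targets))
```

Because the vertices are (±1, ±1, ±1), the nearest vertex is simply the one whose signs match the point’s coordinates; computing the distances explicitly follows the procedure as described and would still work if the states were placed elsewhere in the space.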

Because these are deterministic processes, the system’s behaviour has an element of predictability: similar musical input gestures tend to produce similar ensemble output, which enables the human performer to learn how to shape the agent ensemble’s response.

Applications

This system was developed specifically to realize a collection of notated compositions for flute and computer, called Pandan Musings (Audio 1). All the flute parts are composed and fully notated in a style that is suggestive of early 20th-century composers such as Poulenc and Debussy, and each piece uses its own optimized agent system. Because each piece is played with a fixed score, the deterministic high-level agents enable the fine-tuning of the various targets so as to achieve specific musical results that are approximately repeatable, or at least recognizable. This creates a consistency in the ensemble part, whilst still leaving a lot of room for spontaneous expression and for serendipitous moments to occur.

Another application of this system is an installation called Free Voice, created for VOICES — A Festival of Song (Singapore, 13–15 December 2013). It was located at the side of a large atrium where members of the public congregate. The installation consists of two instances of the system described here running in parallel, each with its own microphones. One consists entirely of “sound FX” performer agents, whilst the other creates musical lines entirely with Karplus-Strong synthesizers. These synthesizers use short bursts of the live signal as excitation, so traces of the input can be discerned in the resulting musical output.
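Seeding a Karplus-Strong string with live input, as described for Free Voice, can be sketched as follows. This is the textbook Karplus-Strong loop (a delay line whose length sets the pitch, fed back through a two-point averaging filter) with a burst of input samples used in place of the usual white-noise excitation; the `damping` parameter, the wrap-around handling of short bursts and the synthetic test burst are assumptions of this sketch, not details of the installation.

```python
def karplus_strong(excitation: list[float], frequency: float,
                   sample_rate: int = 44100, num_samples: int = 44100,
                   damping: float = 0.996) -> list[float]:
    """Plucked-string tone whose delay line is filled with a burst of live input."""
    if not excitation:
        raise ValueError("excitation burst must not be empty")
    # Delay-line length roughly sets the pitch (the averaging adds about half a sample).
    period = max(2, round(sample_rate / frequency))
    # Fill the delay line from the burst, repeating it if it is shorter than one period.
    delay = [excitation[i % len(excitation)] for i in range(period)]
    out = []
    for n in range(num_samples):
        i = n % period
        current = delay[i]
        out.append(current)
        # Two-point average (a gentle low-pass) plus damping makes the tone decay.
        delay[i] = damping * 0.5 * (current + delay[(i + 1) % period])
    return out


# A deterministic stand-in for a captured burst of the live signal.
burst = [((i * 37) % 17 - 8) / 8.0 for i in range(300)]
tone = karplus_strong(burst, frequency=441.0)
```

Since the first period of output is the burst itself, the timbre of the live input colours the attack, and the averaging filter gradually smooths it into a string-like decay.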

While various versions of this system have been developed for specific compositions, it is often used in improvisation contexts. The author usually performs with it using live input from instruments such as the flute, saxophone, Rhodes electric piano or analogue synthesizer, making minor adjustments to the analysis parameters to optimize the system’s performance with each instrument.

Conclusion

The autonomous agent-based design has been a useful tool for the author in creating effective sound transformation systems that have been used in real-world performance situations. A particularly attractive aspect is that the approach produces a blend of extended instrument and interactive composing system: the human performer can leverage his/her standard technique to react to and control the musical result, making each performance a unique experience.

Bibliography

Dodge, Charles and Thomas A. Jerse. Computer Music: Synthesis, Composition, and Performance. Second edition. New York: Schirmer Books, 1997.

Russell, Stuart and Peter Norvig. Artificial Intelligence: A Modern Approach. Second edition. New Jersey: Prentice Hall, 2003.

Rowe, Robert. Interactive Music Systems: Machine Listening and Composing. Cambridge, MA: MIT Press, 1993.

Spicer, Michael J., B.T.G. Tan and Chew Lin Tan. “A Learning Agent Based Interactive Performance System.” ICMC 2003. Proceedings of the International Computer Music Conference (Singapore: National University of Singapore, 29 September – 4 October 2003).
