Considering Interaction in Live Coding through a Pragmatic Aesthetic Theory

Live coders can use æsthetic evaluation to improve their work. A pragmatic æsthetic theory based on the writings of John Dewey, one of the central philosophers of pragmatism, can be employed for such evaluations. Before presenting that theory, this paper gives a working definition of live coding, followed by a brief history of the practice. The next section presents Dewey’s theories of art and valuation and then revises them. Interaction in live coding is then examined from the perspective of this revised theory. The final section uses a concrete example to discuss how such interaction might be evaluated in order to improve live coding performances.

Defining Live Coding

Live coding is the interactive control of algorithmic processes through programming activity (Collins 2011; Ward et al. 2004; Brown 2007). This paper focuses on interacting with code as a performance and does not deal with public programming as a tutorial. By custom, live coding is projected for an audience to see, and it typically involves improvisation (Collins et al. 2003). The musical style is not fixed; live coding is a performance method rather than a genre (Brown and Sorensen 2009).

Live Coding History

The first documented live coding performance was carried out by Ron Kuivila at STEIM in 1985 (Sorensen 2005). The piece, Watersurface, was realized in a “precursor of Formula” (the FOrth MUsic LAnguage, developed by David Anderson and Kuivila; Anderson and Kuivila 1991) and involved Kuivila “writing Forth code during the performance to start and stop processes triggering sounds through [a Mountain Hardware 16-channel oscillator board]” (Kuivila 2013). “[The] original performance apparently closed with a system crash” (Kuivila et al. 2007).

From 1985 to the early 1990s, The Hub performed using a standardized data structure that was shared over a network and interpreted by each performer’s programmes (Gresham-Lancaster 1998). Earlier (1980–82), the League of Automatic Composers gave extended performances with programmes that were tuned as they ran (Brown and Bischoff 2002). The existing literature does not specify the degree to which such tuning required coding.

Scot Gresham-Lancaster cites John Cage and David Tudor as influences (Gresham-Lancaster 1998). Michael Nyman’s account supports this lineage: he contrasts the experimental music of Cage, Steve Reich and various performers belonging to the Fluxus group with what he calls the avant-garde (Nyman 1999). Nyman writes that experimental music focuses on situations in which processes work across a field of possibilities to bring about unknown outcomes (Nyman 1999, 3) and that reveal the uniqueness of particular moments (Ibid., 8). Such music may have an identity located outside the final auditory material perceived by the audience, may treat time in non-traditional ways and may force performers to use skills not typically associated with musicianship (Ibid., 9–13). Experimental music frequently presents performers with unexpected difficulties in performance, and it may resemble a game, giving performers rules to interpret (Ibid., 14–16). The identity of the performers may be ambiguous, and the music may require new ways of listening (Ibid., 19–20). These issues appear frequently in live coding, particularly the need for new performance skills (Brown 2007, 1) and the similarity to games (Magnusson 2011). One thing that appears to separate live coding from this experimental music tradition is that performers in that tradition seem not to have changed the rules during performances, whereas doing so is a central concern of live coding. Further examination of the 20th-century experimental music tradition may reveal additional connections with live coding.

After an apparent gap in the 1990s, live coding activity increased in the first decade of the 21st century.[1. The number of articles on the subject, as well as of presentations at various conferences and festivals, has greatly increased in the past 10–15 years.] Alex McLean’s article “Hacking Perl in Nightclubs” appeared on Perl.com in 2004; in it he describes interacting with running Perl scripts to produce music, including a script that can edit itself (McLean 2004). Other early examples include Julian Rohrhuber’s experiments in live coding with SuperCollider and the duo of McLean and Adrian Ward (later joined by David Griffiths) called Slub (Fig. 2). Systems used for live coding in that decade include McLean’s feedback.pl, Griffiths’ Fluxus and the computer music languages SuperCollider and ChucK. It became common practice to project live coding activity for the audience to see during the performance (McLean et al. 2010). While at least some of those systems continue to be used, additional systems such as Extempore, Tidal, Overtone and this author’s Conductive have appeared, and a growing culture and body of work has developed around live coding.

A Pragmatic Aesthetic Theory

Art as Experience

We will consider live coding æsthetically with a pragmatic æsthetic framework proposed by this author and based on Dewey’s 1934 book, Art as Experience. John Dewey (1859–1952) was an American philosopher and educator and, along with William James and Charles Sanders Peirce, was one of the central figures of the philosophical movement called “pragmatism” (Hookway 2013).

Dewey explains pragmatism as a philosophical approach that defines an idea clearly and examines how it works “within the stream of experience” to show how “existing realities may be changed” and to produce plans for effecting such change. In pragmatism, “theories… become instruments,” so that ideas can be judged valuable or not by their consequences (Dewey 1908). In other words, pragmatism seeks to develop practical ideas that can be put to use in the course of life and, through that use, evaluated and revised. Pragmatism can be contrasted with other philosophical approaches. For example, utilitarianism is a theory of ethics (Driver 2009), while pragmatism is a general philosophical approach. As another example, rationalism holds reason to be the source of knowledge, while pragmatism favours an empirical basis for knowledge (Markie 2013).

Before summarizing this author’s recent revision, it is useful to consider the main points of Art as Experience, an account of æsthetic experience as “essentially evaluative, phenomenological and transformational” (Shusterman 2000, 21). Dewey begins by saying “the actual work of art is what the product does with and in experience” (Dewey 1934, 1). He calls art “a process of doing or making” (Ibid., 48) and an engagement with intention. In Dewey’s theory, “[art] does not denote objects” (Leddy 2012). An external product is only potentially an art experience; whether it becomes one depends on its audience. For Dewey, it is preferable to think of art as an experience, and of a painting or performance as a tool through which that experience can be realized. This leads to a “triadic relation” in which the creator produces something for an audience which perceives it (Dewey 1934, 110). What the creator has produced links the creator and the audience, though sometimes the creator and the audience are the same (the creator as first audience member). For Dewey, experience also means interaction with an environment; this interaction is unavoidably human in every case and creates a feedback loop in which actions and reactions affect one another. Such experiences always have both physical and mental aspects. Experiencing the world means transforming it “through the human context” (Ibid., 257) and equally being transformed by it. According to Leddy, a potentially infinite number of experiences can be derived from a single artifact or situation. Because art is experience, it is always temporal in nature. The æsthetic quality of the experience is emotional and involves both perception and appreciation (Leddy 2012). “[Emotions] are qualities… of a complex experience that moves and changes,” writes Dewey (Dewey 1934, 43). Dewey discards the distinction between what he calls the “fine” and “useful” aspects of art, terms he saw applied to things like painting and industrial design respectively.

Dewey’s Theory of Valuation

Dewey wrote extensively on valuation. His theory can be summarized as follows. The value of something derives from how well it serves the achievement of an individual’s intentions, and from the consequences of achieving those ends through those means. The object of an appraisal is also evaluated by considering its consequences with respect to the individual’s other intentions (Dewey 1939). Everything of value is instrumental in nature: every end is in turn a means for another intention in a continuous stream of experience. Value cannot be assigned in a disinterested manner; rather, it is assigned to an experience according to the context of that experience, including, but not limited to, the culture in which it takes place (Dewey 1939). Such judgements are always in flux and open to revision in light of newly obtained experience. Valuations are instrumental for future valuations and action (Dewey 1922) and are used to control the stream of an individual’s experience (Dewey 1958).

A Revised Pragmatic Aesthetic Theory

Dewey’s theory has some problematic points, and as a result it has been criticized or revised by various writers, including Richard Shusterman (2000) and John McCarthy and Peter Wright (2004), the latter pair using it to explain interaction with technology. In 2013, this author presented a revision of Dewey’s theory in “Towards Useful Aesthetic Evaluations of Live Coding,” as well as a summary of it in “Pragmatic Aesthetic Evaluation of Abstractions for Live Coding.” That summary is presented here with some modifications.

An “affect” is an emotional state. An “affectee” is a person experiencing affects in an interaction with affectors. An “affector” is an object of perception that stimulates affects in an affectee; this can be a physical object or something abstract. A work of art is an affector which in some way was created, organized or manipulated with the intention of it being an affector. A person involved with the creation or arrangement of an affector is an artist.

An art experience is the experience of affects in an affectee as the result of the affectee’s interaction with a set of affectors, with at least one of those affectors being a work of art. The art experience is the experience of those affectors either simultaneously or in sequence. Experience involves a possibly infinite number of affectors arrayed in a network structure in which they influence each other and the affectee either directly or indirectly. Changing the perceived network of affectors changes the nature of the experience.

The value of an affector is connected to the value of the art experience in which it is involved. The value of an art experience is determined by the affects experienced (Bell 2013a) and how well those affects and the other consequences of the experience and its affectors suit the intentions of the affectee.

Applying this æsthetic theory to an art experience means:

- Analysing the intentions held by an affectee;

- Determining the network of affectors that are present in the experience;

- Examining the consequences of the interaction with those affectors, including resulting affects;

- Determining the relationship between those consequences, affects and the original intentions;

- Either (a) assigning value to the experience and its affectors according to those intentions or (b) changing intentions and then repeating this process with the new intentions.
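The five steps above can be read as an iterative procedure. The following Python sketch shows one hypothetical way an evaluator might record such an evaluation. The class and method names (`Affector`, `Evaluation`, `reachable`, `assess`) and the yes/no judgement of whether an affector suits an intention are assumptions made for illustration; the theory itself assigns value through the affectee’s judgement, not through any formula.

```python
from dataclasses import dataclass, field

# Hypothetical data model for the evaluation steps; not part of the theory itself.

@dataclass
class Affector:
    name: str
    # Names of other affectors this one influences directly (edges of the network).
    influences: list[str] = field(default_factory=list)

@dataclass
class Evaluation:
    affectee: str
    intentions: list[str]                                         # step 1
    affectors: dict[str, Affector] = field(default_factory=dict)  # step 2
    # Step 3: consequences (including affects) noted by the evaluator for each affector.
    consequences: dict[str, list[str]] = field(default_factory=dict)

    def reachable(self, start: str) -> set[str]:
        """Affectors that `start` influences directly or indirectly."""
        seen: set[str] = set()
        stack = [start]
        while stack:
            for nxt in self.affectors[stack.pop()].influences:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        return seen

    def assess(self, suits: dict[tuple[str, str], bool]) -> dict[str, list[str]]:
        """Steps 4 and 5(a): for each intention, list the affectors judged to suit it.

        `suits` holds the evaluator's own judgements keyed by (affector, intention);
        pairs that are missing are treated as not suiting the intention.
        """
        return {
            intention: [name for name in self.affectors if suits.get((name, intention), False)]
            for intention in self.intentions
        }

# Example loosely modelled on the performance discussed later in this paper.
ev = Evaluation(
    affectee="performer",
    intentions=["maximize opportunities to improvise"],
    affectors={
        "editor": Affector("editor", influences=["library"]),
        "library": Affector("library", influences=["sound"]),
        "sound": Affector("sound"),
    },
    consequences={"library": ["pattern changes felt mechanical"]},
)
```

Step 5(b) would correspond to replacing `ev.intentions` (for instance with an intention to encourage dancing) and calling `assess` again with fresh judgements.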

This process bears some similarity to the technique for analysis of the experience of technology described by McCarthy and Wright.

Considering Interaction in Live Coding with the Revised Aesthetic Theory

Intentions

Live coders have expressed a diverse set of intentions (Magnusson 2011), though the central, shared intention is the real-time creation and presentation of digital content (Brown 2007), often audio, particularly through the use of algorithms. Some seek the challenge and the chance to improvise, while others desire flexibility of expression. Some live coders want to code collaboratively. Others aim to communicate algorithmic content to an audience, making the coder’s deliberations clear and showing how that activity is guided by the human operator. Some aim to demonstrate virtuosity, to interact more deeply with a computer or to discover new musical structures. Such aims can mean trying to describe generative processes efficiently, in terms of either the computational power or the amount of code needed to express an idea. The intention can even be ironic, opposing the goal of clearly communicating algorithmic processes to the audience (Zmölnig 2012) (Video 1).[2. These intentions and others can be found in representative papers such as those by Brown and Bischoff (2002), Collins et al. (2003), Blackwell and Collins (2005), Sorensen (2005), Brown (2007), Brown and Sorensen (2009), McLean et al. (2010), Magnusson (2011) and Thielemann (2013).]

Interaction in Live Coding

An interaction method is a compound affector, consisting of various aspects such as usability, appearance and historical position. It stands in relation to what is being interacted with: a programming language and its notation, algorithmic processes, a synthesizer and so on. The interaction method is one affector in the network of affectors that makes up the experience, alongside others such as the resulting sound and projected visuals.

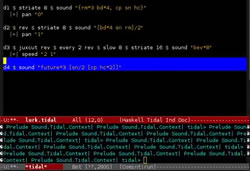

The custom of projecting the coding activity for the audience makes the projected interaction one of the primary affectors in the experience of live coding. Though live coding systems are quite personal (Magnusson 2011), interaction in live coding can be classified into two superficial categories based on this visual display: an orthodox style and idiosyncratic styles. Though not perfectly uniform, the orthodox style involves a text editor and an interpreter; it can be observed in some live coding performances by Alex McLean and Andrew Sorensen, among others. Within this style, some audience members may pay attention to the performer’s choice of editor, syntax highlighting, the presence or absence of visual cues upon code execution and so on. In performance, McLean uses his Haskell library Tidal (Video 2), which, in addition to its pattern representation and manipulation features, allows the use of GHCi (the interactive interpreter of the Glasgow Haskell Compiler) and the Emacs text editor to live code patterns and trigger a synth using the Open Sound Control (OSC) protocol (McLean and Wiggins 2010).
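Because the paragraph above mentions that patterns trigger a synth via OSC, a minimal Python sketch of how an OSC 1.0 message is encoded may make the protocol concrete. It uses only the standard library. The address `/play`, the argument values, and the host and port are hypothetical placeholders; the sketch does not reproduce the messages Tidal actually sends.

```python
import socket
import struct

def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII bytes plus a terminating null, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message with int32 (i), float32 (f) and string (s) arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(type_tags) + payload

def send_osc(message: bytes, host: str = "127.0.0.1", port: int = 57120) -> None:
    """Send one message over UDP; host and port are placeholders for a listening synth."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))

# Hypothetical trigger: play sample "bd" at amplitude 0.5.
msg = osc_message("/play", "bd", 0.5)
```

Calling `send_osc(msg)` would deliver the message to whatever program is listening on that port; the encoding step is kept separate so it can be inspected without a running synthesizer.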

Idiosyncratic styles may or may not involve such visual cues, and they can include graphics, animation or other interactive elements. Examples of idiosyncratic live coding interaction styles include some performances and systems by Griffiths (McLean et al. 2010), Thor Magnusson (Magnusson 2013) and IOhannes Zmölnig (Zmölnig 2012). Griffiths’ Scheme Bricks is a graphical environment for programming in the Scheme language that replaces the editing of Scheme’s signature parentheses with the manipulation of coloured blocks (Video 3). It not only allows the user to manipulate fragments of code graphically, in a way Griffiths feels differs from text editing, but also helps prevent coding mistakes such as unmatched parentheses (McLean et al. 2010).
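The claim that block manipulation helps prevent unmatched parentheses can be illustrated with a small Python sketch. It is not how Scheme Bricks is implemented; the tree model, the functions `render` and `balanced`, and the example program (with its hypothetical `play` and `choose` operators) are assumptions for illustration. The point is that when code is edited as a tree of blocks, parentheses are generated from the structure rather than typed, so the resulting text is balanced by construction.

```python
# A "block" is modelled here as either an atom (a symbol string or a number)
# or a Python list of blocks. Hypothetical model, not Scheme Bricks' own code.

def render(block) -> str:
    """Serialize a block tree to Scheme-style text; parentheses come only from nesting."""
    if isinstance(block, list):
        return "(" + " ".join(render(child) for child in block) + ")"
    return str(block)

def balanced(text: str) -> bool:
    """Check that every '(' has a matching ')' (ignores strings and comments)."""
    depth = 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0

# Dropping one block inside another is a structural edit on the tree,
# so the rendered text cannot end up with unmatched parentheses.
program = ["play", ["choose", ["list", "kick", "snare"]], 0.5]
source = render(program)
```

A text editor offers no such guarantee: a string like `"(play (choose kick)"` can be typed directly, and `balanced` would reject it.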

These software tools and their various features function as affectors, along with the resulting audio and other affectors, to form the experience. The performer experiences them through use and the audience experiences them through projection.

When presented with a projection, live coding emphasizes interaction with the audience in a way that other approaches to live electronic music usually do not. McLean and Wiggins consider the affects experienced by the audience as a result of seeing the projection, suggesting that some affectees may experience alienation while others appreciate the opportunity to see the coder’s interaction with the system (McLean and Wiggins 2011). While their anecdotal evidence indicates that both are possible, Andrew Brown suggests that a positive reaction is in fact more common. However, he also notes that the projection can be perceived as showing off or as a distraction (Brown 2007). One reason may be that some see the projection of code as a flaunting of technical skill, or as detracting from the performance because the code is difficult to understand. This partly depends on the affectee’s background (Bell 2013a). Considering affectees’ intentions is also important: audience purposes can range from dancing to deep contemplation. For example, overemphasis on projected code might be a mismatch for an affectee wanting to dance, while it could be essential for one interested in learning how music can be represented in code.

Whatever the superficial appearance of a live coding performance, many fundamental aspects are shared, each with its own range of variation. For example, some performances may use previously written code, while others are coded from scratch. Another example is the feedback from the interpreter, which may be sparse or verbose.

Interaction with abstractions is one of those fundamental aspects of live coding. One challenge of interaction in live coding is that sounds are made at a higher level of abstraction than when playing a traditional physical instrument (McLean and Wiggins 2010). The coder creates sounds by means of algorithmic processes that Andrew Brown characterizes as “typically limited to probabilistic choices, structural processes and use of pre-established sound generators” (Brown 2007, 1). Those algorithmic processes are mapped to synthesizers. This creates some tension for the performer, who must juggle two somewhat dissimilar types of interaction: one with the algorithmic processes and another with the synthesizer. The interaction with these algorithmic processes functions as an affector for the performer and, to the extent that they are aware of it, for other affectees (such as the audience) as well.[3. More on the æsthetics of these algorithmic processes in live coding can be found in the author’s 2013 article “Pragmatically Judging Generators.”] To control these algorithmic processes, a user employs abstractions and the notation a given language defines to express them.[4. A more complete discussion of these abstractions and how they function as affectors can be found in the author’s 2013 article “Pragmatic Aesthetic Evaluation of Abstractions for Live Coding.”] The level of abstraction varies among live coders, and there is significant diversity in the notations used to express abstractions, such as the parenthesis-filled expressions employed by Sorensen and Griffiths (Video 3), the SuperCollider code used by Rohrhuber and others, and even the different ways Haskell is written by McLean (Video 2) and this author (Video 4). Naturally, the idiosyncratic methods mentioned previously provide somewhat different means of interacting with the abstractions in their respective systems.
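The two layers of interaction described above, one with an algorithmic process and one with a synthesizer, can be separated in a short Python sketch. It assumes a simple step grid and a hypothetical sampler that accepts `time`, `sample` and `amp` parameters; the function names are invented for illustration and do not come from any live coding system mentioned in this paper. The first function makes probabilistic choices (one of the categories Brown names); the second maps the abstract result to synthesis parameters.

```python
import random

def rhythm_process(rng: random.Random, steps: int = 8, density: float = 0.5) -> list[int]:
    """Algorithmic layer: a probabilistic choice of onset (1) or rest (0) for each step."""
    return [1 if rng.random() < density else 0 for _ in range(steps)]

def to_synth_events(onsets: list[int], step_dur: float = 0.125,
                    sample: str = "kick", amp: float = 0.8) -> list[dict]:
    """Synthesis layer: map abstract onsets to parameter sets for a hypothetical sampler."""
    return [
        {"time": i * step_dur, "sample": sample, "amp": amp}
        for i, hit in enumerate(onsets)
        if hit
    ]

rng = random.Random(2013)          # seeded so the example is repeatable
onsets = rhythm_process(rng)       # a list of eight 0/1 values
events = to_synth_events(onsets)   # one parameter dict per onset
```

Keeping the layers apart mirrors the tension described in the text: changing `density` alters the algorithmic process, while changing `sample` or `amp` alters only how its output is voiced.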

Evaluating Interaction in Live Coding

With such a diverse set of intentions and affectees, evaluating the interaction clearly might seem impossible. According to the æsthetic theory presented here, a potentially infinite number of evaluations could be obtained. However, since the theory is intended as a useful tool for suggesting a plan for change, applying it even partially seems worthwhile. One strategy is to focus on one intention of one affectee, keeping in mind that this is only one aspect of that affectee’s total experience. Given a particular performance, the affectors involved can be listed and their consequences examined.

One of the author’s recent performances took place on 11 May 2013 at the Linux Audio Conference in Graz, Austria, in the basement of the Forum Stadtpark (Video 4). One of the more important intentions of the performance was maximizing opportunities to improvise. This and other intentions had been carefully considered in advance and played a significant role in the planning and execution of the performance.

At the performance, an audience of up to 60 people stood in front of a low stage. The lights were turned off when the audio began. This performance of bass music emphasized generative rhythms. A custom live coding system, a programming library called Conductive, was used to trigger a simple sampler built with the SuperCollider synthesizer and loaded with thousands of audio samples. An orthodox style was used: prepared code was loaded into the Vim editor, edited and sent to the Haskell interpreter for execution. Executing this code launched multiple concurrent processes, which spawned events and set other parameters. Abstractions were used to generate sets of rhythmic figures, which were paired with patterns of audio samples and other synthesis parameters; this data was stored, and the concurrent processes read it to synthesize sound events. During the performance, pieces of this data were chosen and supplied to various functions, allowing improvised music-making. The interaction was projected on a large screen behind the performer. By watching it, the audience could to some extent observe the generative processes, which was another of the intentions of the performance.
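The workflow described above, in which rhythmic figures are generated, paired with sample patterns and stored, and then selected during performance for running processes to read, can be sketched in Python. This is an illustration under stated assumptions, not Conductive’s Haskell API: the names `generate_figures`, `store` and `Player`, the 16-step bar and the duration choices are all hypothetical, and the players are stepped in turn rather than run as truly concurrent processes.

```python
import itertools
import random

def generate_figures(rng: random.Random, count: int = 4, steps: int = 16) -> list[list[int]]:
    """Generate `count` rhythmic figures as inter-onset durations (in steps) filling one bar."""
    figures = []
    for _ in range(count):
        durations, total = [], 0
        while total < steps:
            d = min(rng.choice([1, 2, 3, 4]), steps - total)  # never overshoot the bar
            durations.append(d)
            total += d
        figures.append(durations)
    return figures

# Shared store: each key pairs a rhythmic figure with a pattern of sample names.
rng = random.Random(11)
store = {
    f"fig{i}": (figure, ["kick", "hat", "snare"])
    for i, figure in enumerate(generate_figures(rng))
}

class Player:
    """Reads whichever store entry `key` names; the performer can repoint it at any time."""

    def __init__(self, name: str, key: str) -> None:
        self.name = name
        self.key = key

    def bar(self) -> list[tuple[int, str]]:
        """Return (onset step, sample) pairs for one bar of the current figure."""
        durations, samples = store[self.key]
        onsets = itertools.accumulate([0] + durations[:-1])
        return list(zip(onsets, itertools.cycle(samples)))

bass = Player("bass", "fig0")
drums = Player("drums", "fig1")
for player in (bass, drums):   # stepped in turn as a stand-in for concurrent processes
    print(player.name, player.bar())
drums.key = "fig3"             # an improvised change: select different stored data
```

In this sketch, switching between stored patterns is a one-line change, whereas producing genuinely new figures means calling `generate_figures` again; the design choice of pre-generating and storing data is what makes selection quick.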

In this case, the dark performance environment made it somewhat difficult to see the audience. As a result, some trepidation about the audience’s reaction (another intention being to encourage the audience to dance) slightly limited the perceived freedom. This worry occupied attention that might have been directed elsewhere had a dancing audience been visible from the beginning, and it also impaired the ability to modify the code in the editor. The software library made switching between patterns and designing time-varying parameter changes simple, but insufficient familiarity with the library functions, together with the design of the library itself, meant there was no opportunity to generate new rhythm patterns during the performance. The lack of familiarity also meant that the performance relied heavily on modifying and executing code written previously in practice sessions. This produced some sense of restriction: the resulting changes of pattern felt somewhat mechanical and not as free as had been intended. However, it was possible to shape the music in real time and to develop it by reacting to the generative output, so the improvisation still offered some satisfaction. In addition, the text editor and interpreter imposed no sense of restriction. The projection did not function as an affector for the performer, because it was not visible to the performer. For affectees in the audience, however, it did function, though its effectiveness was limited by the small font size used.

Putting this experience to use, productive changes might include simplifying the generation of new rhythm patterns through changes or additions to the library, finding some way to obtain a better view of the audience, and practising more. The original intention to maximize opportunities for improvisation remains an important one.

Conclusion

While obtaining a complete analysis for every intention of even one affectee is a very large task and beyond the scope of this paper, it is hoped that this example analysis shows how the theory can be applied. Given enough planning and resources, it could be applied more comprehensively to a larger group of affectees, in the hope of obtaining useful evaluations that can then be employed to improve future performances.

Acknowledgements

Much gratitude goes to PerMagnus Lindborg for many helpful comments leading to this revised version. I would also like to thank Akihiro Kubota and Yoshiharu Hamada for research support.

Bibliography

Anderson, David P. and Ron Kuivila. “Formula: A Programming Language for Expressive Computer Music.” Computer 24/7 (July 1991), pp. 12–21.

Bell, Renick. “Towards Useful Aesthetic Evaluations of Live Coding.” ICMC 2013: “Idea”. Proceedings of the 2013 International Computer Music Conference (Perth, Australia: Western Australian Academy of Performing Arts at Edith Cowan University, 11–17 August 2013). http://icmc2013.com.au

_____. “Pragmatically Judging Generators.” GA2013. Proceedings of the 16th Generative Art Conference (Milan, Italy: Politecnico di Milano University, 10–12 December 2013). Available online at http://www.generativeart.com/ga2013xWEB/proceedings1/17.pdf [Last accessed 2 February 2014]

_____. “Pragmatic Aesthetic Evaluation of Abstractions for Live Coding.” Meeting No. 17 of the Japanese Society for Sonic Arts (Nagoya, Japan: Nagoya City University School of Design and Architecture, 5 October 2013). Available online at http://www.jssa.info/doku.php?id=journal017 [Last accessed 2 February 2014]

Blackwell, Alan and Nick Collins. “The Programming Language as a Musical Instrument.” PPIG05. Proceedings of the 17th Annual Workshop on Psychology of Programming Interest Group (Brighton, UK: University of Sussex, 28 June – 1 July 2005). http://www.ppig.org/workshops/17th-programme.html

Brown, Andrew R. “Code Jamming.” M/C Journal of Media and Culture 9/6 (December 2006).

Brown, Andrew R. and Andrew Sorensen. “Interacting with Generative Music Through Live Coding.” Contemporary Music Review 28/1 (February 2009) “Generative Music,” pp. 17–29.

Brown, Chris and John Bischoff. “Indigenous to the Net: Early Network Music Bands in the San Francisco Bay Area.” 2002. Available online at http://crossfade.walkerart.org/brownbischoff/IndigenoustotheNetPrint.html [Last accessed 2 February 2014]

Collins, Nick. “Live Coding of Consequence.” Leonardo 44/3 (June 2011), pp. 207–211.

Collins, Nick, Alex McLean, Julian Rohrhuber and Adrian Ward. “Live Coding in Laptop Performance.” Organised Sound 8/3 (December 2003), pp. 321–330.

Dewey, John. “What Does Pragmatism Mean by Practical?” The Journal of Philosophy, Psychology and Scientific Methods 5/4 (1908), pp. 85–99.

_____. “Valuation and Experimental Knowledge.” The Philosophical Review 31/4 (1922), pp. 325–351.

_____. Experience and Nature. London: George Allen and Unwin, Ltd., 1929. Available online at https://archive.org/details/experienceandnat029343mbp [Last accessed 2 February 2014]

_____. Art as Experience. New York: The Penguin Group, 1934.

_____. “Theory of Valuation.” In International Encyclopedia of Unified Science, Vol. II (1939).

Driver, Julia. “The History of Utilitarianism.” In Stanford Encyclopedia of Philosophy. Edited by Edward N. Zalta. 27 March 2009. http://plato.stanford.edu/entries/utilitarianism-history [Last accessed 2 February 2014]

Gresham-Lancaster, Scot. “The Æsthetics and History of the Hub: The Effects of Changing Technology on Network Computer Music.” Leonardo Music Journal 8 (December 1998) “Ghosts and Monsters: Technology and Personality in Contemporary Music,” pp. 39–44.

Hookway, Christopher. “Pragmatism.” In Stanford Encyclopedia of Philosophy. Edited by Edward N. Zalta. 16 August 2008, rev. 7 October 2013. http://plato.stanford.edu/entries/pragmatism [Last accessed 2 February 2014]

Kuivila, Ron. Private email exchange. “a Performance at STEIM in 1985 in Which You Used the Formula Programming Language.” 6 November 2013.

Kuivila, Ron, The Hub, Julian Rohrhuber, Fabrice Mogini and Nick Collins, Volko Kamensky, Dave Griffiths et al. A Prehistory of Live Coding. Toplap CD 1, 2007. http://www.sussex.ac.uk/Users/nc81/toplap1.html [Last accessed 2 February 2014]

Leddy, Tom. “Dewey’s Aesthetics.” In Stanford Encyclopedia of Philosophy. Edited by Edward N. Zalta. 29 September 2006, rev. 4 March 2013. http://plato.stanford.edu/entries/dewey-aesthetics [Last accessed 2 February 2014]

Magnusson, Thor. “Confessions of a Live Coder.” ICMC 2011: “innovation : interaction : imagination”. Proceedings of the 2011 International Computer Music Conference (Huddersfield, UK: Centre for Research in New Music (CeReNeM) at the University of Huddersfield, 31 July – 5 August 2011).

_____. “The Threnoscope: A Musical Work for Live Coding Performance.” ICSE 2013. Proceedings of the 35th ACM/IEEE International Conference on Software Engineering (San Francisco, USA, 18–26 May 2013).

Markie, Peter. “Rationalism vs. Empiricism.” In Stanford Encyclopedia of Philosophy. Edited by Edward N. Zalta. 19 August 2004, rev. 21 March 2013. http://plato.stanford.edu/archives/sum2013/entries/rationalism-empiricism [Last accessed 2 February 2014]

McCarthy, John and Peter Wright. Technology as Experience. Cambridge MA: MIT Press, 2004.

McLean, Alex. “Hacking Perl in Nightclubs.” Perl.com. 31 August 2004. Available online at http://www.perl.com/pub/2004/08/31/livecode.html [Last accessed 2 February 2014]

_____. “Texture: Visual Notation for Live Coding of Pattern.” ICMC 2011: “innovation : interaction : imagination”. Proceedings of the 2011 International Computer Music Conference (Huddersfield, UK: Centre for Research in New Music (CeReNeM) at the University of Huddersfield, 31 July – 5 August 2011).

McLean, Alex, Dave Griffiths, Nick Collins and Geraint Wiggins. “Visualisation of Live Code.” EVA London 2010. Proceedings of the 2010 Electronic Visualisation and the Arts Conference (London, UK: BCS, 5–7 July 2010).

McLean, Alex and Geraint Wiggins. “Tidal — Pattern Language for the Live Coding of Music.” SMC 2010. Proceedings of the 7th Sound and Music Computing Conference (Barcelona: Universitat Pompeu Fabra, 21–24 July 2010). http://smc2010.smcnetwork.org

Nyman, Michael. Experimental Music: Cage and Beyond. Music in the Twentieth Century. 2nd ed. Cambridge: Cambridge University Press, 1999.

Shusterman, Richard. Performing Live: Aesthetic Alternatives for the Ends of Art. Ithaca: Cornell University Press, 2000.

Sorensen, Andrew. “Impromptu: An Interactive Programming Environment for Composition and Performance.” ACMC 2005. Proceedings of the 2005 Australasian Computer Music Conference (Brisbane, Australia: Queensland University of Technology, 12–14 July 2005). http://acma.asn.au/conferences/acmc-2005

Thielemann, Henning. “Live Music Programming In Haskell.” LAC 2013. Proceedings of the 11th Linux Audio Conference (Graz, Austria: IEM, 9–11 May 2013). http://lac.linuxaudio.org/2013

Various authors. “TOPLAP Website.” http://toplap.org [Last accessed 2 February 2014]

Ward, Adrian, Julian Rohrhuber, Fredrik Olofsson, Alex McLean, Dave Griffiths, Nick Collins and Amy Alexander. “Live Algorithm Programming and a Temporary Organisation for its Promotion.” README 2004. Proceedings of the README Software Art Conference (Aarhus, Denmark: Aarhus University, 23–24 August 2004), pp. 243–261.

Zmölnig, Iohannes M. “Pointillism.” 2012. http://umlaeute.mur.at/Members/zmoelnig/projects/pointillism [Last accessed 2 February 2014]
