Interview with R. Benjamin Knapp and Eric Lyon

The Biomuse Trio in conversation

The Biomuse Trio (R. Benjamin Knapp, Eric Lyon, Gascia Ouzounian) was formed in 2008 to perform computer chamber music integrating performance, laptop processing of sound and the transduction of bio-signals for the control of musical gesture. The work of the ensemble encompasses hardware design, audio signal processing, bio-signal processing, composition, improvisation and gesture choreography. The Biomuse Trio has performed and lectured across North America and Europe, including at BEAM Festival, CHI, Diapason Gallery, Green Man Festival, Issue Project Room, NIME, Science Gallery Dublin, STEIM and TheatreLab NYC. Lyon and Ouzounian are Lecturers at the Sonic Arts Research Centre (SARC) at Queen’s University Belfast. Knapp is founding director of the Institute for Creativity, Arts and Technology (ICAT) and Professor of Computer Science at Virginia Polytechnic Institute and State University. This interview took place in Belfast on 18 and 20 January 2012.

Forming a Biomusic Ensemble

[Gascia Ouzounian] Although I’m also a member of the Biomuse Trio, for this interview I’d like to find out more about your research in biomusic in general, and about your work with the Biomuse Trio in particular. How did the idea to form an ensemble that would perform using bio-signals first come about?

[Eric Lyon] It came about as a result of different conversations we started having in 2008. I knew that Ben was very involved in interface design and had built the BioMuse, which I had come across in my travels, particularly in Japan. 1[1. The BioMuse is a bio-signal interface and performance device that currently comprises a set of modular sensors that enable real-time processing of EEG, EMG, EOG and ECG signals. It has undergone several iterations since 1992, when it was introduced by BioControl Systems (co-founded by R. Benjamin Knapp and Hugh S. Lusted). For more on the BioMuse, visit the BioControl Systems website. See also Knapp and Lusted 1990.] I shared my trepidation with him about writing for special-purpose hardware: I had seen several of my colleagues write for special-purpose instruments, often of their own design, and then the instrument would be neglected, would break, would be stored in a closet — and that would be the end of that piece. So writing for custom hardware instruments seemed less appealing than writing for consumer grade computers.

For myself, I wrote instruments in software that were also custom designed, but software has proven surprisingly durable. However, things are changing in the world of hardware and the same things that happened with software in the 1990s happened a decade later with hardware: the process became de-institutionalized. Knowledge and resources that had previously existed primarily within universities and corporations made their way to the street. The Arduino board, for example, made it much easier to experiment with hardware.

What I understood from talking with Ben was that, at this point, you weren’t really writing for a single instrument anymore. Rather, you were writing for a model of an instrument. The current hardware would be one implementation of that model and five years later you could build a better implementation of the same model. I realised that the issue of obsolescence wasn’t as scary as it once seemed, because now you were writing for an idea that was more or less permanent. That’s how we got started.

[GO] How did those conversations lead to forming an ensemble?

[EL] We thought it would be interesting to start a group that would perform pieces composed for biosensors. From the start, a primary focus for the group was to aim for as much precision as possible in the use of these instruments: to notate very precisely what the biosensor performer was doing, just as with the other instruments of the ensemble.

[Benjamin Knapp] One of the first things we did was to write a set of gestures — all the gestures we could imagine coding at the time — on a board in my office, which reinforced the idea of being agnostic towards the hardware. We were talking about gestures like arm rotation, flexion of a muscle and even whether those gestures would be continuous gestures or discrete actions. That was from the beginning.

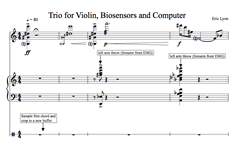

Composing Gesture: Trio for Violin, Biosensors and Computer

[GO] The first piece you developed for the ensemble was Trio for Violin, Biosensors and Computer. You composed and coded the first two movements in 2009 and you’re currently working on adding a third movement. Can you describe what happens in that piece?

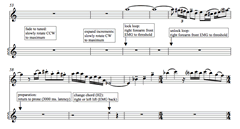

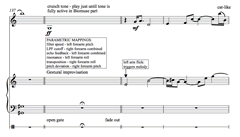

[EL] The piece is built entirely on the sound of the violin, using live sampling. The electronic part is built exclusively from violin sounds that are captured during the performance. The first movement starts with a somewhat aggressive chord played on the violin. After I capture it, the violin starts playing a melody and Ben triggers stacks of the sampled chord on top of the violin part. There are a few other notes that also get captured. The sampled sounds get used almost immediately and the gestures are notated precisely in the score. For example, there might be a “left forearm clench” on the second beat of a bar.

Those were the key elements of that piece: that it was created from sounds sampled live from the violin and that the gestures of the biosensor performer are precisely notated along with the rest of the piece.

[GO] How did Trio for Violin, Biosensors and Computer develop in terms of the collaboration between composer and biosensor performer?

[EL] An interesting aspect of the collaboration process between Ben and myself was that there were different sensors on different parts of Ben’s body. There were two EMG sensors on both arms and an accelerometer on each forearm, so there were many different kinds of sensor input just based on motion and muscle tension. However, I could only compose to the individual sensor. For example, we might need to have a trigger from the right forearm, but there were many different ways that that trigger could be done physically, so Ben became a collaborator in terms of creating the choreography of the gestures. I composed the music, the rhythmic structure and the DSP and then the actual way that it was performed was up to Ben.

[BK] The process was symbiotic. It was a kind of choreography, because a choreographer would have an idea of how the body can be articulated and what can or can’t be done. On top of that, it’s not just which gestures are possible, but what we can derive from the gestures. I sometimes have preconceived ideas of what I want to have happen with the gestures and Eric sometimes has a preconception about what he wants to do with the piece.

Exploring particular gestures (for example, discrete versus continuous gestures) has its own depth to it. One might want a very discrete gesture to trigger something and then for a continuous motion to begin from there. So the discrete gesture has to essentially calibrate the continuous gesture at that point. That’s the level of detail we had to explore with each gesture: every aspect of how the gesture is going to be made and how the data is going to be used.

There are no directives in the composition that are instrument specific. It is all about annotating gesture, which reinforces what Eric said right at the beginning, that everything we do is hardware agnostic. You could see the piece being performed twenty years from now with a whole other piece of software but the same gestures.
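Editorial illustration: the pattern Knapp describes above, in which a discrete gesture calibrates the continuous gesture that follows it, can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the Trio's actual software: the class name, the threshold, the span and the idea of a single normalized "position" value are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CalibratedGesture:
    """Continuous control that is re-zeroed by a discrete trigger."""
    trigger_threshold: float = 0.6    # hypothetical normalized muscle-tension level
    span: float = 0.5                 # hypothetical range of travel after the trigger
    baseline: Optional[float] = None  # position captured when the trigger fires

    def update(self, emg: float, position: float) -> Optional[float]:
        """Return a 0..1 control value, or None before the trigger has fired."""
        if self.baseline is None:
            if emg >= self.trigger_threshold:
                # The discrete gesture fixes the origin of the continuous one.
                self.baseline = position
                return 0.0
            return None
        offset = (position - self.baseline) / self.span
        return max(0.0, min(1.0, offset))
```

Because the control value is measured from wherever the arm happened to be at the trigger, the same notated gesture yields the same musical range regardless of the performer's starting posture.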

[GO] Can you describe some of the gestures you perform as the biosensors performer in Trio for Violin, Biosensors and Computer?

[BK] Some of the discrete gestures are things like the “right forearm throw”: it’s a very discrete gesture [motions throwing right arm from chest to floor in a diagonal motion]. That’s the gesture we wanted, but then there is the question: how do you make sure you grab only that gesture? That involves a little bit of choice of the gesture and also the choice of the sensors themselves. We started out using muscle tension on that gesture. However, it would have probably been better to use accelerometry.

One of the continuous gestures we use involves two parallel hands [motions holding an imaginary ball]. Each front arm tension separately controls a continuous parameter and the distance between the hands controls another dimension. The most interesting thing we’ve probably done to date uses eight simultaneous continuous control dimensions.

[EL] One of the most beautiful things about the process is that everything is correlated through human performance. When you’re controlling eight parameters, all of them are in motion as the gesture evolves, so the kind of control you get is different than if you had eight discrete sensors you were trying to manipulate individually (Video 1). We felt that, in this sense, we had discovered a new kind of controller in this use of the human body.

Working with Bio-Sensors

[GO] What are some of the challenges you’ve encountered in composing for biosensors?

[EL] I came into this project with unreasonable expectations, as composers often do. My experience with performance was conditioned by thinking about the reliability of, say, a percussionist. So when I would write something like “attack on the second beat of the bar,” I assumed that would not be a problem.

But because of the nature of not just the instrument, but interpreting the signals that come from the instrument, determining where a downbeat is makes the kind of precision attacks I had called for in the piece sometimes difficult. This happens in two ways: first, getting absolute precision of attack, and second, because of the different kinds of variability. There is the possibility of false triggers or missing triggers. Essentially, it took me a while to learn what was idiomatic to the instrument and what was not.
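Editorial illustration: false and missed triggers of the kind Lyon mentions are a general property of threshold-based detection. The sketch below shows one common mitigation, hysteresis combined with a refractory period. It is an assumption for illustration only; the interview does not say how the Trio's software detects attacks, and the function name and all parameter values are hypothetical.

```python
def detect_triggers(envelope, on_level=0.6, off_level=0.3, refractory=3):
    """Return the sample indices at which a discrete gesture is detected.

    envelope   -- sequence of smoothed, normalized muscle-tension values
    on_level   -- level that must be reached to fire (hypothetical value)
    off_level  -- level the signal must fall below before re-arming
    refractory -- minimum number of samples between two triggers
    """
    triggers = []
    armed = True
    last = -refractory
    for i, value in enumerate(envelope):
        if armed and value >= on_level and i - last >= refractory:
            triggers.append(i)
            armed = False
            last = i
        elif not armed and value < off_level:
            # Only re-arm once the muscle has clearly relaxed.
            armed = True
    return triggers
```

With the envelope `[0.1, 0.7, 0.55, 0.65, 0.2, 0.1, 0.8, 0.1]`, a plain threshold at 0.6 would fire three times, whereas this version reports only the two intended attacks. The trade-off is that a gesture performed too gently never reaches `on_level` and is missed entirely.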

[BK] The majority of the problem solving we’ve had to do is probably not due to problems with hardware or software, but understanding what is or isn’t idiomatic to the instrument.

[EL] That’s the beauty of writing for an abstraction of gesture. Even the gestures themselves can be swapped in and out until we find one that’s right.

[GO] Ben, can you describe some of your designs relating to the BioMuse, and the history of those designs?

[BK] The first designs on creating an interface between human physiology and the computer were based on medical equipment. The early designs were sticky electrodes connected by cable to a medical-looking box. What we did in our first designs of the BioMuse system was to move the physiological interface out of the hospital and into a performance environment. The course of the evolution of the BioMuse was to make it more acceptable for performance in any venue and less like a medical device. So the changes that came were: making it smaller, using dry electrodes and making it modular (see Knapp and Lusted 2005).

One of the big changes in the world (besides the microelectronics that allowed us to do those things) was the ubiquity and the programmability of the PC. We could move a lot of the signal processing to the PC itself. The modern incarnation of the instrument that’s used for the Biomuse Trio is a modular set of pieces that use wireless transmitters — a set of bands I’ve designed that have the electronics embedded inside the bands themselves and dry electrodes. This allows us to pick and choose the bands we want to use for each performance. We can have multiple transmitters, which allows for a large number of bands to be placed on different parts of the body (Fig. 4). The majority, then, of all of the signal processing occurs on the laptop itself.

[GO] What are the different bands you’ve used in Biomuse Trio compositions?

[BK] For Trio for Violin, Biosensors and Computer there are two EMG bands on each arm (front and back) and two accelerometers on each arm to get position. For Stem Cells we use a headband that picks up facial EMG, EEG and eye motion, a chest wrap for heart rate, a finger electrode for GSR, a chest wrap for breath and the whole montage from Trio. In Stem Cells, members of the audience are also wired for GSR and heart rate, using finger electrodes. That’s the most elaborate montage we use. For Music for Sleeping & Waking Minds we use frontal EEG and an accelerometer on the heads of the four performers. 2[2. For information on the Music for Sleeping & Waking Minds project, see the author’s Vimeo page and Ouzounian et al., 2012.]

Interfacing with Emotion: Stem Cells and Reluctant Shaman

[GO] Stem Cells is an interesting case in that it involved arranging an existing electroacoustic composition for performance by the BioMuse. How was that work conceived?

[BK] In 2009, there was a conference on music and emotion at Durham University. 3[3. ICME 2009 — International Conference on Music and Emotion (Durham University, 31 August – 3 September 2009).] I approached Eric to do something for that and we brainstormed on whether to start a new piece from scratch or start from something existing.

[EL] We came to the conclusion that we could learn more about the instrument by arranging an existing piece, rather than composing a new piece in which we could play to the strengths of the instrument (and bypass its weaknesses).

The basic idea behind Stem Cells was that, as with actual stem cells, you take an undifferentiated piece of musical material and gradually refine it until it takes on a certain particularity. The piece starts out with four sine waves and through iterated DSP the sounds are made increasingly rich and increasingly particular, musically speaking. In the original version, all of this was hidden behind the laptop. Now we had a chance to dramatically reveal those actions to the audience. Because this was a conference on music and emotion, we took on the additional challenge of using emotion as a way of controlling the music. 4[4. For more on Stem Cells, see Knapp and Lyon 2011.]

[GO] What kinds of variables are you looking at when you use emotion (or the physiological indicators of emotion) as a controller, and how did you start working with emotion as an interface?

[BK] The first piece I worked on to try to prototype using emotion was The Reluctant Shaman, which was a music theatre piece. 5[5. For more on Reluctant Shaman, see Knapp, Jaimovich and Coghlan 2009.] It was a story of an older gentleman who visited an Irish sacred site. What he imagined had taken place at that site was musically articulated by a live traditional music group. What he would hear if he were at the sacred site was conveyed to the audience via earphones. So the audience heard the sounds that were actually occurring at the site: his breathing, his heartbeat, environmental sounds, the sounds of his footsteps. At the same time, live performers in the performance space played the music the main actor was imagining when he was at the site. In Reluctant Shaman, the performers themselves were not wired for physiology, other than the main actor (the older man). The main actor was wired to measure gesture, but not emotion. Emotion was inferred from hearing his heartbeat, hearing his breathing, hearing his footsteps.

Twenty members of the audience were wired for heart rate and Galvanic Skin Response (GSR), also known as ElectroDermal Response, or EDR. We measured this using sensors embedded in the arms of these audience members’ chairs (Fig. 5). The original thought was to use emotion as a way to control sound and music, but instead I decided to first just investigate the control of lighting. The music the audience was listening to changed mood, and the ambient lighting changed from blue to amber to red, depending on the emotional state of the audience.

[GO] How did that interface work?

[BK] We extracted the average heart rate and the average GSR (change in the skin sweat) of the audience; we combined those two variables together and used that amplitude to control lighting.
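Editorial illustration: the mapping Knapp outlines (audience averages of heart rate and GSR combined into one amplitude that drives the lighting from blue to amber to red) might look roughly like the following. The normalization ranges, the equal weighting and the RGB values are assumptions; the interview does not give the actual formula.

```python
def normalize(value, low, high):
    """Scale value into 0..1 given an expected range, clamping at the ends."""
    return max(0.0, min(1.0, (value - low) / (high - low)))


def audience_arousal(heart_rates, gsr_levels,
                     hr_range=(60.0, 100.0), gsr_range=(0.0, 1.0)):
    """Average each measure over the audience and combine into one amplitude."""
    mean_hr = sum(heart_rates) / len(heart_rates)
    mean_gsr = sum(gsr_levels) / len(gsr_levels)
    # Equal weighting is an assumption made for this sketch.
    return 0.5 * normalize(mean_hr, *hr_range) + 0.5 * normalize(mean_gsr, *gsr_range)


BLUE, AMBER, RED = (0, 0, 255), (255, 191, 0), (255, 0, 0)


def lighting_color(amplitude):
    """Interpolate blue -> amber -> red as amplitude goes from 0 to 1."""
    if amplitude <= 0.5:
        start, end, t = BLUE, AMBER, amplitude / 0.5
    else:
        start, end, t = AMBER, RED, (amplitude - 0.5) / 0.5
    return tuple(round(a + (b - a) * t) for a, b in zip(start, end))
```

For example, `lighting_color(audience_arousal([80, 80], [0.5, 0.5]))` lands on the amber midpoint. Averaging over twenty people smooths out individual spikes, which is consistent with the slow lighting changes described in the next answer.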

[GO] Did those variables vary a lot during the course of the piece?

[BK] It’s amazing. If you watch the video of the piece in real time, you almost can’t see the change in lighting. But if you scrub through it very quickly (the piece is 20 minutes long), you can actually see quite a bit of change in lighting with each of the different segments.

[GO] Why do you think emotion-driven interfaces aren’t used more often in music?

[BK] That’s a question one could ask of all of biosignal interfacing. Is the challenge, as Eric said at the beginning, one in which composers are waiting for the interfaces to become more ubiquitous, so that they’re able to compose with them at a more abstract level? Nobody has really answered the research challenge of how these things are to be used in composition. And that was the challenge of Stem Cells.

Performing Emotion: Ensemble Interactions

[GO] What does the emotional interface consist of in Stem Cells, and how was emotion used as a compositional element in that work?

[BK] There are two components to the interface. The performer has several measures of physiological indicators of emotion. We used facial EMG, breath, heart rate, GSR and arm muscle tension. That’s the performer. For the audience we used the next generation of measurement system that measured heart rate and GSR, as in Reluctant Shaman, but in Stem Cells the sensors weren’t tied to the chairs. Rather, they floated free. We thought we were being smart with the chair sensors, but the audience felt they were being constrained by having to keep their arms on the chairs.

[EL] We found a few key ways for emotion to work as a driver for the piece, in transition from one section to the next. At the outset, Ben moves himself from a state of tranquillity to extreme agitation and anger. As he follows that emotional trajectory the piece gradually introduces the four sine waves that serve as the “stem cells” for the composition. The sequence of the stem cells is pre-determined, but the rate at which they’re introduced is controlled entirely by the rate at which Ben moves through the emotional space.

That was, for me, an interesting use of emotion. It was almost the inverse of how music and emotion usually work. Ordinarily, music affects emotion. Here we did the opposite. We used a very specific emotional trajectory generated internally through visualization and used it to drive a rather abstract musical pattern. At the end of the piece, we essentially reversed that process. At the end, the piece moves through a very high level of excitement: there are drumbeats, all kinds of melodies that are happening — a very high level of energy. At a certain point, all of that releases to a tremolando chord and the speed of the tremolo is controlled by Ben’s emotion as he gradually relaxes. The music gradually slows down and thins out until you wind up on a single note (Video 2), so that there’s a kind of symmetry between the beginning and the end of the piece.

There’s also a very striking moment in the middle, where we use a very different kind of material. Most of the sounds in Stem Cells are synthetic, but at a certain point in the piece, we used a sample of a woman saying “I’d like to go from where I am now to somewhere else.” The meaning of that sample mirrors the kinds of trajectories we’re looking at in the piece generally.

At a certain moment, very dramatically, Ben samples his emotional state, stands up and allows his emotional state to drive the pitch level of part of the sound. Then the audience is also engaged. At that point, we have an emotional ensemble piece. Each individual member of the audience controls the pitch level of a fragment of the vocal sample and then Ben walks out into the audience and “plays” the audience, essentially. As he moves closer to each individual member of the audience they react and become more agitated and you can hear the pitches of their particular voice part start to fly up. To my mind that’s an extremely interesting passage, because it’s emotion-driven music, but ensemble emotion-driven music, in which each individual voice of the audience is heard.
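Editorial illustration: the ensemble passage above, in which each wired audience member controls the pitch of their own fragment of the vocal sample, can be sketched as a per-person mapping from an arousal estimate to a playback rate. The 0..1 arousal scale, the one-octave range and the function names are assumptions for illustration, not details given in the interview.

```python
def playback_rate(arousal, max_semitones=12.0):
    """Map a 0..1 arousal estimate to a playback-rate multiplier.

    0.0 leaves the fragment at its original pitch; 1.0 raises it by
    max_semitones (a hypothetical range, one octave by default).
    """
    arousal = max(0.0, min(1.0, arousal))
    return 2.0 ** (arousal * max_semitones / 12.0)


def ensemble_rates(arousal_by_member):
    """Give every wired audience member their own voice in the texture."""
    return {member: playback_rate(a) for member, a in arousal_by_member.items()}
```

Keeping one voice per person, rather than averaging, is what lets an individual listener's pitch "fly up" audibly as the performer approaches them.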

[GO] How many people in the audience are being engaged at that point?

[BK] We’ve had between nine and fifteen.

[GO] Are they aware of how they’re contributing to the music?

[EL] No, not at the outset.

[BK] Debriefing them afterwards, some had no idea. The majority thought there was some sort of magical position detector that said when I was close to them; another subset understood that their stress level was controlling the change in the sound.

[GO] Ben, what was the experience like for you to follow a predetermined emotional trajectory, or an emotional “score” in Stem Cells?

[BK] The first trajectory involves moving between tranquillity and extreme agitation and anger. In hindsight, it’s mildly problematic to start a piece when you’re supposed to be completely serene, when of course that’s when you’re most nervous. The piece worked best when I could really relax. And the beautiful thing was the reward of having these sine waves appear very slowly and sequentially. In architecting emotional change, we tried to make the rest of the emotional changes more natural.
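Editorial illustration: in the opening of Stem Cells, as Lyon describes it earlier, the order of the four sine waves is fixed but their rate of introduction follows the performer's emotional trajectory. A minimal sketch of that pacing follows. The frequencies are placeholders, the 0..1 "progress" scale is hypothetical, and the choice never to retract a wave once it has sounded is an assumption of this sketch.

```python
class StemCellIntroducer:
    """Introduce a fixed sequence of sine waves as emotional progress grows."""

    def __init__(self, frequencies=(220.0, 330.0, 440.0, 550.0)):
        self.frequencies = tuple(frequencies)  # placeholder pitches, fixed order
        self.peak = 0.0                        # furthest progress reached so far

    def update(self, progress):
        """Return the frequencies sounding for a 0..1 progress estimate."""
        self.peak = max(self.peak, min(1.0, progress))
        steps = len(self.frequencies) + 1
        count = min(len(self.frequencies), int(self.peak * steps))
        return list(self.frequencies[:count])
```

A performer who moves quickly from calm to agitation hears all four waves arrive in close succession; one who takes several minutes hears them "appear very slowly and sequentially", as Knapp puts it.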

[GO] More natural in terms of the pacing or sequencing?

[BK] It’s unnatural to start the piece relaxed and it’s unnatural to get to that level of anger and agitation. It’s gruelling and exhausting. So, for example, a natural transition was the part that Eric described in which I get up and walk into the audience and then walk back from the audience to the stage. The natural changes that occur there were increasing anxiety as I walked to the audience and absolute relief when I walked back on stage and sat down.

Music, Sensors and Emotion (MuSE)

[GO] Ben, you’ve done a number of experiments wherein people’s emotional states are tracked as they listen to different recordings of music. There was a large-scale experiment at the Science Gallery in Dublin, which was part of the Bio-Rhythm exhibit you co-curated in 2010. 6[6. For information on the Bio-Rhythm: Music and The Body exhibit and festival, visit the Science Gallery’s Bio-Rhythm website.]

[BK] We started there and we did a small study in Belfast, a small study in Italy, a large study in New York City at the World Science Festival and we’re doing a permanent study in Bergen, Norway. To date we’ve had over 7,000 people participate.

[GO] What do these studies involve?

[BK] The experiment has evolved. It started out as four randomly chosen songs, each lasting about ninety seconds. Participants would have two sensors on their hands while they listened to the songs: one sensor would measure heart rate and the other would measure GSR. In between each song, they would be asked questions about everything from their familiarity with the song, their like or dislike of the song, to their assessment of their own emotions. Before they listened to any songs we collected data about demographics and musical preference. At the end of all the songs we asked questions about which song they paid most attention to, which song they liked the best, et cetera. The changes in the experiment have been reducing the number of songs from four to three and tightening up some of the questions so the experiment doesn’t take as long.

[GO] What have the findings been, and who has been involved in designing or implementing these studies?

[BK] We’ve found that physiological indicators of emotion absolutely evolve with the changing aspects of the music. We have also found large-scale agreement in people’s assessment of the emotions that they feel when they listen to different songs. Niall Coghlan, Javier Jaimovich and Miguel Ortiz-Pérez are some of the people who have been involved in this research.

[GO] They’re part of the Music, Sensors and Emotion (MuSE) group you started at SARC in 2008 and that’s now operating more internationally. 7[7. For information on the Music, Sensors and Emotion research group, visit the MuSE website.] MuSE has also done performances and experiments at different festivals. Can you describe one of those projects?

[BK] The most recent project was at Electric Picnic. We had a version of the “high striker”, which is a carnival game that you hit and it goes “ding”. In our version we had a high striker controlled by people’s muscle tension. It brings up the issue of calibration, which is something that Eric and I have wrestled with. It’s important that every performer has the BioMuse system calibrated to them. The nice thing with High Striker was that we set ranges such that, unlike with the traditional high striker, a little girl could ring the bell, because it was calibrated for little girls, and a very fit young man might not hit the bell, because he’s not as strong as other men in the “young male” category.
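Editorial illustration: the per-category calibration Knapp describes for the High Striker amounts to rescaling each participant's muscle tension against a range chosen for their group. The sketch below assumes a normalized tension reading and invents the category table and its values; none of these numbers come from the interview.

```python
# Hypothetical (low, high) muscle-tension ranges per participant category.
CALIBRATION = {
    "young girl": (0.05, 0.30),
    "young male": (0.20, 0.95),
}


def striker_height(tension, category, table=CALIBRATION):
    """Return how far up the tower the puck travels, from 0.0 to 1.0."""
    low, high = table[category]
    return max(0.0, min(1.0, (tension - low) / (high - low)))


def rings_bell(tension, category, table=CALIBRATION):
    """The bell rings only when the puck reaches the top of the tower."""
    return striker_height(tension, category, table) >= 1.0
```

Under these made-up ranges, `rings_bell(0.35, "young girl")` is true while `rings_bell(0.6, "young male")` is false, which mirrors the outcome described above: the result reflects effort relative to one's own group rather than absolute strength.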

[GO] Have there been applications of this research outside of music?

[BK] Yes. One of the applications we’re exploring is: can we look at the emotional state of older people and predict health changes before they happen?

[GO] There was also Biosuite, an emotion-controlled film that was screened at South by Southwest and the Belfast Film Festival. Can you describe that project? 8[8. Visit the Film Trip’s Biosuite & Unsound page for more information about the Biosuite project.]

[BK] There’s a local filmmaker in Belfast, Gawain Morrison, who was friends with Miguel Ortiz. 9[9. Also see Miguel Ortiz’ article “A Brief History of Biosignal-Driven Art: From biofeedback to biophysical performance” in this issue of eContact! for an historical overview of the evolution of biosignal-driven art.] They began talking about how to use this emotional audience measurement with film and they ended up creating a 10-minute horror movie where there were multiple paths through the movie. Those paths were chosen based on the audience’s physiological indicators of emotion.

Emotional Feedback: Biospaces

[GO] One of the most interesting experiences I’ve had performing with the Biomuse Trio has been in Biospaces, a piece you’ve been developing more recently for Biomuse performer, instrumentalist and wired audience. What was the idea with this composition?

[EL] The idea was to explore the use of emotion as a controller for music: to explore this using a Biomuse performer who has good control over his physiology (so he can produce the physiological indicators of emotion through self-control or visualisation) and to bring the performer into conversation with an instrumentalist and with an audience that’s less trained and who would react more spontaneously to musical stimuli.

We were very interested in the possibility of feedback. In Stem Cells, we used the physiological indicators of emotion to drive music. In Biospaces we’re much more interested in creating a feedback loop where the performer’s emotion drives the music and the audience responds or reacts to it. We would hear very distinct changes in the music depending on either the individual states of each audience member or the aggregate state of the group.

It’s a very hard problem when you have a feedback loop to have any sort of control, but we did have some very promising responses with some audiences in New York City. In those cases, you were improvising on violin and had the freedom to play in different ways you felt would be evocative of different emotional states. In the most promising moments you could really hear the different voices of the audience, which were harmonizing the violin, move in very similar ways in response to changes in the violin improvisation. And you could really hear clear changes in the texture of the music as the audience moved to a different aggregate state.

We’ve been prototyping for a more refined version of the piece, where Ben serves almost as an instructor. We play a simple sound to him and, as his emotional state changes, the sound changes in very simple ways. After that demonstration is done — in a simple way, not explaining, just performing — we set the audience loose, to do the same thing in an ensemble fashion. That part is a prototype. We haven’t yet tried it with a live audience.

[GO] How did people respond to earlier versions?

[EL] With some early versions of Biospaces we actually had audience members come to us and say that they felt as if they were part of a wave, that their emotions were being played by the violinist as a performer and that it was a very unique experience. We’re absolutely at the beginning of exploring the possibilities.

[GO] What are you most interested in learning about through doing this research?

[EL] The experience of emotion in music is such a rich, important, powerful thing for everybody (or at least everybody who likes music), yet when we’re looking at the physiological indicators of emotion, we don’t have much to go on: we’re primarily looking at heart rate and GSR.

This project has made me reflect on two particular senses of musical emotion. From an intuitive standpoint, sometimes the way a musical performance is going just feels right; it stimulates, or excites, or transports you. This happens during the composition process as well. I experience extremely intense emotions when I’m composing. The second sense is much more technical, involving an on-going attempt to quantify in physiological terms what it means to experience emotion.

As a research composer — which is to say, one who uses composition as research into new possibilities — it’s just a fascinating project and one in which the outcomes are really unknown. In many cases we simply had no idea whether something would work until we tried it. Another intriguing thing about emotion is it doesn’t work the same way for everyone. People have very different reactions, so composing for ensemble emotion brings out the diversity of experience in a way that, to my mind, is completely unique and perhaps unprecedented: to expose all that experiential variety in the music itself.

[BK] I want to learn how we can create a musical instrument that connects people emotionally. When I first started doing this research, I was interested in exploring the language of physiological and emotional interface design for musical, artistic or therapeutic applications. My first questions were about the tensions between sonification and musicalization of bio-signals (i.e. not simply sonifying the signal, but designing a new interface that could enable the musician to create using new dimensions of exploration). With the concept of the integral music controller and my more recent work in mobile environments, I am more specifically interested in the larger picture questions of emotional connection and empathy.

Bibliography

Knapp, R. Benjamin and Hugh S. Lusted. “A Bioelectric Controller for Computer Music Applications.” Computer Music Journal 14/1 (Spring 1990) “New Performance Interfaces (1),” pp. 42–47.

_____. “Designing a Biocontrol Interface for Commercial and Consumer Mobile Applications: Effective Control within Ergonomic and Usability Constraints.” HCI International 2005. Proceedings of the 11th International Conference on Human-Computer Interaction (Las Vegas NV, USA: HCI International, 22–27 July 2005).

Knapp, R. Benjamin, Javier Jaimovich and Niall Coghlan. “Measurement of Motion and Emotion during Musical Performance.” ACII 2009. Proceedings of the IEEE International Conference on Affective Computing and Intelligent Interfaces (Amsterdam: IEEE Computer Society, 10–12 September 2009).

Knapp, R. Benjamin and Eric Lyon. “The Measurement of Performer and Audience Emotional State as a New Means of Computer Interaction: A Performance Case Study.” ICMC 2011: “innovation : interaction : imagination”. Proceedings of the International Computer Music Conference (Huddersfield, UK: Centre for Research in New Music (CeReNeM) at the University of Huddersfield, 31 July – 5 August 2011).

Ouzounian, Gascia, R. Benjamin Knapp, Eric Lyon and R. Luke DuBois. “To be Inside Someone Else's Dream: On ‘Music for Sleeping & Waking Minds.’” NIME 2012. Proceedings of the 12th Conference on New Interfaces for Musical Expression (Ann Arbor MI, USA: University of Michigan at Ann Arbor, 21–23 May 2012). http://www.eecs.umich.edu/nime2012
