A Very Fractal Cat

Of Cats, performers, composers and programmers

This article describes the evolution of a series of pieces for a classically trained pianist, a weighted-key piano controller, several pedals and a computer running a custom SuperCollider program and open source software. It traces the different versions of the piece through time, and the motivation behind each change, rather than focusing on the technical underpinnings of the environment used (for more on the technical side of the piece, consult my article “CatMaster and A Very Fractal Cat, a piece and its software”).

NB: The audio examples in this article are mono versions of original 3rd-order Ambisonics streams decoded through a UHJ convolution-based decoder.

A Very Long Time Ago… In this Galaxy

When I was a kid, my parents asked me which instrument I would like to learn to play, and my answer was: the piano (I think they hoped for a smaller guitar…). I started studying piano at around age nine and when it looked like I really liked it, they bought me and the whole family a piano. I learned, and for a long time played, pieces from the classical repertoire and some jazz, studied in a private conservatory and then in the National Conservatory (in Buenos Aires, Argentina). At the same time I branched into studying Electronic Engineering, and merged both fields by building my own analogue synthesizers and studio, exploring sound and composing music with electronics, and later using computers and software for the same purpose, all the while slowly trading the beloved ivory keys for a more common qwerty layout and alternative controllers. I kept my fingers warm and ready until around 1990 or so, and then slowly stopped playing.

So it should not be a surprise that for a long time I had been contemplating a return to the piano keyboard, coupled with real-time control through software and computers.

Confluence

There were several elements that were key to starting work on a series of pieces for piano keyboard and computer in 2008. I had bought an 88‑note weighted keyboard stage piano with the intention of retraining my fingers a bit. It turned out that the on-board piano sound font was not of good enough quality to use in a “serious” performance. The appearance of LinuxSampler (a gigasample playback application for Linux) and the ever increasing power of computers (including laptops) made it possible to have high quality sample playback and a large enough number of voices for a reasonable multiple piano simulation (of course nothing compares to the real thing!).

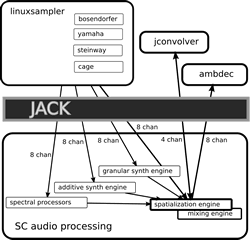

I had also started to use SuperCollider (McCartney et al. 1996–2011), which included all the elements I needed in one very complex and expressive text-based computer language. SuperCollider could manage input / output, sound synthesis and processing, and a GUI, all from the same language. Several other open source packages were also used, including Jconvolver for convolution reverberation and AmbDec for Ambisonics decoding (Adriaensen 2006). My operating system of choice (Linux) together with Jack (a low-latency sound server, see Davis et al.) enabled me to plug all these components together seamlessly.

Goals

The goal was to return to virtuoso playing by using a weighted key piano controller that, coupled with a computer and virtual pianos, would create an augmented instrument that reacted to the performer’s gestures with a mixture of predictable and unpredictable behaviours.

I did not want to create a completely notated piano piece, but rather an interactive environment that would guide the performer through a pre-composed form with different reactive behaviours, and with elements of free improvisation riding on top of the overall form. Many others have created augmented instruments using pianos and algorithms, from completely notated pieces (Risset 1991; Risset and Van Duyne 1996) to improvised environments using non-traditional controllers to drive pianos (Schloss and Jaffe 1993).

PadMaster and the Radio Drum

Between 1994 and 1999 I had worked on creating a similar environment using Max Mathews’ Radio Drum as a 3D controller and my own software (PadMaster), which controlled external synthesizers through MIDI. Computers were not that powerful at the time, and the NeXT workstation I was using could barely play one or two stereo sounds at the same time as it was controlling MIDI synthesizers and communicating with the Radio Drum. At the time, I wanted to include note-generating algorithms in the program, but I never got to that stage.

PadMaster used short, predefined MIDI sequences and sound files that could be triggered and controlled in several ways. The custom sounds programmed in the external synthesizers created the other half of the pieces composed for this system. See Espresso Machine II and House of Mirrors for pieces written and performed using this system (also see Lopez-Lezcano 1995, 1996).

Sounds

The piece is almost completely based on piano sounds rendered by LinuxSampler from Gigasampler format samples and further processed and transformed by SuperCollider instruments. Initial work on the piece started with two pianos, a Steinway and a Bösendorfer, and later added a Yamaha and some notes of a Cage prepared piano piece. The software can also control a Disklavier or similar MIDI-controlled acoustic piano.

Algorithms

My approach to the development of the piano controller pieces and the supporting software has been completely pragmatic. I initially created a very simple skeleton SuperCollider program that would process MIDI events generated by the piano controller, pass them on to the virtual pianos and trigger responses in the form of new MIDI events (individual notes, phrases and / or textures) generated by different algorithms.

The development of the different algorithms that are used throughout the piece was the result of a “write software, play, listen and change” cycle. I wrote code and tested it immediately by playing so I could hear how the algorithm would react to my playing and how different styles of playing could extract musically interesting behaviours from the code. My ears and my musical intuition closed the loop of code writing and musical creation. Sections of the pieces slowly converged as code was developed and piano techniques were tested to create musical content. Code writing and piano gestures closely interacted to develop the pieces.

As with PadMaster and its pieces before, I was the programmer, the composer and the performer.

I would also like to mention that I approached complexity through simplicity. None of the algorithms are particularly involved and there is not much (if any) “intelligence” in the program, but there are many algorithms and they can be combined and layered at will during the performance. It is through this combination and layering process that very complex textures can be created and transformed during the performance.

Starting with Markov

The first important addition to the basic program was code that extracted information from incoming MIDI events and trained Markov chains on the fly. Four parameters were stored: intervals between successive notes, rhythm, duration and note velocity information. The last three were not stored as is, but were quantized to a set of hand-chosen values before training the Markov chains (in the case of rhythm and duration this forced a certain rhythmic feel to the piece that is, to a degree, independent of my playing).
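As a rough illustration of the idea (not the SuperCollider code used in the piece), the on-the-fly training could be sketched in Python as follows. The first-order chain, the `VELOCITY_GRID` values and all function and class names are assumptions made for this example; the text above only states that values were quantized to hand-chosen sets before training.

```python
import random
from collections import defaultdict

# Hypothetical quantization grid for velocities; the actual hand-chosen
# values used in the piece are not given in the article.
VELOCITY_GRID = [32, 64, 96, 127]


def quantize(value, grid):
    """Snap a value to the nearest entry of a hand-chosen grid."""
    return min(grid, key=lambda g: abs(g - value))


class MarkovChain:
    """First-order chain (an assumption) trained one observation at a time."""

    def __init__(self):
        self.counts = defaultdict(lambda: defaultdict(int))
        self.previous = None

    def train(self, state):
        # Count the transition from the previously seen state to this one.
        if self.previous is not None:
            self.counts[self.previous][state] += 1
        self.previous = state

    def next_state(self, current, rng=random):
        followers = self.counts.get(current)
        if not followers:
            return None  # nothing learned yet for this state
        states = list(followers)
        weights = [followers[s] for s in states]
        return rng.choices(states, weights=weights)[0]


def train_on_notes(pitches, velocities):
    """Train an interval chain and a quantized-velocity chain."""
    intervals, vels = MarkovChain(), MarkovChain()
    for prev, cur in zip(pitches, pitches[1:]):
        intervals.train(cur - prev)
    for v in velocities:
        vels.train(quantize(v, VELOCITY_GRID))
    return intervals, vels
```

Rhythm and duration would be handled the same way as velocity here: quantize first, then train a separate chain.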

The following short fragment illustrates notes and phrases triggering algorithms that extract information from the Markov chain (this segment is captured after already playing for a little while and training the chains):

Control Strategies

In defining how to control the program I used an approach that minimized taking my hands off the keyboard while performing. The main controls are the keys themselves, the modulation wheels and a set of pedals, and the performance does not have elements that visually detract from a focus on the performer.

The overall form of the piece was defined by manually stepping through a series of “scenes” during the performance. Each scene encapsulates a set of parameters and behaviours that define the response of the program to events coming from the piano controller, pedals and other peripherals. It also suggests to the performer a particular set of gestures and playing techniques for that section of the piece. I used the two lowest keys of the keyboard as silent triggers that stepped up and down through the list of scenes, dynamically changing the response of the program to my playing.
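The scene mechanism can be pictured with a small Python sketch. It is only an illustration: the class names, the choice of which of the two lowest keys steps up or down, and the clamping at both ends of the list are assumptions, not details taken from the actual program.

```python
from dataclasses import dataclass, field

LOWEST_KEY = 21  # MIDI note number of A0, the lowest key of an 88-key keyboard


@dataclass
class Scene:
    description: str                             # one-line reminder for the performer
    params: dict = field(default_factory=dict)   # behaviour settings (hypothetical)


class SceneList:
    def __init__(self, scenes):
        self.scenes = list(scenes)
        self.index = 0

    @property
    def current(self):
        return self.scenes[self.index]

    def handle_note_on(self, note):
        """Return True if the note was consumed as a silent scene trigger."""
        if note == LOWEST_KEY:          # assumed: lowest key steps back
            self.index = max(self.index - 1, 0)
            return True
        if note == LOWEST_KEY + 1:      # assumed: next key steps forward
            self.index = min(self.index + 1, len(self.scenes) - 1)
            return True
        return False                    # ordinary note, pass it on
```

A caller would forward a note to the pianos and algorithms only when `handle_note_on` returns `False`, which keeps the trigger keys silent.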

Playing notes directly triggered algorithms that kept playing on their own; which algorithm was triggered, and for how long it ran, depended on other controls and on the behaviour defined in the current scene. Algorithms could overlap, and changing scenes did not affect algorithms that were already playing.

In addition to the regular sustain pedal which was sent directly to all pianos, I used a second “algorithm control” pedal that stopped all running algorithms when pressed. Subtle control of the timing of playing and pedalling could be used to create all sorts of phrases and textures, including abrupt transitions between sections of the piece.

The modulation wheel of the piano controller was used to define the length of the algorithm runs, and a third continuous control pedal defined the relative volume of the notes I was playing versus the notes the algorithms were playing (this control is still available but I rarely use it in current performances).

The pitch bend wheel was also used: it changed the pitch upwards in one of the two main pianos, and downwards by the same amount in the other (but was not routed to the third and fourth pianos), so that it was possible to bend the pianos, going from subtle beatings to microtonal playing during the performance of sections of the piece.
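A minimal sketch of this mirrored routing, assuming standard 14-bit MIDI pitch bend values (0 to 16383, centred on 8192); the function name and the clamping are illustrative choices, not taken from the program:

```python
CENTER = 8192      # 14-bit MIDI pitch bend: no bend
MAX_BEND = 16383   # largest 14-bit value


def mirrored_bend(wheel_value):
    """Return (bend for first piano, bend for second piano).

    The first piano follows the wheel, the second is bent by the same
    amount in the opposite direction.
    """
    offset = wheel_value - CENTER
    first = min(max(CENTER + offset, 0), MAX_BEND)
    second = min(max(CENTER - offset, 0), MAX_BEND)
    return first, second
```

Small wheel movements detune the two pianos slightly against each other (beating), while larger movements pull them far enough apart for microtonal playing.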

A USB MIDI fader controller was added, and its faders controlled the relative levels of the stage and spatialized pianos and scaled the velocities of the notes being sent to the Disklavier. This was the only non-keyboard oriented interface with the performer, and while I have to reach for it from the keyboard, it was deemed necessary and reasonable as it is not used very often during the performance.

Granular Synthesis

The sound of all the pianos was also being recorded by instruments in SuperCollider, and the recording was used as the source material for a granular synthesis engine that created additional sound textures related to the immediate sonic past of the piece. The granular synthesis voices were dynamically panned around the audience.

Tempo Control

All the algorithmic elements of the piece were tied to a common clock and parameters were added to the scenes that enabled the basic tempo of the playback to be changed either abruptly or through timed transitions.
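A timed transition could be as simple as a linear ramp between two tempos. The sketch below assumes that shape (the article does not say which curve is used) and uses hypothetical parameter names; an abrupt change is the special case of a zero-length transition.

```python
def tempo_at(elapsed, start_bpm, target_bpm, transition_seconds):
    """Tempo in BPM after `elapsed` seconds of a scene's tempo transition."""
    if transition_seconds <= 0 or elapsed >= transition_seconds:
        return target_bpm               # abrupt change, or ramp finished
    if elapsed <= 0:
        return start_bpm                # ramp not started yet
    fraction = elapsed / transition_seconds
    return start_bpm + (target_bpm - start_bpm) * fraction
```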

Feedback

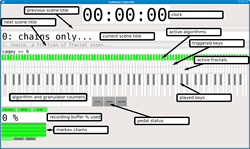

Finally, a GUI was created to give feedback to the performer on the current state of the program.

A running clock keeps track of time (in minutes and seconds) and is started when the first MIDI event is received by the program. Below it there are three text fields that show brief text descriptions of the previous scene (greyed out), the currently active scene, and the next scene (again greyed out). This is so I know where I am in the piece and what is coming up.

A piano keyboard shows the state of all keys in the controller, and three matching rows of indicators above it show: which keys have algorithms running and how many of them; the activity of the keys that are being triggered by algorithms (as opposed to the keys that the performer has played); and the keys that have active fractal melody algorithms running and how many (this last row is only present in later versions of the program, see below). Several other indicators show the status of the pedals, and various counters and gauges show other internal parameters of the program.

The First Performance

With these elements I performed Cat Walk (the original title of the piece) for the first time in late 2008 (CCRMA Fall Concert, November 2008). The program notes talked about a cat that enjoys jumping and playing on the keyboard of a piano (that is also why the control software is called “CatMaster”).

This first iteration of the program used the information stored in the Markov chains to generate short phrases each time I played a note, alternating these with randomly selected predefined phrases and rhythmic patterns. The responses from the program to my playing were modified echoes of my previous playing.

The resulting MIDI event stream was driving three virtual pianos spatialized dynamically around the audience by using simple amplitude panning, and also controlled a Disklavier acoustic piano.

After that performance a flurry of changes in the software enhanced its capabilities and added to the palette of sounds and behaviours available to the performer. The most important was the addition of another main algorithm and a change in the behaviour of the algorithm control pedal.

One More Algorithm and Many More Sounds

Fractal melodies based on Rick Taube’s example code in his Notes from the Metalevel book were incorporated into the program (the multi-layered melodies are inspired by the Sierpinski Triangle fractal). Notes triggered superimposed melodies with different tempos, with the basic melody derived from previously played pitch intervals extracted from the intervals Markov chain. To control them, I changed the functionality of the algorithm pedal. Fractal melodies were only triggered when the algorithm pedal was “down”. When it was “up” all the other algorithms would get triggered. And the down transition of the algorithm pedal was used to stop all running algorithms in the program.
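The self-similar layering can be sketched in a few lines of Python. This is a loose illustration in the spirit of a Sierpinski-style melody, not Taube's code nor the code in the piece: the octave transposition of each nested layer, the way durations are divided, and the function name are all assumptions.

```python
def fractal_melody(base_key, intervals, duration, depth, start=0.0):
    """Return (start_time, duration, midi_key) events sorted by start time.

    Each note of the melody also spawns a faster copy of the whole melody,
    transposed to that note one octave up (assumed), until `depth` layers
    have been generated.
    """
    events = []
    n = len(intervals)
    for i, interval in enumerate(intervals):
        key = base_key + interval
        onset = start + i * duration
        events.append((onset, duration, key))
        if depth > 1:
            # The nested melody fits exactly inside its parent note.
            events.extend(
                fractal_melody(key + 12, intervals, duration / n, depth - 1, onset)
            )
    return sorted(events)
```

With three intervals and two layers this yields 3 slow notes plus 9 fast ones, so a single key press unfolds into superimposed melodies at different tempos. In the piece the interval list would come from the intervals Markov chain rather than being fixed.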

This very simple change greatly enhanced control. One pedal would stop all algorithms and also control which flavour of algorithm was triggered when I played keys on the keyboard. New pedalling alternatives led to more materials and behaviours for the piece.

The following example starts with two one-note triggers, each followed by a complete fractal melody. Then fractal superimposed melodies interrupted by the algorithm control pedal, followed by a more complex example that mixes Markov segments with fractal melodies (note that near the end of the segment sine wave components are being added to the fractal melodies as described in the following paragraph).

Sine Waves

A sine wave texture generator was also added to the fractal melody algorithm; it accentuated high harmonics of the notes being played and added pitch bend gestures at the end of the notes. The additional voices were also spatialized around the audience. Parameters in each scene of the piece controlled whether these sine waves were added.

Spectral Processing

All pianos were also passed through a set of spectral processors that would do bin scrambling, shifting, and conformal mapping of the spectrum. The pitch bend wheel was also used to control some of the spectral processing parameters together with pitch bend, and was used to dramatically alter the sound of the processed pianos during the performance.

The following example starts with clusters of pitch bent bass notes that are manually transitioned into the spectrally processed audio. A short segment follows with the pitch bend wheel being used to control spectral processing parameters (in addition to pitch bend).

The fader bank controller could now change the mix of all sound components of the piece (in short: stage pianos, spatialized pianos, granular synthesis engine, additive synthesis engine and spectral processors).

Using Ambisonics

The spatialization technique used was also changed to Ambisonics (using 3rd-order encoding and decoding) to make it more flexible and not tied to a particular arrangement of speakers as before.

The palette of elements available to the performer had been substantially enhanced, and the name of the piece was changed to A Very Fractal Cat (it was by now very different from the first performance of the piece). It was played for the first time in one of the evening concerts of the LAC2009 Conference (Linux Audio Conference 2009) in Parma, Italy.

The evolution of the piece in large part is a long process of adding new sonic or behavioural patterns to the program, thus expanding the palette available to the performer, and then exploring these new capabilities and expanding the piece as a result.

The Solo Pedal

The program kept evolving after that and subsequent performances. Another pedal (the “solo” pedal) was added. When pressed, algorithms would not be triggered at all by note events coming from the piano controller, so it would be possible to play solo passages with or without algorithms playing in the background.

In short, the performance was now controlled through the keyboard (with its modulation and pitch bend wheels), four pedals and the fader bank controller.
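The interplay between the algorithm pedal and the solo pedal, as described in the text, can be summarized in a small state machine. The class, method and label names below are hypothetical, and "scene" simply stands for whichever non-fractal algorithm the current scene selects.

```python
class PedalRouter:
    """Decides what a played note triggers, given the pedal states."""

    def __init__(self):
        self.algorithm_pedal_down = False
        self.solo_pedal_down = False
        self.running = []  # (family, note) for algorithms currently playing

    def set_algorithm_pedal(self, down):
        if down and not self.algorithm_pedal_down:
            self.running.clear()  # the down transition stops everything
        self.algorithm_pedal_down = down

    def set_solo_pedal(self, down):
        # Running algorithms keep playing; only new triggers are blocked.
        self.solo_pedal_down = down

    def note_on(self, note):
        """Return the algorithm family started by this note, or None."""
        if self.solo_pedal_down:
            return None
        family = "fractal" if self.algorithm_pedal_down else "scene"
        self.running.append((family, note))
        return family
```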

Another Algorithm

Another algorithm was added later, and the piece was renamed to A Very Fractal Cat, Somewhat T[h]rilled as a result (premièred in the 2010 CCRMA Summer Concert). Different styles of trills could be triggered instead of the Markov-based algorithms and that is currently the core of the final section of the piece (which algorithm is triggered by the performer is controlled through configuration of the scenes that make up the piece). The length of the trills is controlled by the modulation wheel of the keyboard controller.
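As a hedged sketch of how a modulation wheel value might set trill length: the linear mapping, the `max_notes` limit and the simple two-note alternation below are assumptions for illustration, since the article does not describe the actual trill styles in detail.

```python
def trill(base_key, mod_wheel, interval=1, max_notes=32):
    """Alternate base_key and base_key + interval.

    `mod_wheel` is a MIDI controller value (0..127); higher values give
    longer trills, from 2 notes up to `max_notes`.
    """
    mod_wheel = min(max(mod_wheel, 0), 127)
    count = 2 + round(mod_wheel / 127 * (max_notes - 2))
    return [base_key + (interval if i % 2 else 0) for i in range(count)]
```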

The following example starts with short trills. The length is later changed so that they are longer and finally they are superimposed to create textures (as in the real performance of the piece — almost none of the elements of the sonic and algorithmic palette described so far are heard isolated during the performance).

Current State

The software is currently around 2700 lines of SuperCollider code and manages all the event, audio and GUI processing for the piece, except for convolution reverberation, ambisonics decoding and actually playing the piano gigasample fonts (all of which are managed through external programs controlled from the SuperCollider main program).

A typical performance of the piece travels back and forth through 15 different scenes and can last between 15 and 20 minutes. The audio output of the computer is a 3rd-order Ambisonics stream that can be decoded to any reasonable speaker configuration. The smallest recommended configuration is a 5.1-compatible setup; most of the time the piece has been performed through 8 or more speakers.

More details, and a recording of the piece made in 2009, are available on my website.

Future Evolution

Other Performers

One aspect of this piece that is missing is a way to enable it to be played by other performers. Right now the gestures, the intervallic materials to be played and the general feel of each section are in my head, so to speak. I would like to eventually open up the piece to other performers, but it is not yet clear to me what would be the best way to achieve this.

The piece has never been set in stone and has been evolving as the program grows and/or is modified to create new behaviours or change old ones. No two performances are the same, although the general form of the piece is maintained from performance to performance.

One approach would be to do nothing: just write basic instructions on how to bring up the system and let the performer “discover” a piece (not necessarily the same one I play) in the available materials and behaviours embedded into the program. What is discovered would be influenced by the time the performer is willing to invest playing with the software before the concert performance. This approach would let the piece evolve in different directions, which could be good.

Another approach would be to add to the program more guidance for the performer. This would include more detailed text associated with each scene (currently each scene displays a single line of text that is meant to remind me of what I need to do), and most likely a score view that would show the performer either specific notes to play, or suggest intervallic, rhythmic or harmonic materials to be played in a particular scene. Although it would be next to impossible to capture enough information to be able to perfectly replicate the piece as I play it, these additions would be enough to match the spirit of the piece without freezing it completely (which would go against the original goals of the project).

An added complexity to opening up the piece is that I would also have to adapt to more “exotic” operating systems (OSX on a Mac comes to mind) to widen the potential performer audience of the piece. SuperCollider is cross-platform, but the other programs would have to be replaced by platform-specific and functionally similar software. Yet another problem is the piano sound fonts themselves, which I would not be able to distribute freely.

A Two-way Street

The software as it stands today is completely reactive. If I stop playing notes, all algorithms eventually stop generating notes and nothing else happens. I would like to write additional software so that the program can start algorithms by itself, acting as another performer in duo with the human performer (as opposed to the current behaviour in which algorithms are only triggered by notes played on the keyboard controller).

Disklavier as a Controller

Currently the only support for Disklavier MIDI-controlled pianos is sending them algorithmically generated materials (Disklaviers have inherent delays that are difficult, although not impossible, to compensate for). I would like to add software to be able to use a Disklavier as a controller in addition to, or replacing, the weighted-key MIDI controller. That will involve better management of the delays as well as establishing dynamic “no play” zones around the notes the performer is playing on the Disklavier so that algorithms have less chance of pressing notes the performer is about to play.
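Since this feature does not exist yet, the following is only one possible reading of a dynamic “no play” zone: an algorithmic note is rejected if it falls within a few semitones of a key the performer touched recently. The function name, the `radius` and the `window` values are all hypothetical.

```python
def allowed(candidate_key, now, recent_notes, radius=2, window=1.0):
    """Check whether an algorithm may play `candidate_key` at time `now`.

    `recent_notes` is an iterable of (time, key) pairs played by the
    performer. A candidate is blocked when it lies within `radius`
    semitones of a key played during the last `window` seconds.
    """
    for played_at, key in recent_notes:
        if now - played_at <= window and abs(candidate_key - key) <= radius:
            return False
    return True
```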

Algorithms and Control

More work remains to be done with regard to algorithms, especially in terms of rewriting some of the code to be more modular and easier to expand (the program has undergone a number of rewrites while it was growing because the hands-on experimental nature of the development process did not always lead to orderly code).

Chord processing is one thing that is left to do. Chords are currently being detected but nothing is done with them; they are a potential trigger for a wider palette of responses from the program. More complicated gesture recognition would also be a worthwhile addition and another source of triggers that could be attached to expanded behaviour.

Thanks

I would like to extend my thanks to the thriving Linux audio developers community (and to the Linux community at large): without their contributions to an open source world this piece would not have been realized.

Bibliography

Adriaensen, Fons. AmbDec, an Ambisonics decoder. Software, 2006. http://kokkinizita.linuxaudio.org/ambisonics [Last accessed 24 March 2011]

_____. Jconvolver, a partitioned convolution engine. Software, 2006. http://kokkinizita.linuxaudio.org/ambisonics

Davis, Paul et al. JACK, an open source low-latency sound server. Software, 2001–06. http://jackaudio.org

Lopez-Lezcano, Fernando. “PadMaster, An Improvisation Environment for Real-time Performance.” Proceedings of the International Computer Music Conference (ICMC) 1995 (Banff AB, Canada: Banff Centre for the Arts, 1995). Available on the author’s website https://ccrma.stanford.edu/~nando/publications/padmaster_icmc1995.pdf

_____. “PadMaster: Banging on Algorithms with Alternative Controllers.” Proceedings of the International Computer Music Conference (ICMC) 1996 (China: Hong Kong University of Science and Technology, 1996). Available on the author’s website https://ccrma.stanford.edu/~nando/publications/padmaster_icmc1996.pdf

_____. “CatMaster and A Very Fractal Cat, A piece and its software.” Proceedings of the Sound and Music Computing Conference (SMC) 2010 (Barcelona: Universitat Pompeu Fabra, 21–24 July 2010). Available on the author’s website https://ccrma.stanford.edu/~nando/publications/catmaster_smc2010.pdf

McCartney, James et al. SuperCollider. Software, 1996–2011. http://supercollider.sourceforge.net

Risset, Jean-Claude. Three Etudes: Duet for One Pianist (1991).

Risset, Jean-Claude and Scott Van Duyne. “Real-Time Performance Interaction with a Computer-Controlled Acoustic Piano.” Computer Music Journal 20/1 (Spring 1996) “20th Anniversary Issue: The State of the Art”, pp. 62–75.

Schloss, Andrew and David Jaffe. “Intelligent Musical Instruments: The Future of Musical Performance or the Demise of the Performer?” Journal of New Music Research 22/3 (August 1993) “Man-Machine Interaction in Live Performance”, pp. 183–193.

Taube, Heinrich K. Notes from the Metalevel: An Introduction to Computer Composition. Utrecht: Routledge, 2004.

Various. LinuxSampler, an open source audio sampler. Software. http://www.linuxsampler.org
