In Vitro Oink

A Composition for Piano and Wii Remote

1 Introduction

In Vitro Oinking

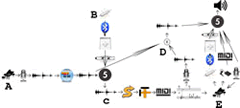

In Vitro Oink is a composition for piano, live processing, and fixed and semi-indeterminate electronics controlled by a Nintendo Wii Remote (Wii) and a MIDI foot pedal. The score is built from two distinct piano sounds: the striking of an individual tone (Tink) and a single knock on the piano (Tonk). These sounds are used to generate a semi-indeterminate electronic accompaniment, which also serves as the material from which the score is generated. The Wii enables the performer to influence the electronics by reporting the ancillary movements of the left arm. The MIDI foot pedal and the buttons of the Wii allow convenient, direct triggering of events. The entire composition and construction process is shown in Figure 1.

Figure 1 traces In Vitro Oink’s creation from beginning to performance. At A, a microphone records the piano to a sound file, and the composer manipulates the recorded sound files using IRIN (Caires 2010) to generate derivative sound files. At B, the Wii communicates over Bluetooth with DarwiinRemote, which in turn uses OSC to communicate with Max/MSP. This setup enables improvisations with the sound files from IRIN, creating another generation of sound files. At C, these improvisation sound files are analyzed with SPEAR (Klingbeil 2005) and then assembled in AC Toolbox (Berg 2010) into MIDI files, which are turned into music notation. At D, the piano part is recorded and convolved with the sound files produced at the end of stage A; this material forms the fixed electronic interludes used in the final piece. Finally, at E, the pianist plays the score while wearing the Wii, which again communicates over Bluetooth with DarwiinRemote and, via OSC, with Max/MSP. Simultaneously, the pianist triggers sound files with a foot pedal that communicates with Max/MSP over MIDI, and a microphone samples the piano, sending audio to Max/MSP for delay and granulation. The result is played through a pair of stereo loudspeakers, sounding simultaneously with the piano.

In Vitro Oink explores both indirect and direct links between the performer’s movements and the electronic accompaniment. The performer’s motions are used to influence a semi-indeterminate process that serves as an electronic accompaniment to the acoustic piano. This is accomplished by placing a Wii on the pianist’s left arm and using its accelerometer data to trigger windows that reveal unfolding audio streams. The intention is to provide an organic connection between the pianist’s movements and the electronic accompaniment while maintaining a simple performance interface. The goal of this interface is to free the performer from having to coordinate with the electronic part and to allow him to concentrate on playing the piano.

Terminology

All references to time scales in this paper follow Roads’ “Time Scales of Music” (Roads 2001, 5). The title In Vitro Oink metaphorically references the groundbreaking synthesis of pig flesh in a Dutch laboratory (Rogers 2009). During the three-week compositional process, the composer read reports of this scientific breakthrough, which served both as inspiration and as a mental model for the composition.

The widespread production of in vitro meat has been proposed (Langelaan 2009), and it serves as a context in which to consider the evolution of musical production from acoustic to electronic instruments. The terminology used in discussing this piece embraces the spirit of this evolution by pairing biological components with compositional ones. A more literal translation between the two domains is certainly possible; nevertheless, this metaphor has served as a powerful and effective means of communication between the composer, performer and audience.

At the lowest level, the structure of In Vitro Oink is based on recordings of a piano tone (Tink) and of knocking on a piano (Tonk). Just as stem cells differentiate into specialized cells, these acoustic sounds are used to synthesize and construct acoustic cells (subsequently called cells in this paper). These cells are arranged into streams of sound that act as a framework holding the cells together, much as muscle forms from its cells. These streams serve both as accompaniment in the final composition and as the base material for the score. Sound files of the streams are translated into notation via an FFT-based process. This piano material, like skin and tendons, encapsulates the muscle and links it to the bone. Short fixed-media interludes are constructed by crossing (convolving) the original cells with a recording of the piano part. These rigid, unchanging electronic parts serve as a bone-like scaffold that holds the other elements in place.

2 Compositional Process

2.1 Building Sonic Material

The score for In Vitro Oink is the result of a multi-stage compositional process. The first step was the construction of individual sonic cells for the granular accompaniment. These cells are used both for the improvisations that generated the score, described in section 2.2, and later for the semi-indeterminate accompaniment, described in section 3.1. This paper refers to a group of hand-placed grains as a cell. Each cell is constructed from either a single piano tone (Tink) or a single knock (Tonk) on the side of the piano, and the cells are organized into two streams according to the sound from which they derive. Each cell of granular material was created by hand using the IRIN program (Caires 2010). There are 152 cells in total.

2.1.1 Anatomy of a Cell

The cells range in length from 800 milliseconds to 9 seconds. They are composed of sonic grains, each with its own sonic signature. The grains are shaped by five parameters: the playback speed of the granulated sound file, the position of the read head, the stereo position, the windowing and the grain length. The composer set each parameter of each grain and then arranged the grains. The IRIN program allows either manual or algorithmic manipulation of these grain parameters, and it can also segment the various components of a group. From one cell to the next, the material evolves: some elements are retained while other parameters are altered.
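To make the anatomy of a cell concrete, the following Python sketch mixes a handful of hand-placed grains into a stereo buffer. It is not IRIN’s implementation: the `Grain` and `render_cell` names, the linear-interpolation read, the constant-power panning and the default Hann window are all assumptions chosen for illustration. Each grain carries the five parameters listed above (playback speed, read-head position, stereo position, windowing, grain length) plus an onset that places it inside the cell.

```python
import math
from collections.abc import Callable
from dataclasses import dataclass


def hann(n: int, length: int) -> float:
    """Hann window value at index n of a window of the given length."""
    if length <= 1:
        return 1.0
    return 0.5 - 0.5 * math.cos(2 * math.pi * n / (length - 1))


@dataclass
class Grain:
    """One hand-placed grain (hypothetical structure): onset plus five shaping parameters."""
    onset: int          # where the grain starts inside the cell, in samples
    speed: float        # playback speed of the granulated file (1.0 = original)
    read_pos: int       # read-head position in the source file, in samples
    pan: float          # stereo position: 0.0 = left, 1.0 = right
    length: int         # grain length, in samples
    window: Callable[[int, int], float] = hann  # windowing function


def render_cell(source: list[float], grains: list[Grain]) -> tuple[list[float], list[float]]:
    """Mix a group of hand-placed grains into a stereo buffer (one cell)."""
    total = max(g.onset + g.length for g in grains)
    left, right = [0.0] * total, [0.0] * total
    for g in grains:
        # Constant-power panning gains.
        gain_l = math.cos(g.pan * math.pi / 2)
        gain_r = math.sin(g.pan * math.pi / 2)
        for n in range(g.length):
            pos = g.read_pos + n * g.speed
            i = int(pos)
            if i + 1 >= len(source):
                break  # the read head ran past the end of the source file
            frac = pos - i
            # Linear interpolation between neighbouring source samples.
            sample = source[i] * (1 - frac) + source[i + 1] * frac
            sample *= g.window(n, g.length)
            left[g.onset + n] += sample * gain_l
            right[g.onset + n] += sample * gain_r
    return left, right
```

Deriving the next cell could then amount to copying the grain list and altering only some fields, which mirrors the retain-and-alter evolution described above.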

This process of building sounds from a series of small Microsonic events is indebted to Horacio Vaggione and Curtis Roads. In particular, Roads reports the following personal communication from Vaggione:

Considering the hand-crafted side, this is the way I worked on Schall (along with algorithmic generation and manipulation of sound materials): making a frame of 7 minutes and 30 seconds and filling it by “replacing” silence with objects, progressively enriching the texture by adding here and there different instances (copies as well as transformations of diverse order) of the same basic material. (Roads 2005, 295–309).

This approach of filling silence with the same basic material is explored on both the Microsonic and Sound Object levels of In Vitro Oink. To give the piece a musical trajectory, the evolution of material across cells underpins the thematic changes of the composition.

2.2 Extending Sonic Material: Computer-Assisted Translation

The piano score is the result of improvising with the cells, recording and editing the improvisations and then translating these edited files into notation. A Max/MSP patch was built and used to improvise with the cells as described in section 2.2.1. The improvisations that resulted from these sessions were recorded as audio files. In turn, these files were edited and sequenced in a DAW. This audio material was translated into notation via an FFT process.

Max/MSP provides a flexible environment in which to improvise and simultaneously adjust the patch in real time. As David Zicarelli notes of Max/MSP, “it is a game field that operates according to rules that cannot be invented by the player” (Zicarelli 2002, 45). This improvisatory environment, or “game field,” allowed for an extensive exploratory phase of programming during which the patch was continually refined. Many of its most successful features were later carried over into the final patch with which the pianist performs.

2.2.1 Improvisation Patch

The Wii acts as the interface to a Max/MSP patch that polls its accelerometer data. In this patch there are two streams of audio that are revealed only when a window is triggered. A window is triggered when the accelerometer data passes one of a series of thresholds. This patch was first developed and used as a compositional device and later adapted into the performance patch.
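A minimal sketch of the threshold logic follows, assuming each threshold behaves like an edge-triggered detector with hysteresis. The class and function names and the 0.8 re-arm ratio are hypothetical; the paper does not specify how the Max/MSP patch re-arms a trigger.

```python
class ThresholdTrigger:
    """Opens a window once when |value| rises past a threshold.

    The trigger re-arms only after |value| drops below rearm_ratio * threshold
    (hysteresis, an assumption), so one sustained gesture does not fire on
    every incoming reading.
    """

    def __init__(self, threshold: float, rearm_ratio: float = 0.8):
        self.threshold = threshold
        self.rearm_level = threshold * rearm_ratio
        self.armed = True

    def update(self, value: float) -> bool:
        """Feed one accelerometer reading; return True if a window should open."""
        magnitude = abs(value)
        if self.armed and magnitude >= self.threshold:
            self.armed = False
            return True
        if not self.armed and magnitude < self.rearm_level:
            self.armed = True
        return False


def fired(triggers: list[ThresholdTrigger], value: float) -> list[int]:
    """Indices of every threshold in a series that this reading has just passed."""
    return [i for i, t in enumerate(triggers) if t.update(value)]
```

Lowering a threshold lets gentler movements open windows, so the same gestures yield more windows and a denser texture.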

The Max/MSP patch moves through the two streams of granular material simultaneously. The two streams are segregated by lineage: cells based on the piano tone are called Tinks and cells based on knocking on the piano are called Tonks. Each stream contains 76 cells, grouped in clumps of eight to ten. As time passes, the improviser can move from one clump of cells to the next until reaching the end of the stream. Within each clump the cells are not played in linear sequence; instead, they are reordered using various indeterminate strategies implemented in Karlheinz Essl’s Real Time Composition Library, RTC (Essl 2010).

Each stream moves through its collection of cells with a different logic. The RTC collection includes an object called sel-princ, which provides different means of moving through data sets. The sel-princ help file states that it “Changes between the selection principles ALEA, SERIES and SEQUENCE (as defined by G.M. Koenig)” (Essl 2010). Alternating the selection principle from clump to clump can create audible patterns, while the particular order of cells remains a unique, semi-indeterminate sequence with each iteration.
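The stream logic can be sketched as follows: a stream is split into clumps, and each clump is reordered by one of the three selection principles named in the sel-princ help file. The generators below follow the common reading of Koenig’s principles, ALEA (random choice with repetition), SERIES (each element once in random order before any repeats) and SEQUENCE (stored order); they are not Essl’s RTC code, and the clump sizes and rotation order are hypothetical.

```python
import random


def make_clumps(cells: list, sizes: list[int]) -> list[list]:
    """Split a stream's cells into consecutive clumps of the given sizes."""
    clumps, start = [], 0
    for size in sizes:
        clumps.append(cells[start:start + size])
        start += size
    return clumps


def alea(items, rng):
    """ALEA: free random choice; immediate repetitions are allowed."""
    while True:
        yield rng.choice(items)


def series(items, rng):
    """SERIES: every item once in random order before any item repeats."""
    while True:
        pool = list(items)
        rng.shuffle(pool)
        yield from pool


def sequence(items, rng):
    """SEQUENCE: the items in their stored order, cycled (rng is unused)."""
    while True:
        yield from items


PRINCIPLES = {"ALEA": alea, "SERIES": series, "SEQUENCE": sequence}


def order_clump(clump: list, principle: str, count: int, rng: random.Random) -> list:
    """Draw `count` cells from one clump using the named selection principle."""
    gen = PRINCIPLES[principle](clump, rng)
    return [next(gen) for _ in range(count)]


# Usage: 76 Tink cells in eight clumps of nine or ten (hypothetical sizes),
# rotating the selection principle from clump to clump.
rng = random.Random(7)
tinks = [f"tink{i:02d}" for i in range(76)]
clumps = make_clumps(tinks, [10, 10, 10, 10, 9, 9, 9, 9])
rotation = ["SERIES", "ALEA", "SEQUENCE"]
orders = [order_clump(c, rotation[k % 3], len(c), rng) for k, c in enumerate(clumps)]
```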

While this automated process provides two continuous streams of audio, each can only be heard when an envelope is triggered by accelerating the Wii. The improvisation patch allows fixing or altering the trigger assignment from clump to clump. Each of the two streams is triggered by acceleration in a different plane. (Section 3.1 will address this in more detail.) These envelopes act as a metaphorical window which, when opened, reveals the activity of the stream. In order to control sonic density on the Meso timescale, the frequency of envelopes triggered on the Sound Object timescale is varied.
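The window metaphor can be illustrated by multiplying a continuously running stream by a gain envelope that is non-zero only after each trigger. In this sketch the half-sine window shape, the `gate_stream` name and the max-on-overlap rule are assumptions; the window types the patch actually uses are described in section 3.1.1. Meso-level density then follows from how many onsets are passed in per unit of time.

```python
import math


def gate_stream(stream: list[float], onsets: list[int], window_len: int,
                amplitude: float = 1.0) -> list[float]:
    """Reveal a continuously running stream only inside windows opened at the onsets.

    Each window is a half-sine of window_len samples; where windows overlap,
    the larger gain wins, so overlapping triggers never boost the stream.
    """
    gain = [0.0] * len(stream)
    for onset in onsets:
        for n in range(window_len):
            i = onset + n
            if 0 <= i < len(stream):
                w = amplitude * math.sin(math.pi * n / window_len)
                gain[i] = max(gain[i], w)
    return [g * s for g, s in zip(gain, stream)]
```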

To maximize the range of sounds, the envelope type, amplitude and length are adjusted. The improvisations produced a wide range of presets that would eventually populate the final performance patch.

The preliminary improvisations acted as an opportunity to explore various options with only a Wii controlling the two streams. The benefit of this approach is that the composer could explore electronic sounds in any æsthetic direction, knowing that the end result would be translated into notation for the piano. In this way, an organic connection was ensured.

2.2.2 Translation from Audio to Notation

Building the performance patch while improvising with the Wii generated many audio files. By selectively sequencing this material in a DAW, two improvisation sound files were created, one of Tinks and one of Tonks. These sounds were translated into notation using an FFT-based process implemented in two stages, which allowed different aspects of the sound files to be emphasized.

The first stage of translation used the analysis/synthesis package SPEAR (Klingbeil 2005). SPEAR was used to analyze the audio files and convert them into text files containing the sinusoidal partials and their intensities. Several parameters of this process influenced the final output.
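SPEAR’s analysis tracks partials across many frames, which is beyond a short example, but its starting point, finding spectral peaks in a windowed FFT frame, can be sketched. The function names and the naive DFT (used here only to stay dependency-free) are illustrative choices, not SPEAR’s code. The bin spacing `sr / n` is what ties the FFT size to resolution.

```python
import cmath
import math


def magnitude_spectrum(frame: list[float]) -> list[float]:
    """Naive DFT magnitudes for bins 0..N/2 (adequate for short illustrative frames)."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


def pick_partials(frame: list[float], sr: float, min_amp: float) -> list[tuple[float, float]]:
    """Return (frequency_hz, amplitude) for spectral peaks at or above min_amp."""
    n = len(frame)
    # Periodic Hann window to limit leakage between neighbouring bins.
    windowed = [x * (0.5 - 0.5 * math.cos(2 * math.pi * t / n)) for t, x in enumerate(frame)]
    mags = magnitude_spectrum(windowed)
    scale = 4.0 / n  # a bin-centred sinusoid of amplitude A then reads as A
    peaks = []
    for k in range(1, len(mags) - 1):
        if mags[k] > mags[k - 1] and mags[k] >= mags[k + 1]:
            amp = mags[k] * scale
            if amp >= min_amp:
                peaks.append((k * sr / n, amp))  # bin spacing is sr / n Hz
    return peaks
```

Halving `n` doubles the bin spacing, so closely spaced partials merge into a single peak sooner; this is one reason different FFT resolutions bring out different overtone content.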

The first important choice in translation was where to segment the audio file: grouping material that is similar in length or spectral distribution gives better translation results. The FFT resolution has a significant impact on the reported partials, and by combining several resolutions, different overtone series can be emphasized. SPEAR also allows the sound file to be thinned by partial intensity threshold or by partial length. These data-reduction strategies can highlight partials of a certain length or intensity. Working with the same sound to bring out two different features and then combining the results provides a distinctive form of compositional control. Finally, SPEAR enables selective manipulation of partials by hand so that a sound can be altered in novel ways.
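The thinning and recombination strategies can be expressed as simple filters over tracked partials. The `Partial` class, its breakpoint layout and the threshold values below are hypothetical and do not reproduce SPEAR’s file format or menu commands; the point is that two differently thinned views of the same sound can be merged into one data set.

```python
from dataclasses import dataclass


@dataclass
class Partial:
    """A tracked sinusoidal partial as (time_s, freq_hz, amp) breakpoints."""
    points: list[tuple[float, float, float]]

    @property
    def duration(self) -> float:
        return self.points[-1][0] - self.points[0][0]

    @property
    def peak_amp(self) -> float:
        return max(p[2] for p in self.points)


def thin(partials: list[Partial], min_amp: float = 0.0, min_duration: float = 0.0) -> list[Partial]:
    """Keep only partials whose peak amplitude and length both reach the thresholds."""
    return [p for p in partials if p.peak_amp >= min_amp and p.duration >= min_duration]


def combine(*sets: list[Partial]) -> list[Partial]:
    """Merge partial sets taken from the same sound, dropping exact duplicates."""
    merged: list[Partial] = []
    for s in sets:
        for p in s:
            if p not in merged:
                merged.append(p)
    return sorted(merged, key=lambda p: p.points[0][0])
```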

During the composition of In Vitro Oink, many text files containing partial data were created with SPEAR. These spectral text files were then imported into The Algorithmic Composers Toolbox (AC Toolbox), which was used to reassemble the data into continuous streams of MIDI notes. Its set of filters and its ability to manipulate data sets according to a rule or set of rules enabled further refinement of the data.
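The conversion from partial data to MIDI notes can be sketched with the standard equal-tempered mapping, MIDI note = 69 + 12 * log2(f / 440). How AC Toolbox actually performed this step, and how amplitude was mapped to velocity, is not stated in the paper; the dB-scaled velocity curve, the tuple layout and the piano-range filter (MIDI 21 to 108) are assumptions.

```python
import math


def freq_to_midi(freq_hz: float) -> int:
    """Nearest equal-tempered MIDI note (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def amp_to_velocity(amp: float, floor_db: float = -60.0) -> int:
    """Map a linear amplitude (up to 1.0) to MIDI velocity 1..127 on a dB scale."""
    db = 20 * math.log10(max(amp, 1e-12))
    norm = min(max((db - floor_db) / -floor_db, 0.0), 1.0)
    return max(1, round(norm * 127))


def partials_to_notes(partials: list[tuple[float, float, float, float]],
                      low: int = 21, high: int = 108) -> list[tuple[float, float, int, int]]:
    """Turn (start_s, end_s, freq_hz, amp) partials into (start, duration, note, velocity).

    Notes outside the piano's range (MIDI 21-108 by default) are dropped.
    """
    notes = []
    for start, end, freq, amp in partials:
        note = freq_to_midi(freq)
        if low <= note <= high:
            notes.append((start, end - start, note, amp_to_velocity(amp)))
    return sorted(notes)
```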

After the various datasets were imported into AC Toolbox and altered to emphasize or de-emphasize particular components, the two streams were reconstituted. These two streams were then exported, notated and edited by the composer to make them playable on the piano. Once shaped, the streams were interleaved, switching from one to the other every one to ten measures. This alternation between streams happens on the Meso timescale. The interleaving explores how the different materials can achieve maximum blending, juxtaposition or something in between. This was also the point at which knocking on the piano was introduced into the score, offering a further means of distinguishing the two streams when compositionally desirable.
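One possible reading of the interleaving step is sketched below: both streams run on a shared measure grid, and the output switches between them after a random run of one to ten measures. The paper does not say whether the switch points were chosen by hand or at random, so the random run lengths and the `interleave` helper are assumptions.

```python
import random


def interleave(stream_a: list, stream_b: list, rng: random.Random,
               min_run: int = 1, max_run: int = 10) -> list[tuple[int, object]]:
    """Alternate between two measure lists on a shared timeline.

    Returns (stream_index, measure) pairs. Both streams advance together and only
    the selected one is kept, so the result is as long as the shorter stream.
    """
    streams = (stream_a, stream_b)
    length = min(len(stream_a), len(stream_b))
    result, pos, current = [], 0, 0
    while pos < length:
        run = rng.randint(min_run, max_run)  # measures before the next switch
        for i in range(pos, min(pos + run, length)):
            result.append((current, streams[current][i]))
        pos += run
        current = 1 - current
    return result
```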

2.3 Finalization

In Vitro Oink was written in roughly three and a half weeks. A concert tour had already been booked when two commissioned pieces failed to materialize, so In Vitro Oink was requested. The first concert of this multi-concert tour was only weeks away, and the performer needed time to learn the notes and become comfortable performing with the Wii. The composer needed time to finish the Max/MSP patch and to create the four fixed electronic pillars. The roughly shaped score and a basic performance patch for the Wii were therefore given to the performer.

2.3.1 Score Finalization

Because the score was given to the performer before there had been time to finalize it, there was room for adjustment. The performer was allowed ten days in which to suggest and make any changes he wished; after that, the score became fixed. This editing window gave the pianist a rare opportunity to adjust the score from a performer’s perspective, albeit on a short schedule. Adding to the challenge, the performer and composer were located in Los Angeles and Palo Alto respectively. With limited proximity and little time for in-person rehearsal, the process demanded flexibility from both parties. A series of emails, phone conversations and rehearsal recordings became a crucible of rapid refinement.

2.3.2 Electronic Finalization: Pillars

Finalizing the electronic material for In Vitro Oink consisted of three tasks: creating the pillars, balancing the acoustic and electronic elements, and solidifying the technical aspects of the patch so that it could be taken on the road and comfortably operated by the performer, a Max/MSP novice.

To maximize the organic connection within the limited time available, material from the cells and the piano part was recycled to create the pillars. The electronic pillars were created by convolving the adjacent piano and electronic materials to form fixed interludes. Individual phrases were extracted from the piano material surrounding each of the four pillars, and these phrases were convolved with the cells present in that section. The resulting lines were then layered one atop the other to create a large mass of sound. This convolution reunites the piano sounds with the cells from which they are derived: by crossing the spectra, the commonalities between the generations emerge.
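The pillar construction can be sketched as convolving every piano phrase with every cell of its section, normalizing each result and summing the layers. The pairing of each phrase with each cell, the normalization and the function names are assumptions; a practical implementation would use FFT-based convolution on full-length sound files rather than the direct form shown here.

```python
def convolve(x: list[float], h: list[float]) -> list[float]:
    """Direct time-domain convolution of two sample lists."""
    out = [0.0] * (len(x) + len(h) - 1)
    for i, xv in enumerate(x):
        for j, hv in enumerate(h):
            out[i + j] += xv * hv
    return out


def normalize(signal: list[float], peak: float = 1.0) -> list[float]:
    """Scale a signal so its largest absolute sample equals `peak`."""
    m = max((abs(v) for v in signal), default=0.0)
    return list(signal) if m == 0 else [v * peak / m for v in signal]


def build_pillar(phrases: list[list[float]], cells: list[list[float]]) -> list[float]:
    """Convolve every phrase with every cell of its section and stack the layers."""
    layers = [normalize(convolve(p, c)) for p in phrases for c in cells]
    mass = [0.0] * max(len(layer) for layer in layers)
    for layer in layers:
        for i, v in enumerate(layer):
            mass[i] += v
    return normalize(mass)
```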

This use of adjacent material creates a multilayered sound file whose density and reordering obscure direct repetition. At the same time, the reordering of existing material allows brief moments of recognition for the listener: gestural fragments of repeated material surface in the listener’s consciousness while the overall sound profile changes.

The pillars function as fixed sonic entities that the performer can play towards and move away from. In rehearsal, the last section was consistently too slow. The performer reported that it felt too lengthy and did not function as a logical close. An analysis of the tempi surrounding this pillar revealed that the material of the original version of the last pillar was causing the performer to slow down while approaching and leaving it. The last pillar was therefore recomposed to counteract this slowing tendency. By shortening its length, increasing its articulation density and widening its spectral range and palette, the updated pillar now serves as a springboard to the end of the composition. This example illustrates how fixed material can function as an anchor that helps the performer shape the piece.

2.3.3 Electronic Finalization: Balance

The other aspect of finalizing the electronics was achieving a balance between the various materials of the composition. In Vitro Oink is concerned with crossing the sonic worlds of the acoustic piano and of electronic sounds presented over loudspeakers. In the final weeks of preparation, several in-person rehearsals were scheduled, and the balance between the electronics and the acoustic piano proved a challenging issue. Even after levels in the performance patch and dynamics in the score had been adjusted, the result was still not satisfying. A discussion of the other pieces on the concert program revealed that a microphone would already be in place for the performer, which opened the opportunity to develop another layer of interaction between the electronics and the acoustic piano.

The microphone is used to sample the piano live and to reinforce the acoustic piano sound. The sampled piano is sent through fifteen variable delay lines, and this delayed sound is granulated on both the Micro and Sound Object timescales. Having the computer randomly choose the playback direction and speed of the granulation further alters the piano playback. The windowing and playback are controlled in the same fashion as the streams of electronic cells.
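The delay-and-granulate chain can be approximated by a circular buffer read at several delay taps, plus a grain reader whose speed and direction are chosen at random. The class and function names, the tap layout, the nearest-sample read and the candidate speeds (0.5, 1 and 2) are hypothetical choices for illustration; the piece itself uses fifteen variable delay lines in Max/MSP.

```python
import math
import random


class DelayBank:
    """One circular buffer read by several delay taps (fifteen in the piece)."""

    def __init__(self, max_delay: int, delays: list[int]):
        self.buf = [0.0] * max_delay
        self.delays = list(delays)  # tap delays in samples, each < max_delay; may change live
        self.write = 0

    def process(self, sample: float) -> list[float]:
        """Write one input sample and return the current output of every tap."""
        self.buf[self.write] = sample
        outs = [self.buf[(self.write - d) % len(self.buf)] for d in self.delays]
        self.write = (self.write + 1) % len(self.buf)
        return outs


def read_grain(source: list[float], start: int, length: int, speed: float) -> list[float]:
    """Nearest-sample read of `length` samples; a negative speed plays backwards."""
    span = (length - 1) * abs(speed)
    origin = start + span if speed < 0 else start
    out = []
    for n in range(length):
        i = math.floor(origin + n * speed)
        out.append(source[i] if 0 <= i < len(source) else 0.0)
    return out


def random_grain(source: list[float], start: int, length: int, rng: random.Random,
                 speeds: tuple[float, ...] = (0.5, 1.0, 2.0)) -> tuple[float, list[float]]:
    """Granulate with a randomly chosen speed and direction."""
    speed = rng.choice(speeds) * rng.choice((1, -1))
    return speed, read_grain(source, start, length, speed)
```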

The piano grains are linked to different Wii trigger thresholds than the electronic granular streams. By opposing the directions and thresholds of the live piano granulation and the electronic granulation, independence of material is built into the control structure. To allow the live piano and electronic streams to be unified, the thresholds controlling the piano granulation interpolate between different settings. This allows the composer to begin with the piano and electronic streams in sync and gradually bring them out of phase.
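The threshold interpolation can be sketched as a linear blend between the thresholds shared with the electronic streams and a set of independent target thresholds. The function names, axis keys, values and the `progress` parameter (0 at the start of the drift, 1 at full independence) are hypothetical; the paper does not state the interpolation curve.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def piano_thresholds(shared: dict[str, float], independent: dict[str, float],
                     progress: float) -> dict[str, float]:
    """Thresholds for the piano granulation at a given point in the drift.

    progress = 0.0 reproduces the electronic streams' thresholds (in sync);
    progress = 1.0 reaches the independent settings (out of phase).
    """
    t = min(max(progress, 0.0), 1.0)
    return {axis: lerp(shared[axis], independent[axis], t) for axis in shared}
```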

Preparing a patch for months of use required documentation and explanations of both the patch and Max/MSP. When this project began, the performer had encountered Max/MSP in an undergraduate course roughly five years earlier, but did not own or actively use the program. A performer does not need a deep understanding of Max/MSP or similar software in order to perform In Vitro Oink, but a basic working knowledge provides a useful mental backdrop. Put another way, a pianist does not need to understand the mechanical intricacies linking key and hammer, but a general mental model of that mechanism informs how they approach the instrument. The last two rehearsals and the dress rehearsal included a crash course in Max/MSP and troubleshooting suggestions. Anticipating how problems could arise became a preoccupation of the composer and a good exercise in thinking beyond controlling Max/MSP from a seat at the computer. When the performer’s computer crashed mid-tour and he was forced to switch operating systems, the time spent learning the basics of Max/MSP paid dividends: the performer and composer spent five hours on the phone and got the new system up and running not only for this piece but for an entire program of works for piano and electronics.

3 Electronic Interaction

In Vitro Oink has four types of electronic material: semi-indeterminate granular streams, fixed pillars, pre-recorded knocking sounds and live sampling of the piano. The performer interacts with the computer both directly and indirectly to influence and control this material. The Wii is mounted on the left arm to capture the pianist’s ancillary movements, and its accelerometer data is used to influence the semi-indeterminate granular streams and the sampled live piano. One button of the Wii is used as a trigger for moving through the seven sections of the composition. A MIDI foot pedal is used to trigger sound files of knocking on the piano, and a microphone samples the piano in real time.

3.1 Wii

The Wii enables the performer to influence the semi-indeterminate granular material by tracking the ancillary movements of the left arm. The Wii is mounted on the arm of the performer by using Velcro to secure the Wii to an elastic sheath. (See Figure 2).

The performer does not need to exert specific control over the Wii, which allows the pianist to focus on playing the piano. Cook illustrates that different performers have different amounts of mental bandwidth available for processing electronic interfaces (Cook 2001, 1). The Wii enables the pianist to influence the electronics without dedicating much of that bandwidth to them. Changing the mapping structure only seven times during a ten-minute composition ensures variety while keeping the changes few enough for the performer to keep track of. At the same time, having the Wii mounted on his arm allows the performer to influence the electronics deliberately when he chooses. This flexibility means that the performer may react naturally and intuitively to each performance situation.

The Wii reports acceleration data for the X, Y and Z planes as well as yaw and rotation. This data is sent via Bluetooth to the DarwiinRemote program and streamed from there to Max/MSP via OSC. The Max/MSP patch has thresholds in the yaw, rotation, and X, Y and Z planes that trigger envelopes. Meanwhile, the original granular cells are continually played in different semi-indeterminate patterns using Karlheinz Essl’s sel-princ Max object. The triggers open a metaphorical window into this sound world, and this Sound Object level windowing of the cells creates a stream of granular accompaniment.
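For readers unfamiliar with OSC, the sketch below encodes and decodes a message carrying float arguments according to the OSC 1.0 encoding rules (null-padded strings aligned to four bytes, a type-tag string beginning with a comma, big-endian 32-bit numbers). The address `/wii/acc` and the function names are hypothetical: DarwiinRemote’s actual address patterns are not given in this paper, and in practice Max/MSP’s own networking objects perform this parsing.

```python
import struct


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of four bytes (the OSC-string rule)."""
    raw += b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def encode_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", v) for v in values)
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + args


def _read_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read an OSC-string starting at offset; return it and the next aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    offset = end + 1
    offset += -offset % 4  # skip padding up to the next four-byte boundary
    return text, offset


def decode_message(data: bytes) -> tuple[str, list]:
    """Decode an OSC message with float ('f'), int ('i') or string ('s') arguments."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type-tag string")
    args: list = []
    for tag in tags[1:]:
        if tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag {tag!r}")
    return address, args
```

The decoded X, Y and Z values are what a threshold stage would then compare against the trigger levels.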

3.1.1 Effects of Mappings

The perception of the sonic material in the granular streams is influenced by the density and autonomy of the streams as well as the windowing. The Wii influences these parameters of the granular streams.

Density is the result of several aspects of the mapping strategy. The Wii uses accelerometers to report acceleration in the yaw, rotation, and X, Y and Z planes. The degree to which the mappings of the two streams are aligned or opposed controls the resulting density and independence of the streams. The threshold at which acceleration of the Wii triggers a window can also be altered; changing this trigger level dynamically alters the perceived density of the granular streams still further.

Roads discusses the perceptual influence of windowing in Microsound (Roads 2001, 246–247). On the Sound Object timescale, In Vitro Oink uses five different types of windows to reveal the sonic content of the streams: a short impulse-like blip, a Gaussian window, an ADSR envelope, an increasing ramp and a decreasing ramp. Each of these windows has a distinct sonic signature, which is further shaped by scaling the window in length and amplitude. Each section has a range within which this scaling may occur. The individual streams can be made to sound more or less similar by choosing similar windows and/or scaling them to similar lengths or amplitudes.
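The five window families can be sketched as functions of a length in samples, with a separate amplitude scale. The decay rate of the blip, the Gaussian width, the ADSR proportions and the function names are assumptions chosen only to make the shapes distinct; the patch’s actual curves are not specified.

```python
import math


def blip(n: int) -> list[float]:
    """Short impulse-like window: instant attack, steep exponential decay."""
    return [math.exp(-8.0 * i / n) for i in range(n)]


def gaussian(n: int, sigma: float = 0.15) -> list[float]:
    """Bell curve centred in the window; sigma is a fraction of the length."""
    centre = (n - 1) / 2
    return [math.exp(-0.5 * ((i - centre) / (sigma * n)) ** 2) for i in range(n)]


def adsr(n: int, a: float = 0.1, d: float = 0.2, s: float = 0.6, r: float = 0.3) -> list[float]:
    """a, d and r are fractions of the window length; s is the sustain level."""
    na, nd, nr = int(a * n), int(d * n), int(r * n)
    ns = max(n - na - nd - nr, 0)
    env = [i / na for i in range(na)]                       # attack 0 -> 1
    env += [1.0 - (1.0 - s) * i / nd for i in range(nd)]    # decay 1 -> s
    env += [s] * ns                                         # sustain
    env += [s * (1 - i / nr) for i in range(1, nr + 1)]     # release s -> 0
    return env[:n]


def ramp_up(n: int) -> list[float]:
    return [i / max(n - 1, 1) for i in range(n)]


def ramp_down(n: int) -> list[float]:
    return [1.0 - i / max(n - 1, 1) for i in range(n)]


WINDOWS = {"blip": blip, "gaussian": gaussian, "adsr": adsr,
           "ramp_up": ramp_up, "ramp_down": ramp_down}


def make_window(kind: str, length: int, amplitude: float = 1.0) -> list[float]:
    """Build one of the five window shapes, scaled in length and amplitude."""
    return [amplitude * v for v in WINDOWS[kind](length)]
```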

As noted in section 2, the Wii was used to control improvisations that were translated into piano notation via an FFT-based process, and the patch developed for these improvisations was eventually adapted into the final performance patch. As section 2.2.1 notes, experimentation with different fixed values for the windowing led to the ranges that are initialized in each of the seven sections of In Vitro Oink. In addition to the scaling of amplitude and length and the type of window, the performance patch gives each section a range of possibilities for the choice between linear and exponential curves and for density. Because the windowing and density are specified as ranges, each performance is unique. Where the computer selects a value within a range is determined by the cumulative and local number of thresholds surpassed. This indirect mapping of the ancillary movements of the performer’s left arm onto the control structure effectively remaps the same data in multiple ways. As a result, some mappings are evident to the audience and others are not, yet there is an organic connection between the movements and sounds of the performance.
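The selection within a section’s ranges might look like the following sketch, which blends a local count of recent threshold crossings with the cumulative count since the section began and maps the result onto each range, linearly or exponentially. The paper does not give the actual formula, so the class and function names, the equal weighting, the normalizing maxima (8 and 200) and the example ranges are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SectionRanges:
    """Ranges initialised when a section starts (all values here are hypothetical)."""
    length_ms: tuple[float, float]
    amplitude: tuple[float, float]
    density: tuple[float, float]      # windows per second
    curve: str = "lin"                # "lin" or "exp" mapping within each range


def choose(bounds: tuple[float, float], position: float, curve: str = "lin") -> float:
    """Pick a value inside (low, high) at position 0..1, linearly or exponentially."""
    low, high = bounds
    p = min(max(position, 0.0), 1.0)
    if curve == "exp":
        return low * (high / low) ** p   # requires low > 0
    return low + (high - low) * p


def position_from_crossings(local: int, cumulative: int, local_max: int = 8,
                            cumulative_max: int = 200, weight: float = 0.5) -> float:
    """Blend recent threshold crossings with those since the section began (0..1)."""
    local_part = min(local / local_max, 1.0)
    cumulative_part = min(cumulative / cumulative_max, 1.0)
    return weight * local_part + (1 - weight) * cumulative_part


def parameters_for(section: SectionRanges, local: int, cumulative: int) -> dict[str, float]:
    """Window length, amplitude and density for the current crossing counts."""
    pos = position_from_crossings(local, cumulative)
    return {name: choose(getattr(section, name), pos, section.curve)
            for name in ("length_ms", "amplitude", "density")}
```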

3.2 MIDI Foot Pedal and Wii Event Triggering

During rehearsals, the performer had difficulty producing a wide range of knocking sounds on the piano. The challenge stemmed from having to move very quickly from a traditional playing position to the position required for a particular knocking sound. A further challenge was that the piece would be performed on many different pianos, each with a different layout. The solution was found in software: high-quality recordings of a wide range of knocking sounds supplement the live knocks of the pianist. Using the MIDI foot pedal, the performer can easily deploy these recorded knocks when called for in the score and, as he sees fit, during the electronic pillars.
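A sketch of the pedal handling follows, assuming the pedal sends either a note-on or a controller message and that recordings should not repeat back to back. The file names, the `KnockPlayer` class, the no-immediate-repeat rule and the treatment of controller values of 64 and above as "pressed" (the usual convention for MIDI switch pedals) are assumptions rather than details of the performance patch.

```python
import random

NOTE_ON = 0x90
CONTROL_CHANGE = 0xB0


def pedal_pressed(message: tuple[int, int, int]) -> bool:
    """True for a note-on with non-zero velocity or a controller value of 64 or more."""
    status, _data1, data2 = message
    kind = status & 0xF0  # strip the MIDI channel
    if kind == NOTE_ON:
        return data2 > 0  # note-on with velocity 0 means note-off
    if kind == CONTROL_CHANGE:
        return data2 >= 64
    return False


class KnockPlayer:
    """Chooses a recorded knock per pedal press, never the same file twice in a row."""

    def __init__(self, files: list[str], rng: random.Random):
        self.files = list(files)
        self.rng = rng
        self.last = None

    def handle(self, message: tuple[int, int, int]):
        """Return the sound file to play, or None if the message is not a press."""
        if not pedal_pressed(message):
            return None
        choices = [f for f in self.files if f != self.last] or self.files
        self.last = self.rng.choice(choices)
        return self.last
```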

3.3 Live Sampling

The composition begins with a clear distinction between the live piano on one hand and the electronic granular streams and fixed electronic pillars on the other. Once this distinction is established, the piano is sampled and delayed with an array of fifteen delay lines. This sound is inconspicuously mixed into the electronic granular streams by using the same window functions. Independence is then gained by changing the amplitude, range, length, density, temporal position and eventually the windowing of the granulated piano sounds over time.

In the fourth section, the delayed piano sounds are allowed to push into the foreground and obscure the pianist, who can interact with this material as one improviser interacts with another. During the pillar that follows section four, the performer can improvise with the material in the delay lines by using the Wii to open windows onto the granulated piano sounds. In the following sections the granulated piano returns to the background. The performer must therefore be able to adapt to the various ways in which the granulated piano is deployed. This unpredictability makes the piece unique in yet another way with each performance, providing a novel means of engaging both the performer and the audience.

Bibliography

Berg, Paul. AC Toolbox (version 4.5.1, 2010). Software. http://www.koncon.nl/downloads/ACToolbox

Caires, Carlos. IRIN: A Structured Sound Editor for Micromontage (version 2.4, 2009). Software. [link]

Cook, Perry. “Principles for Designing Computer Music Controllers.” Proceedings of the International Conference on New Instruments for Musical Expression (NIME) 2001 (Seattle WA, 1–2 April 2001).

Essl, Karlheinz. “RTC-lib 5.0 for Max5: Abstract.” Software Library. Last updated 15 November 2010. Available on the author’s website http://www.essl.at/works/rtc.html

Hirolog. DarwiinRemote (version 0.6). Open Source Software, 2006–10. http://blog.hiroaki.jp/2006/12/000433.html

Klingbeil, Michael. “Software for Spectral Analysis, Editing and Synthesis.” Proceedings of the International Computer Music Conference (ICMC) 2005: Free Sound (Barcelona, Spain: L’Escola Superior de Música de Catalunya, 5–9 September 2005). Dissertation available on the author’s website http://www.klingbeil.com/data/Klingbeil_Dissertation_web.pdf

Langelaan, Marloes L.P., Kristel J.M. Boonen, Roderick B. Polak, Frank P.T. Baaijens, Mark J. Post and Daisy W.J. van der Schaft. “Meet the New Meat: Tissue engineered skeletal muscle.” Trends in Food Science & Technology 21/2 (February 2010). Published online 11 November 2009.

Roads, Curtis. Microsound. Cambridge, MA: MIT Press, 2001.

_____. “The Art of Articulation: The Electroacoustic Music of Horacio Vaggione.” Contemporary Music Review 24/4 & 5 (October 2005) “Horacio Vaggione: Composition Theory,” pp. 295–309.

Rogers, Lois. “Scientists Grow Pork Meat in a Laboratory.” The Sunday Times. London, 29 November 2009.

Zicarelli, David. “How I Learned to Love a Program That Does Nothing.” Computer Music Journal 26/4 (Winter 2002), pp. 44–51.
